The post AI Is Writing the Next Wave of Software Vulnerabilities — Are We “Vibe Coding” Our Way to a Cyber Crisis? appeared first on RunSafe Security.

For decades, cybersecurity relied on shared visibility into common codebases. When a flaw was found in OpenSSL or Log4j, the community could respond: identify, share, patch, and protect.

AI-generated code breaks that model. Instead of reusing an open source component and complying with its license terms, a developer can have AI write a near-identical version that is not the exact open source code.

I recently attended SINET New York 2025, joining dozens of CISOs and security leaders to discuss how AI is reshaping our threat landscape. One key concern surfaced repeatedly: Are we vibe coding our way to a crisis?

Losing the Commons of Vulnerability Intelligence

At the SINET New York event, Tim Brown, VP Security & CISO at SolarWinds, pointed out that with AI coding, we could lose insights into common third-party libraries.

He’s right. If every team builds bespoke code through AI prompts, including code that resembles but differs from open source components, there’s no longer a shared foundation. Vulnerabilities become one-offs. If we are not using the same components, we can’t share vulnerability information about them. A flaw in your product could also exist in someone else’s, and they would have no way of knowing they have it.

The ripple effect is enormous. Without shared components, there’s no community-driven detection, no coordinated patching, and no visibility into risk exposure across the ecosystem. Every organization could be on its own island of unknown code.

AI Multiplies Vulnerabilities

Even more concerning, AI doesn’t “understand” secure coding the way experienced engineers do. It generates code based on probabilities and its training data. A known vulnerability could easily reappear in AI-generated code, alongside any new issues.

Veracode’s 2025 GenAI Code Security Report found that “across all models and all tasks, only 55% of generation tasks result in secure code.” That means that “in 45% of the tasks the model introduces a known security flaw into the code.”

For those of us at RunSafe, where we focus on eliminating memory safety vulnerabilities, that statistic is especially concerning. Memory-handling errors such as buffer overflows, use-after-free bugs, and heap corruption are among the most dangerous software vulnerabilities in history, and they have been at the center of incidents like Heartbleed, URGENT/11, and the ongoing Volt Typhoon campaign.

Now, the same memory errors could appear in countless unseen ways. AI is multiplying risk one line of insecure code at a time.

Signature Detection Can’t Keep Up

Nick Kotakis, former SVP and Global Head of Third-Party Risk at Northern Trust Corporation, underscored another emerging problem: signature detection can’t keep up with AI’s ability to obfuscate its code.

Traditional signature-based defenses depend on pattern recognition — identifying threats by their known fingerprints. But AI-generated code mutates endlessly. Each new build can behave differently and conceal new attack vectors.

In this environment, reactive defenses like signature detection or rapid patching simply can’t scale. By the time a signature exists, the exploit may already have evolved.

Tackling the Memory Safety Challenge

So how do we protect against vulnerabilities that no one has seen — and may never report?

At RunSafe, we focus on one of the most persistent and damaging categories of software risk: memory safety vulnerabilities. Our goal is to address two of the core challenges introduced by AI-generated code:

- Lack of standardization, as every AI-written component can be unique

- No available patches, as many vulnerabilities may never be disclosed

By embedding runtime exploit prevention directly into applications and devices, RunSafe prevents the exploitation of memory-based vulnerabilities, including unknown and zero-day vulnerabilities.

That means that even before a patch exists, and even before a vulnerability is discovered, RunSafe Protect guards code against memory-based exploitation, whether it’s written by humans, AI, or both.

Building AI Code Safely

AI-generated code is here to stay. It has the potential to speed up development, lower costs, and unlock new capabilities that would have taken teams months or years to build manually.

However, when every product’s codebase is unique, traditional defenses — shared vulnerability intelligence, signature detection, and patch cycles — can’t keep up. The diversity that makes AI powerful also makes it unpredictable.

That’s why building secure AI-driven systems requires a new mindset that assumes vulnerabilities will exist and designs in resilience from the start. Whether it’s runtime protection, secure coding practices, or proactive monitoring, security must evolve alongside AI.

At RunSafe, we’re focused on one critical piece of that puzzle: protecting software from memory-based exploits before they can be weaponized. As AI continues to redefine how we write code, it’s our responsibility to redefine how we protect it.

Learn more about Protect, RunSafe’s code protection solution built to defend software at runtime against both known and unknown vulnerabilities long after the last patch is available.

The post Fixing OT Security: Why Memory Safety and Supply Chain Visibility Matter More Than Ever appeared first on RunSafe Security.

What Makes OT Security So Hard to Fix?

Unlike traditional IT, OT systems power critical infrastructure like energy grids, water management, manufacturing floors, and more. These devices often run on low-powered hardware with long lifespans and were never designed for modern connectivity. They were secured by locked doors, not firewalls.

Fast forward to today, and these devices are increasingly connected to the internet—and exposed.

Memory Safety: Still the Achilles’ Heel

Many vulnerabilities in common OT products are caused by buffer overflows or memory corruption flaws. While systemic, these vulnerabilities can be proactively addressed with memory safety protections.

“If you can eliminate entire classes of vulnerabilities before software hits the field, you don’t need to play whack-a-mole with patches,” says Joe Saunders, founder and CEO of RunSafe Security.

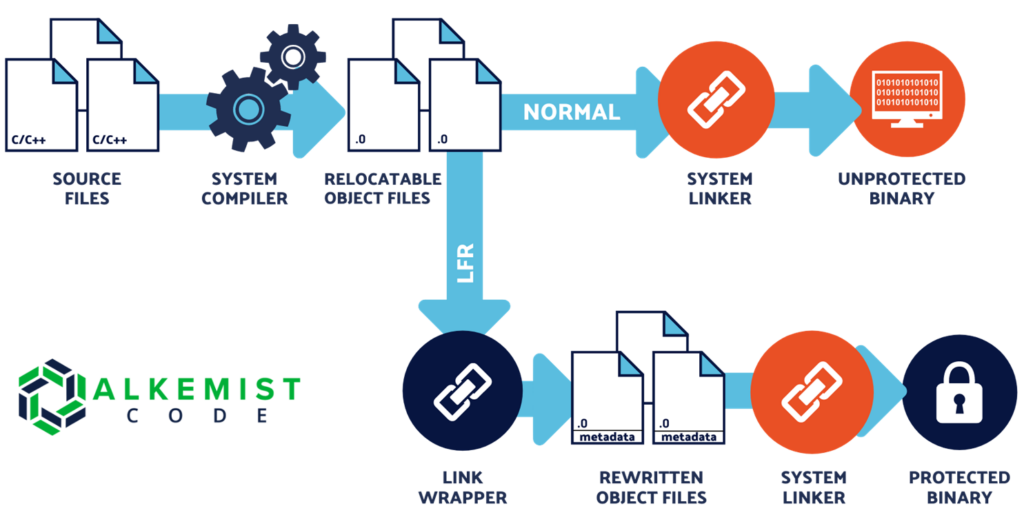

RunSafe’s approach focuses on preventing exploitation at the binary level, effectively making vulnerabilities non-exploitable without requiring post-deployment patching.

The Growing Complexity of the OT Software Supply Chain

Even the simplest industrial device could include thousands of open-source software components. Without visibility into the Software Bill of Materials (SBOM), organizations are left guessing about what’s inside.

“If a vendor can’t tell you what’s in their product, chances are, they don’t know either,” says Saunders.

Knowing your software’s components—and their vulnerabilities—is critical for compliance. It’s also critical for managing risk across the supply chain, identifying attack surfaces, and making smart, prioritized decisions.

Why Patching Alone Isn’t Enough

Patching in OT isn’t like clicking “update” on your phone. It can require physical access to remote locations and months of planning. Worse, many vulnerabilities go unpatched for 180+ days, leaving critical infrastructure exposed for far too long.

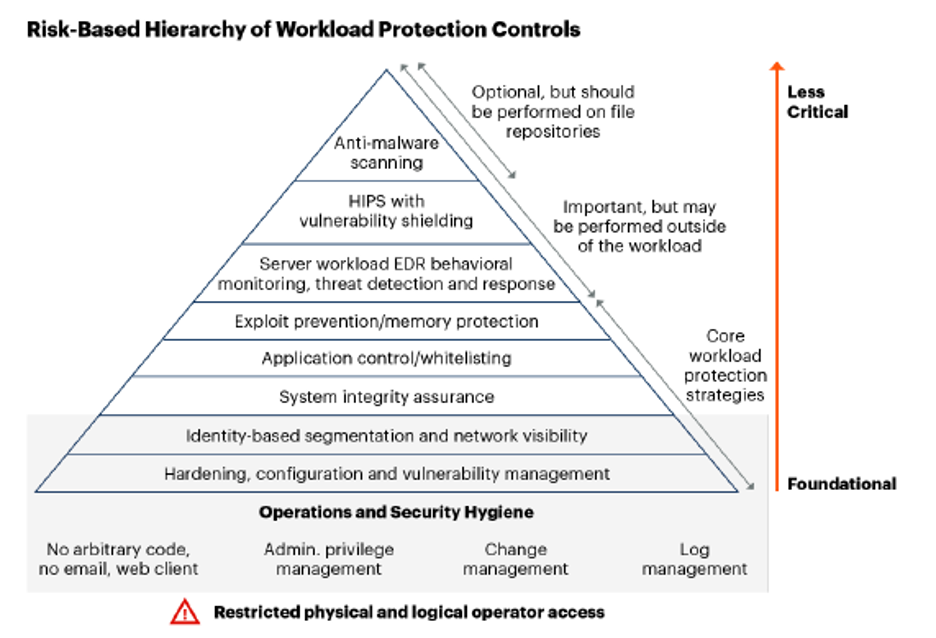

This makes proactive protection methods—like RunSafe’s memory randomization techniques and runtime protection—essential tools in a modern OT defense strategy.

What Should Product Manufacturers and Asset Owners Do Now?

Joe Saunders outlines a simple yet powerful framework:

For OT Product Manufacturers:

- Generate a complete SBOM for each product.

- Identify known vulnerabilities.

- Prioritize fixes based on impact and exploitability.

- Adopt Secure by Design tools that eliminate entire classes of vulnerabilities at build time.

For Asset Owners:

- Request SBOMs from all vendors.

- Analyze vulnerabilities across all systems—from HVAC to power to physical access.

- Demand security transparency and memory safety protections from suppliers.
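The “prioritize fixes based on impact and exploitability” step can be sketched in Rust. This is a minimal illustration, not a RunSafe tool. It assumes each SBOM component has already been matched to vulnerabilities, and the `Finding` type, its fields, and the sample component names and IDs are hypothetical placeholders. The function ranks known-exploited findings first (exploitability) and then orders by CVSS score (impact).

```rust
/// One vulnerability matched against an SBOM component.
/// Hypothetical type; names and IDs used with it are placeholders.
#[derive(Debug, Clone)]
pub struct Finding {
    pub component: String,
    pub vuln_id: String,
    /// CVSS base score, 0.0 to 10.0, used here as a proxy for impact.
    pub cvss: f32,
    /// Whether the issue is on a known-exploited list such as CISA KEV,
    /// used here as a proxy for exploitability.
    pub known_exploited: bool,
}

/// Rank findings: known-exploited first, then by descending CVSS score.
pub fn prioritize(mut findings: Vec<Finding>) -> Vec<Finding> {
    findings.sort_by(|a, b| {
        // `true` sorts after `false`, so compare b to a to put exploited issues first.
        b.known_exploited
            .cmp(&a.known_exploited)
            // total_cmp gives a total order on floats, so the sort never sees NaN ambiguity.
            .then(b.cvss.total_cmp(&a.cvss))
    });
    findings
}
```

In practice, the matching step would come from an SBOM scanner, and teams may also weigh factors such as network exposure. This ordering is one reasonable starting point, not a complete risk model.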

This shift toward accountability and visibility reduces operational costs and future-proofs infrastructure.

Regulation, Risk, and the Path Forward

Fixing OT security won’t happen with checklists and wishful thinking. It’ll take:

- Regulation that incentivizes change, like the Cyber Resilience Act

- Automation that scales patchless security

- Shared responsibility across the ecosystem

“The real question isn’t whether we can fix OT security,” Saunders concludes. “It’s whether we want to—and who’s willing to lead the charge.”

The post Reducing Your Exposure to the Next Zero Day: A New Path Forward appeared first on RunSafe Security.

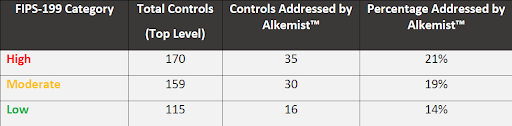

Our goal at RunSafe is to give defenders a leg up against attackers, so we wondered: What if we could quantify the seemingly unquantifiable risk of zero-day vulnerabilities? What if we could implement meaningful zero-day protection for systems before vulnerabilities are even discovered?

To dig into these questions, we partnered with Ulf Kargén, Assistant Professor at Linköping University, who developed the CReASE (Code Reuse Attack Surface Estimation) tool, which underpins RunSafe’s Risk Reduction Analysis.

Quantifying the Unquantifiable: A New Approach to Zero-Day Risk

VulnCheck, in their “2024 Trends in Vulnerability Exploitation” report, found that 23.6% of all actively exploited vulnerabilities in 2024 were zero-day flaws. We’re seeing nation-state actors like Volt Typhoon and Salt Typhoon specifically target these unknown vulnerabilities to achieve their objectives, as noted in research from Google Threat Intelligence Group, which tracked 75 zero-day vulnerabilities exploited in the wild in 2024.

Most of the industry’s response to zero days has been to detect and prevent threats by looking for indicators of attack, suspicious behavior, and patterns that might tip us off. But attackers have become very good at hiding and masking their activity. What has been left wide open is the underlying risk in the software itself. Instead of securing the foundation, we’ve built bigger walls around our systems.

That might work in a data center where systems live behind firewalls and racks of gear. But in the world of IoT and embedded devices, there are no walls. These systems are deployed far from the protection of the network where they are alone, exposed, and vulnerable. They need to be self-reliant. They need to be like samurai—able to defend themselves without backup.

Because of this, we saw the need for a method to quantify the risk of zero days and a way to make devices intrinsically more robust against exploitation, regardless of what vulnerabilities might exist within them. If you can quantify risk with real technical rigor, you can make smart decisions to reduce your attack surface and make a compelling argument to leadership on where to focus resources.

Return-Oriented Programming: Understanding the Threat

Modern cyberattacks frequently use a technique called Return-Oriented Programming (ROP). When traditional code injection attacks became difficult due to improved security measures, attackers evolved to use “code reuse” attacks instead.

Rather than injecting new code, these exploits repurpose a program’s own code, chaining together existing snippets (called “gadgets”) to create malicious functionality. The program’s own code is weaponized against itself.

This insight gives us a way to measure memory-based zero-day risk specifically. While it’s impossible to predict all potential vulnerabilities in code, we can analyze whether useful ROP chains exist in a binary that could lead to the successful exploitation of a vulnerability.

Quantifying Zero-Day Risk with CReASE

Kargén built CReASE to quantify this previously unmeasurable risk. You can listen to Ulf discuss the tool and how it works in this webinar.

CReASE scans binaries to identify potential ROP gadgets and determines whether they could be chained together to perform dangerous system calls. It doesn’t try to predict where specific vulnerabilities might exist but instead analyzes whether the code structure would allow successful exploitation if a vulnerability were discovered.

It answers the question: Are any useful ROP chains available to an attacker?
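The toy Rust sketch below makes “gadget” concrete by performing the first step any code-reuse analysis needs: finding short byte sequences that end in an x86-64 `ret` instruction (opcode 0xC3). It is not how CReASE works. CReASE goes much further, using data flow analysis to decide whether gadgets can be chained into dangerous system calls. The function names and the `max_len` parameter are illustrative assumptions.

```rust
/// Opcode byte of the x86-64 near `ret` instruction.
const RET: u8 = 0xC3;

/// A candidate gadget: the byte range `start..end`, where `code[end - 1]` is `ret`.
#[derive(Debug, PartialEq, Eq, Clone, Copy)]
pub struct Candidate {
    pub start: usize,
    pub end: usize,
}

/// List every byte window of at most `max_len` bytes (including the `ret`)
/// that ends in a `ret`. A real scanner would decode each window and drop
/// ones that are not valid instruction sequences; this only does the byte search.
pub fn find_candidates(code: &[u8], max_len: usize) -> Vec<Candidate> {
    let mut out = Vec::new();
    for (i, &byte) in code.iter().enumerate() {
        if byte != RET {
            continue;
        }
        // Earliest start that keeps the window within `max_len` bytes.
        // With max_len == 0 this is i + 1, so the range below is empty.
        let lowest = (i + 1).saturating_sub(max_len);
        for start in lowest..=i {
            out.push(Candidate { start, end: i + 1 });
        }
    }
    out
}

/// True if the candidate is exactly `pop r64; ret` for rax through rdi
/// (opcodes 0x58..=0x5F followed by 0xC3), the classic gadget for loading
/// an attacker-controlled stack value into a register.
pub fn is_pop_ret(code: &[u8], c: Candidate) -> bool {
    c.end - c.start == 2 && (0x58..=0x5F).contains(&code[c.start])
}
```

For example, the bytes `58 C3 90 5F C3` (pop rax; ret; nop; pop rdi; ret) yield two `pop; ret` gadgets. A production gadget finder would disassemble each window, and this byte scan deliberately overcounts.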

Unlike existing tools that focus on guaranteeing working exploit chains (often sacrificing scalability or completeness), CReASE uses novel data flow analysis to achieve both scalability and completeness comparable to a human attacker.

The result is a risk scoring system that quantifies the probability that the next memory-based zero-day vulnerability could be exploited to achieve specific dangerous outcomes like remote code execution, file system manipulation, or privilege escalation.

The CReASE tool underlies RunSafe’s Risk Reduction Analysis, which you can use to analyze your exposure to CVEs and memory-based zero days.

The Memory Safety Challenge

To understand why this approach is so powerful, we need to recognize two critical facts:

- 70% of vulnerabilities in compiled code are memory safety vulnerabilities

- 75% of vulnerabilities used in zero-day exploits are also memory safety vulnerabilities

These numbers tell us that memory safety vulnerabilities constitute a significant risk in our codebases. When a memory vulnerability is exploited, attackers can execute arbitrary code, take control of devices, crash systems, exfiltrate data, or deploy ransomware.

By focusing our risk quantification and mitigation efforts on memory-based vulnerabilities specifically, we’re addressing a common and dangerous attack vector for zero-day exploits.

Memory Randomization: Making Zero-Day Vulnerabilities Inert

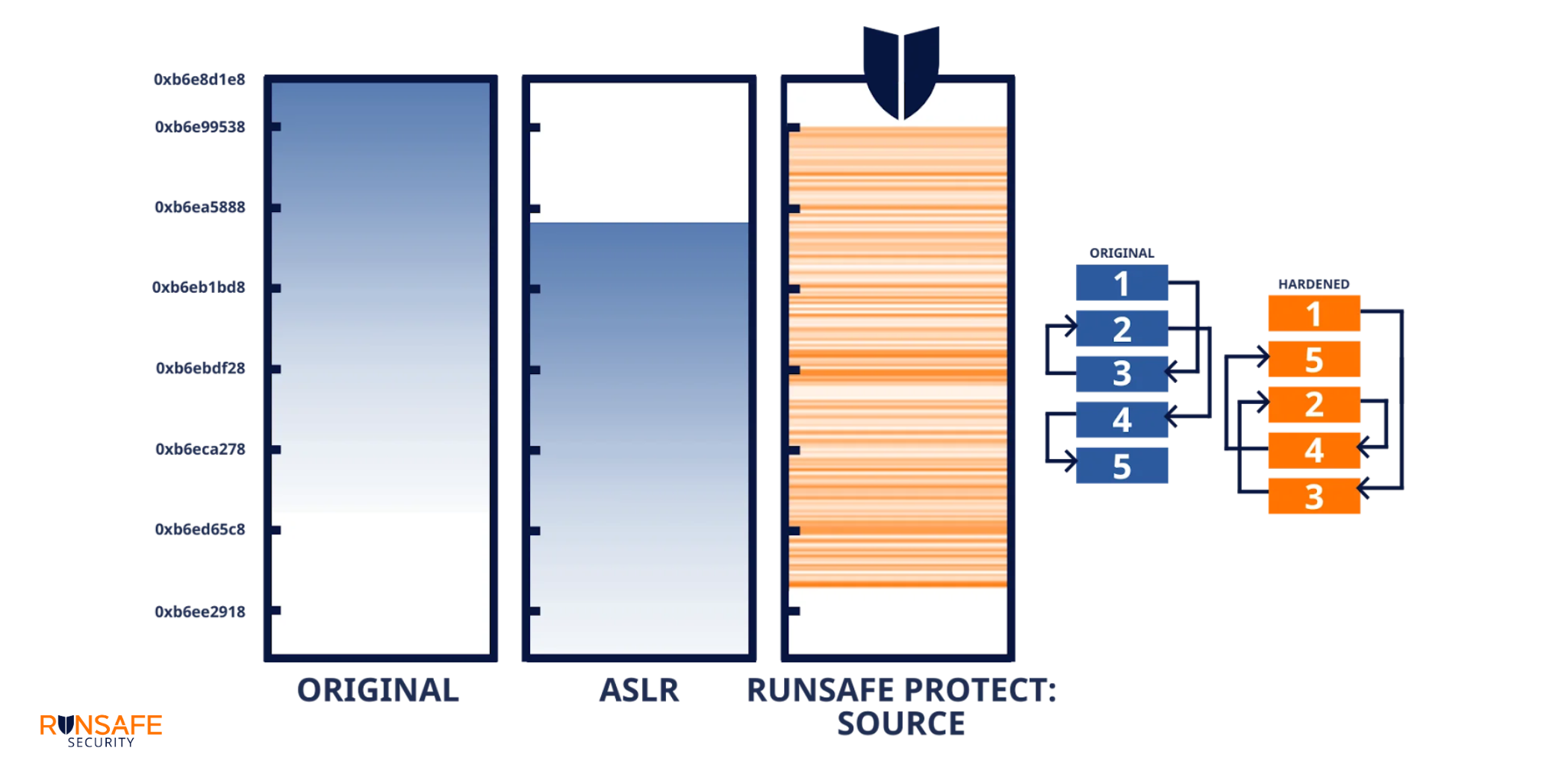

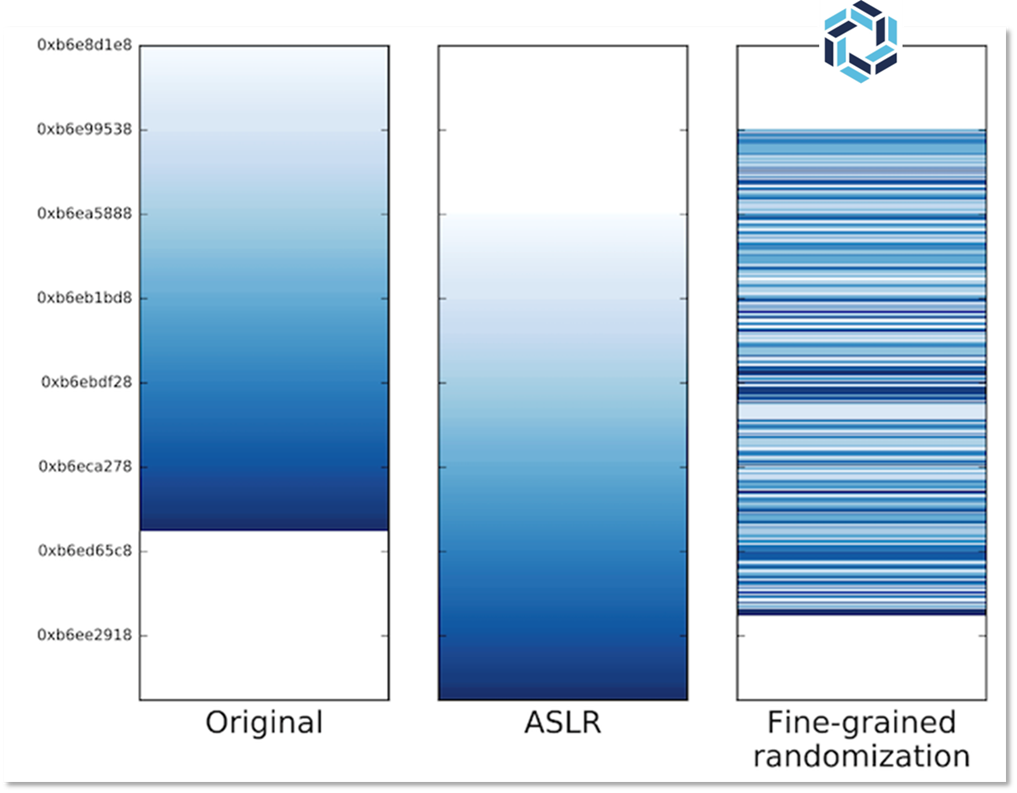

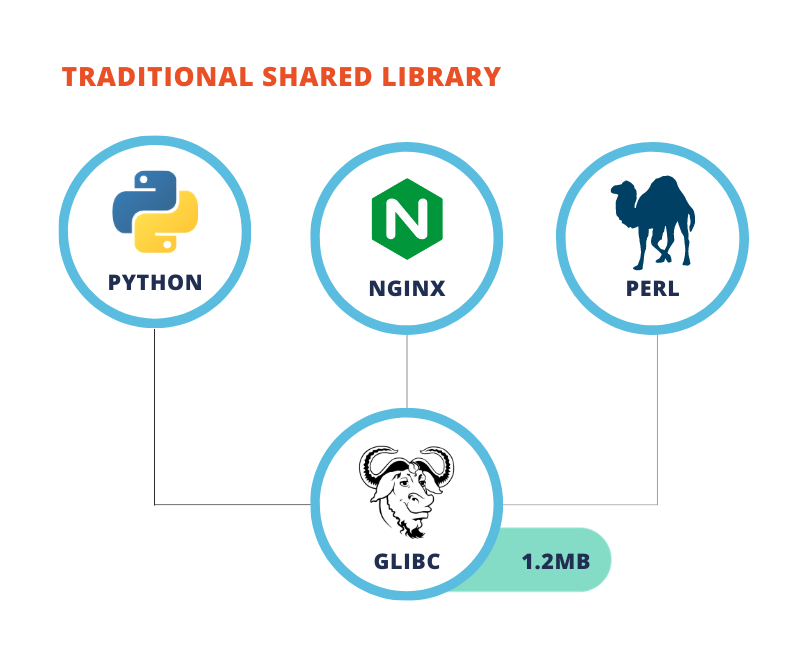

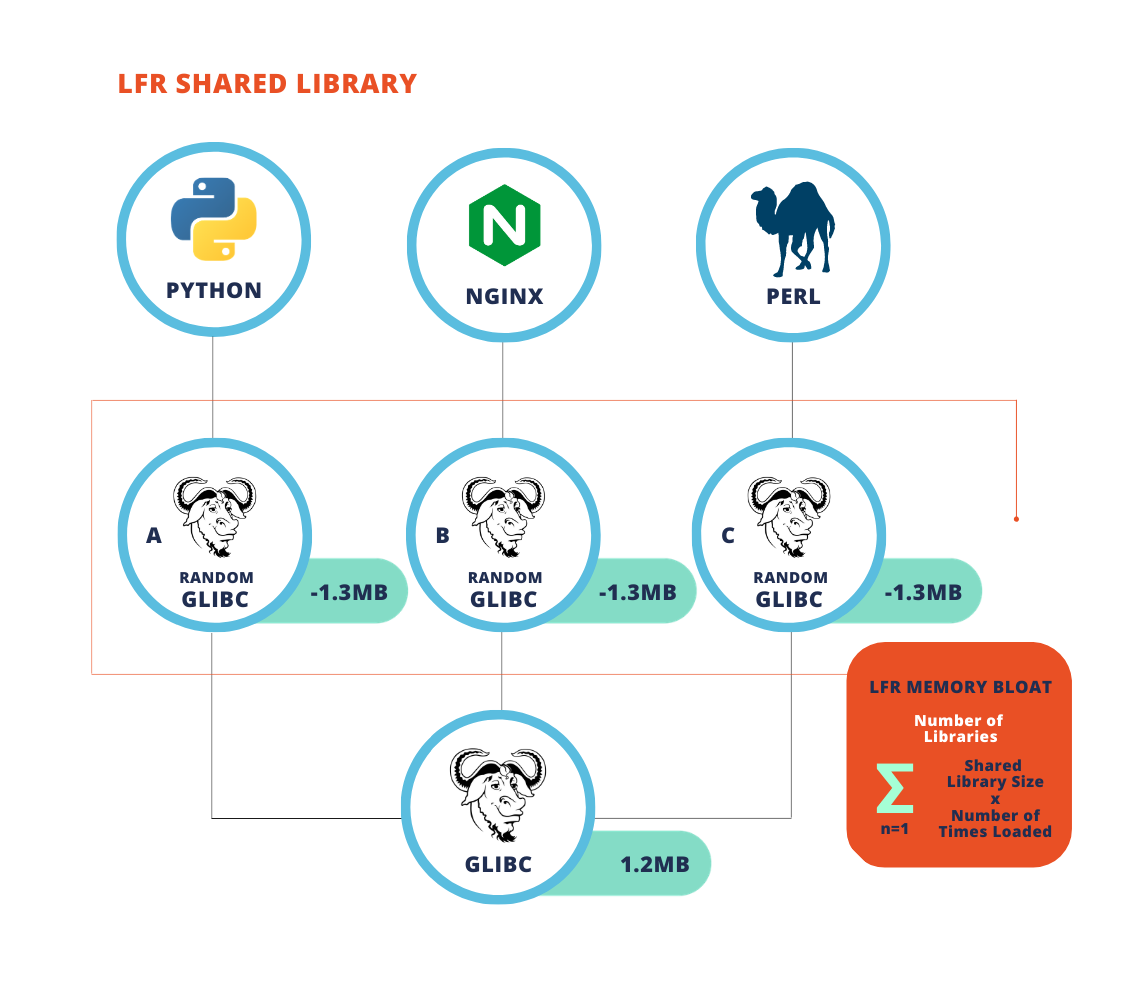

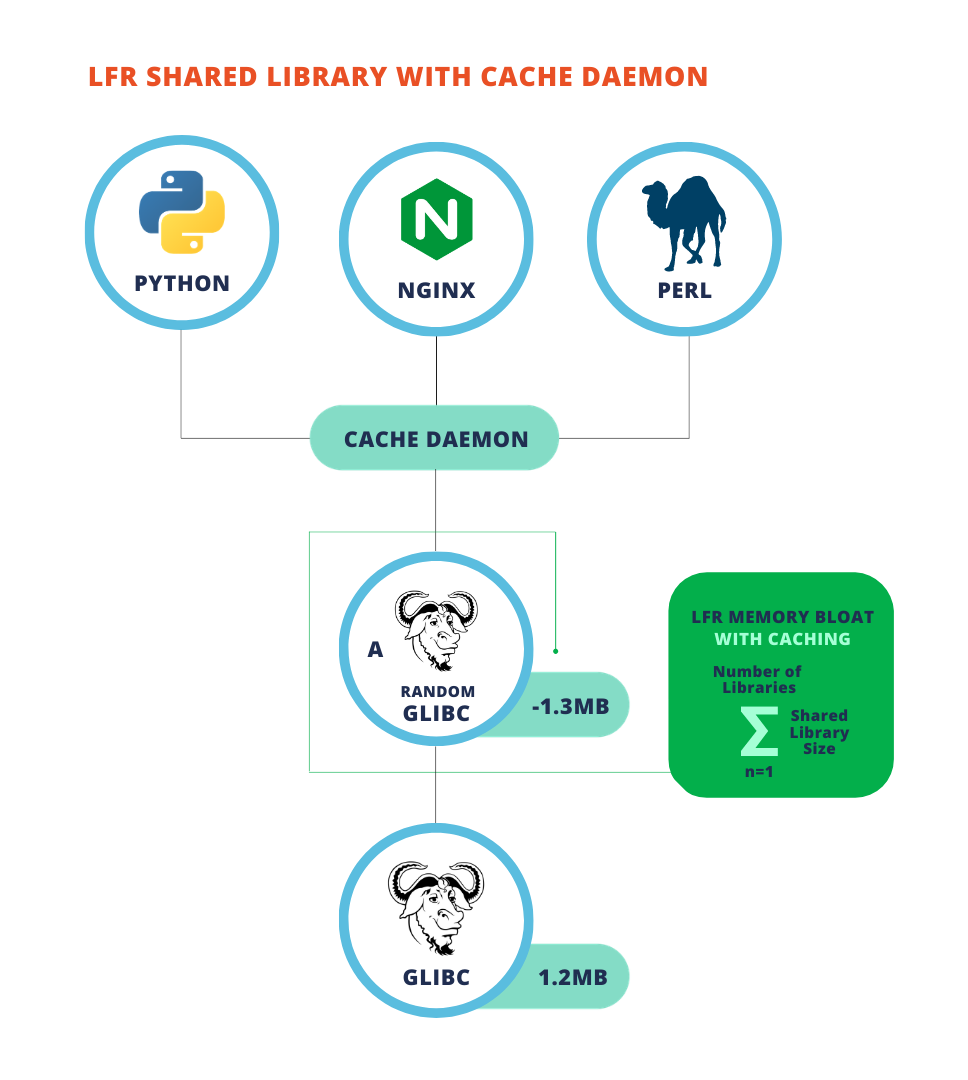

Once we quantify the risk, what can be done about it? Traditional memory protection like Address Space Layout Randomization (ASLR) provides some security by randomizing where blocks of code are loaded in memory. However, ASLR still loads functions contiguously, so the offsets between them never change. If an attacker obtains a single code address through an information leak, they can compute the location of every other function and gadget in that module.

RunSafe’s approach takes randomization to the function level. Instead of randomizing only where the entire binary loads, we randomize the placement of each function independently. In a typical binary with 280 functions, this creates 280! (280 factorial) possible memory layouts, more than 10^400 combinations.
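The layout arithmetic is easy to check. Because 280! overflows every built-in numeric type, the Rust sketch below works with its base-10 logarithm instead, summing log10(k) for k from 1 to n. The function name is illustrative.

```rust
/// log10(n!) computed as the sum of log10(k) for k = 1..=n.
/// Working in log space avoids overflowing any integer or float type.
pub fn log10_factorial(n: u32) -> f64 {
    (1..=n).map(|k| f64::from(k).log10()).sum()
}
```

For n = 280, the result is roughly 565. That puts 280! on the order of 10^565, comfortably above the 10^400 figure.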

Even if a memory-based zero-day vulnerability exists, with RunSafe’s Load-time Function Randomization (LFR), attackers can’t reliably construct a working ROP chain because they can’t predict where the necessary gadgets will be located. We’ve effectively made the vulnerability inert.
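The difference between ASLR and function-level randomization can be illustrated with a toy Rust model that only computes addresses. This is a sketch under stated assumptions, not RunSafe’s LFR implementation. A real loader must also rewrite every call, jump, and relocation that points at a moved function, and it would seed from a secure entropy source rather than the SplitMix64 generator used here. `contiguous_layout`, `shuffled_layout`, and the function sizes are hypothetical.

```rust
/// SplitMix64: a small, well-known non-cryptographic PRNG, used only for illustration.
struct SplitMix64(u64);

impl SplitMix64 {
    fn next_u64(&mut self) -> u64 {
        self.0 = self.0.wrapping_add(0x9E37_79B9_7F4A_7C15);
        let mut z = self.0;
        z = (z ^ (z >> 30)).wrapping_mul(0xBF58_476D_1CE4_E5B9);
        z = (z ^ (z >> 27)).wrapping_mul(0x94D0_49BB_1331_11EB);
        z ^ (z >> 31)
    }
}

/// ASLR-style layout: functions keep their original order and only `base` moves.
/// Returns each function's start address, indexed by function number.
pub fn contiguous_layout(sizes: &[usize], base: usize) -> Vec<usize> {
    let mut addrs = Vec::with_capacity(sizes.len());
    let mut cursor = base;
    for &size in sizes {
        addrs.push(cursor);
        cursor += size;
    }
    addrs
}

/// Function-level layout: shuffle the placement order (Fisher-Yates), then pack
/// the functions back to back starting at `base`.
pub fn shuffled_layout(sizes: &[usize], base: usize, seed: u64) -> Vec<usize> {
    let mut order: Vec<usize> = (0..sizes.len()).collect();
    let mut rng = SplitMix64(seed);
    for i in (1..order.len()).rev() {
        // Pick j in 0..=i (the slight modulo bias is ignored in this sketch).
        let j = (rng.next_u64() % (i as u64 + 1)) as usize;
        order.swap(i, j);
    }
    let mut addrs = vec![0; sizes.len()];
    let mut cursor = base;
    for &f in &order {
        addrs[f] = cursor;
        cursor += sizes[f];
    }
    addrs
}
```

With `contiguous_layout`, leaking one function’s address reveals all the others, because the offsets between them are fixed. With `shuffled_layout`, those offsets depend on the seed, so a leaked address says little about where a needed gadget sits.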

Taking Action: Zero-Day Vulnerability Protection

The most effective approach to memory-based zero-day risk combines analysis and protection:

- Analyze your binaries to understand your current risk profile

- Apply function-level randomization to neutralize potential exploits

- Measure the risk reduction to quantify your improved security posture

Our customers typically see a risk reduction that changes the odds from “the next zero-day can compromise the system” to “maybe one in the next 10,000 zero-days might succeed.” That’s a dramatic improvement in security posture.

While no solution can eliminate all types of zero-day vulnerabilities, addressing memory-based vulnerabilities targets the most common and dangerous attack vector. In a world where zero-days will always exist, making them ineffective is the next best thing to eliminating them entirely.

Want to try out the Risk Reduction Analysis tool for yourself? All you’ll need to do is create an account and upload a binary to get your results.

Run an analysis here.

The post Memory Safety KEVs Are Increasing Across Industries appeared first on RunSafe Security.

In a webinar hosted by Dark Reading, RunSafe Security CTO Shane Fry and VulnCheck Security Researcher Patrick Garrity discussed the rise of memory safety vulnerabilities listed in the KEV catalog and shared ways organizations can manage the risk.

Memory Safety KEVs on the Rise

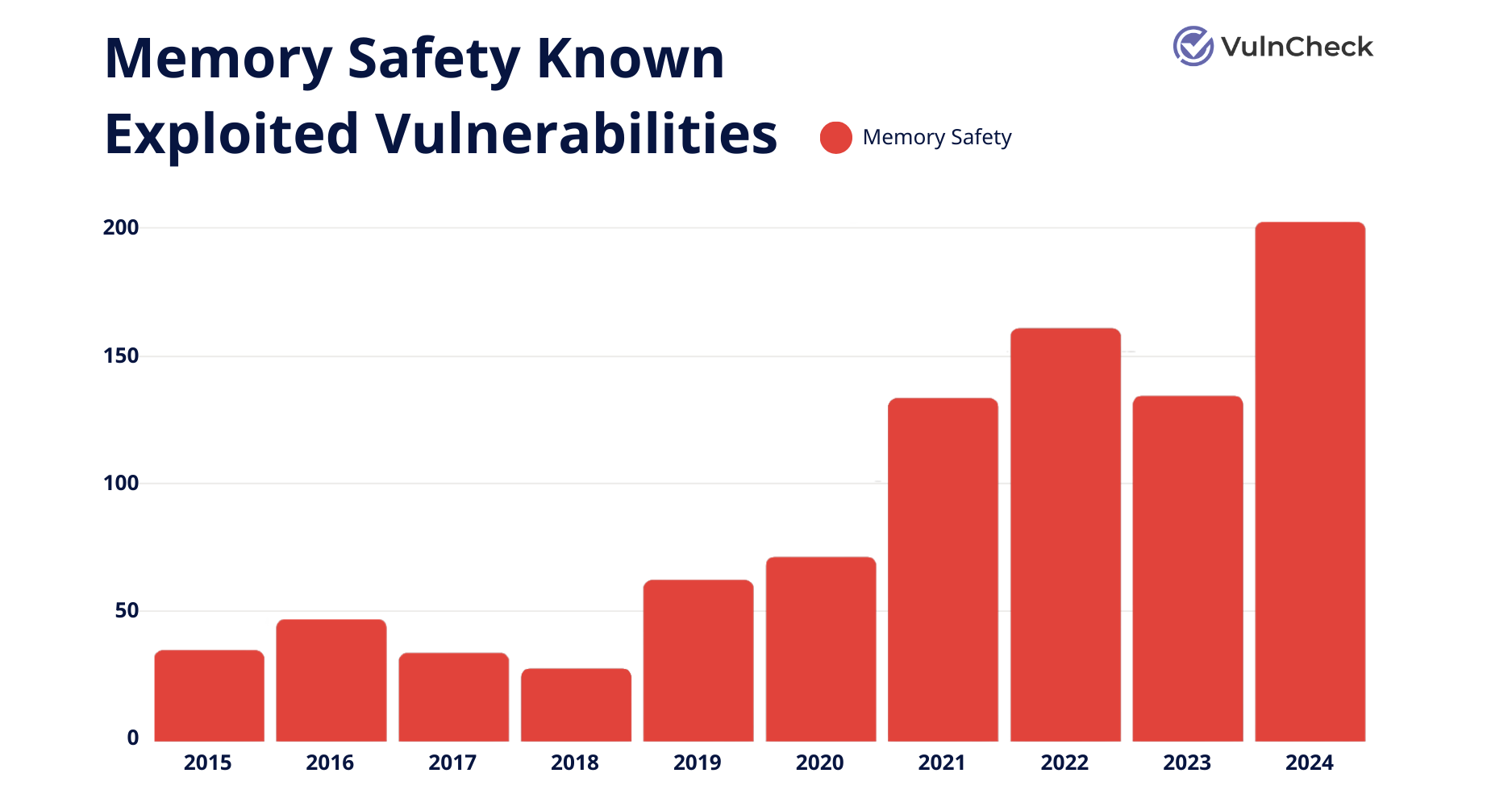

Data from VulnCheck shows a clear increase in memory safety KEVs over the years, reaching a high of around 200 in 2024.

“We’re seeing the number of known exploited vulnerabilities associated with memory safety grow,” Patrick said. “If you look at CISA’s KEV list, the concentration is quite high as far as volume.”

Data from VulnCheck, Memory Safety Known Exploited Vulnerabilities

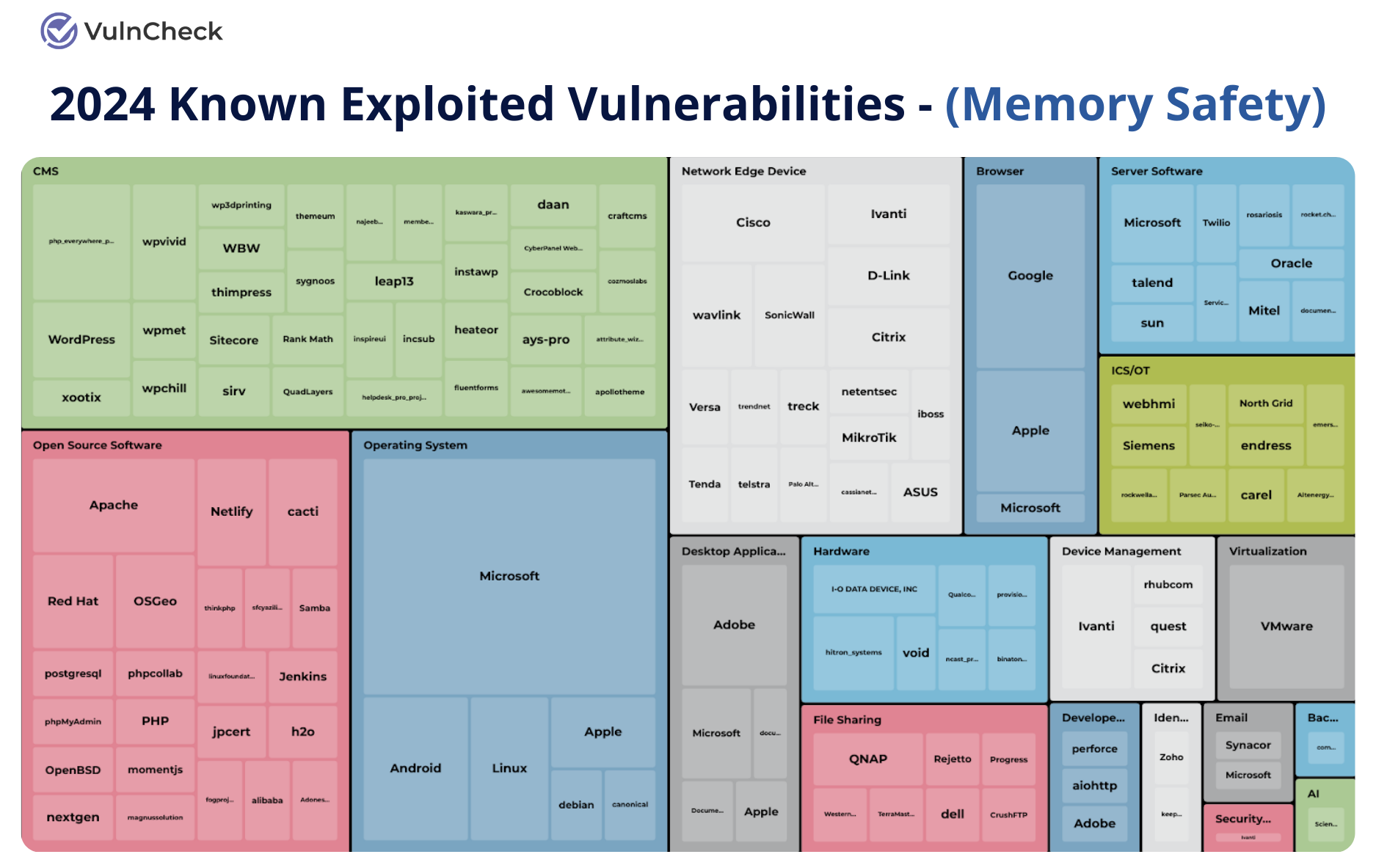

Memory safety KEVs are also found across industries, including network edge devices, hardware and embedded systems, industrial control systems (ICS/OT), device management platforms, operating systems, and open source software.

Data from VulnCheck, Memory Safety Known Exploited Vulnerabilities by Industry

Patrick emphasized the universal nature of the threat: “If you look at this list, there’s manufacturing impacted, medical devices, embedded systems, and critical infrastructure. Across the board from an industry perspective, you’re going to see these vulnerabilities everywhere.”

There Are a Lot of KEVs. So What?

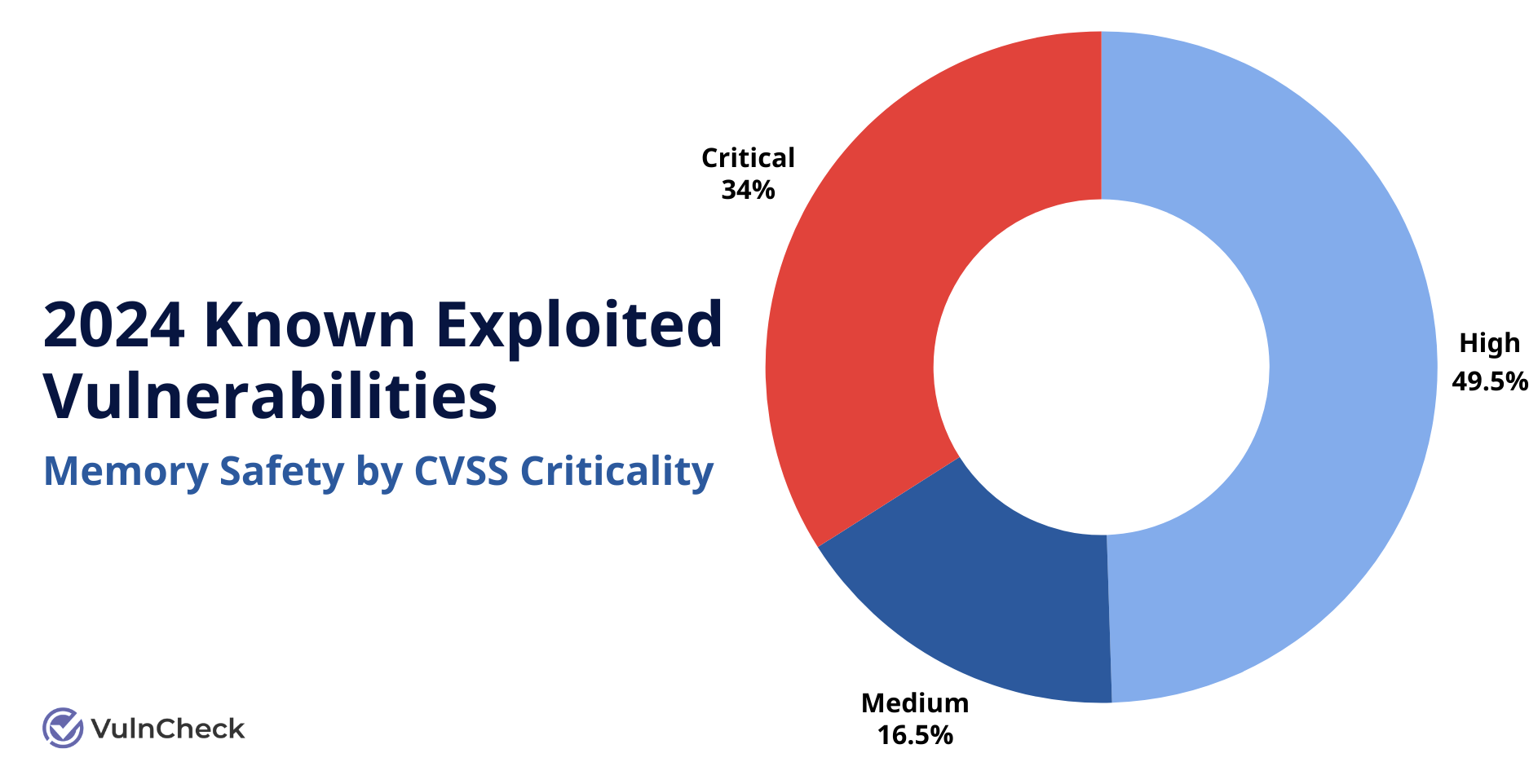

Not only are memory safety KEVs widespread, many are also classified as critical, with high CVSS scores. Six memory safety weakness types are now included in MITRE’s list of the top 25 most dangerous software weaknesses for 2024.

Data from VulnCheck, Memory Safety Known Exploited Vulnerabilities by CVSS Criticality

Memory safety vulnerabilities—like buffer overflows, use-after-free bugs, and out-of-bounds writes—have long plagued compiled code. “About 70% of the vulnerabilities in compiled code are memory safety related,” explained Shane Fry.

When attackers exploit these bugs, the results can be severe. Organizations may face:

- Arbitrary code execution

- Remote control of devices

- Denial of service

- Privilege escalation

- Data exposure and theft

Real-World Exploits: What the Data Tells Us

Ivanti Connect Secure (CVE-2025-0282)

This KEV, an out-of-bounds write (CWE-787), affected several Ivanti products and was linked to the Hafnium threat actor group. Patrick called out the speed at which this vulnerability moved from discovery to exploitation: “The vendor identifies there’s a vulnerability, there’s exploitation, they disclose the vulnerability, they get it in a CVE, and then CISA adds it—all in the same day.”

Typically, the disclosure process does not flow so quickly, but in this case it was a good thing as the exploit targeted a security product. Shane observed: “One of the very interesting philosophical questions that I think about often in cybersecurity spaces is how impactful a security vulnerability in a security product can be. Most people think that if it’s a security product, it’s secure. And off they go.”

Siemens UMC Vulnerability (CVE-2024-49775)

A heap-based buffer overflow flaw (CVE-2024-49775) in Siemens’ industrial control systems exposed critical infrastructure to risks of arbitrary code execution and disruption. The vulnerability exemplifies the widespread impact memory safety issues can have across product lines when they affect common components.

Global Recognition of the Growing Risk

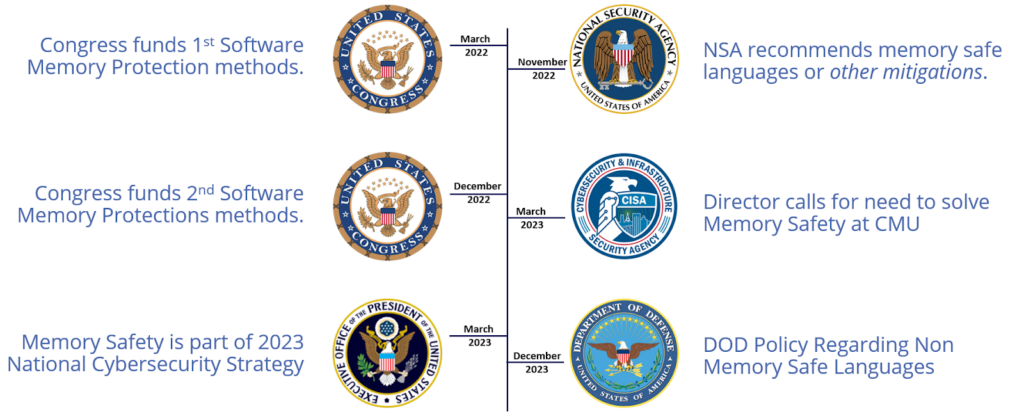

The accelerating growth of memory safety KEVs has not gone unnoticed by global security organizations. In 2022, the National Security Agency (NSA) issued guidance stating that memory safety vulnerabilities are “the most readily exploitable category of software flaws.”

Their guidance recommended two approaches:

- Rewriting code in memory-safe languages like Rust or Golang

- Deploying compiler hardening options and runtime protections

Similarly, CISA has emphasized memory safety in its Secure by Design best practices, advocating for organizations to develop memory safety roadmaps.

The European Union’s Cyber Resilience Act (CRA) takes a broader approach, emphasizing Software Bill of Materials (SBOM) to help organizations understand vulnerabilities in their supply chain. As Shane noted, “We saw a shift in industry when the CRA became law that, hey, now we have to actually do this. We can’t just talk about it.”

Practical Steps: What Organizations Can Do Now

Given the growing threat landscape, organizations need practical approaches to address memory safety vulnerabilities.

1. Prioritize Critical Code Rewrites

For most companies, a full rewrite in Rust or another memory-safe language isn’t realistic. Instead, start by identifying high-risk, externally facing components and consider targeted rewrites. Shane suggested starting with software or devices that most often interact with untrusted data.

2. Adopt Secure by Design Practices

Implementing secure development practices can help prevent introducing new vulnerabilities.

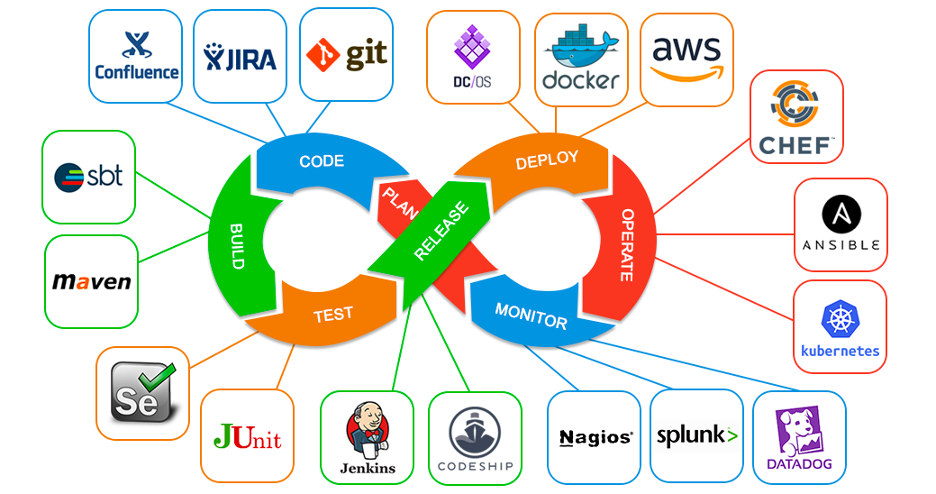

“There’s a lot of aspects of Secure by Design, like code scanning and secure software development life cycles and Software Bill of Materials, that can help you understand what you’re shipping in your supply chain,” Shane said.

3. Use Runtime Protections

Runtime hardening is an effective defense for legacy or third-party code that can’t be rewritten. Runtime protections prevent the exploitation of memory safety vulnerabilities by randomizing code layout so attackers can’t reliably target them.

RunSafe accomplishes this with our Protect solution. “Every time the device boots or every time your process is launched, we reorder all the code around in memory,” Shane said.

Runtime protection also buys time: organizations can avoid shipping emergency patches overnight because their software is already protected.

Memory Safety Is Everyone’s Problem

Memory safety vulnerabilities are becoming more common across industries. The risks are serious, especially when attackers can use these flaws to take control of systems or steal data.

Organizations need to take action now. By rewriting the highest-risk code, following secure development practices, and using runtime protections where needed, companies can reduce their exposure to memory safety threats.

Memory safety problems are widespread, but they can be managed. Secure by Design practices and runtime protections offer a path forward for more secure software and greater resilience.

The post Converting C++ to Rust: RunSafe’s Journey to Memory Safety appeared first on RunSafe Security.

At RunSafe Security, I had the opportunity to lead the transition of our 30,000-line C++ codebase to Rust. Two things influenced our decision:

- RunSafe wanted to address C++ memory safety concerns, and signed CISA’s Secure by Design Pledge, which includes transitioning to memory safe languages.

- We believe that security software, which RunSafe provides, should be held to a higher level of scrutiny and correctness guarantees. For us, that meant choosing the right programming language was as important as the code itself.

The transition wasn’t without its challenges. Converting a large, established C++ codebase to Rust required careful planning, creative problem-solving, and plenty of patience. In this blog, I’ll walk you through why we chose to make the switch, the obstacles we encountered along the way, and the results we achieved. I hope these insights provide value to anyone considering a similar journey.

What Motivated the Conversion of C++ to Rust?

RunSafe chose the Rust programming language because of several advantages it offers.

- No undefined behavior in safe Rust

- Stronger type system

- Memory safety guarantees

- No data races in safe Rust

The most important advantages from our perspective were the combination of memory safety and lack of garbage collection.

Rust’s advantages extended beyond security. We also saw opportunities for:

- Cleaner, more reliable code

- Stronger compile-time guarantees

- Better performance optimization without the overhead of garbage collection

Challenges in Converting to Rust

Migrating a C++ codebase to Rust is not without obstacles. For RunSafe, challenges stemmed from both technical limitations and philosophical differences in how C++ and Rust approach certain concepts.

Code and Library Compatibility Issues

- Templating vs. Generics: While C++ templates offer flexibility, they don’t cleanly map to Rust’s generic programming model, requiring manual adaptation.

- Standard Library Dependencies: Given RunSafe’s need to avoid libc dependencies for enhanced security, a Rust #![no_std] environment became necessary. This stripped-down configuration added constraints, particularly for syscalls and memory allocations.
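A small example illustrates the templates-versus-generics point. A C++ function template such as `template <typename T> T sum_all(...)` accepts any `T` and only fails at instantiation if `+` is missing. The Rust version must declare what it needs in its trait bounds up front. The function below is a hypothetical illustration. It uses only `core`, so the same code would also compile in a #![no_std] crate.

```rust
use core::ops::Add;

/// Sum a slice of any type that can be copied, added, and has a zero
/// (`Default`) value. The bounds spell out exactly what the body relies on.
pub fn sum_all<T>(items: &[T]) -> T
where
    T: Copy + Add<Output = T> + Default,
{
    items.iter().copied().fold(T::default(), |acc, x| acc + x)
}
```

Calling `sum_all` with a type that lacks `Add` is rejected at the call site with a message naming the missing bound, rather than deep inside the template body.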

Dealing with Mutable State

C++ often permits unrestricted mutability, allowing developers to directly manipulate global state. Rust rejects this assumption: its borrow checker allows either one mutable reference or any number of shared references to a value at a time, and any access to a mutable static requires an unsafe block. Overcoming this required rewriting significant portions of the codebase to follow Rust’s stricter ownership rules.
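A common pattern in this kind of migration replaces a mutable C++ global, such as a `static uint64_t` counter incremented from anywhere. The replacement is either an atomic static or, often better, state passed explicitly. This is a hypothetical sketch rather than RunSafe’s actual code, and the names `RELOCATIONS`, `record_relocation`, and `LoaderState` are invented for illustration.

```rust
use std::sync::atomic::{AtomicU64, Ordering};

// Option 1: a global that is safe to touch from anywhere, because all
// access goes through atomic operations instead of a `static mut`.
static RELOCATIONS: AtomicU64 = AtomicU64::new(0);

/// Increment the global counter and return the new value.
pub fn record_relocation() -> u64 {
    RELOCATIONS.fetch_add(1, Ordering::Relaxed) + 1
}

// Option 2: make the state an explicit value that callers must pass in.
#[derive(Default)]
pub struct LoaderState {
    pub relocations: u64,
}

impl LoaderState {
    /// Requires `&mut self`, so the borrow checker knows exactly who mutates it.
    pub fn record(&mut self) {
        self.relocations += 1;
    }
}
```

Passing `&mut LoaderState` makes every function that changes state visible in its signature. That is the kind of restructuring the borrow checker pushes a port toward.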

Platform Support

C++’s compatibility with a wide range of platforms, including esoteric ones, is unmatched. Rust’s smaller ecosystem and more limited targets presented a hurdle when considering some low-level platforms that RunSafe’s software relied on.

Steps to Transition Your Code from C++ to Rust

If you’re considering converting a C++ codebase to Rust, I recommend taking a structured approach to cover all your bases. Here’s how RunSafe managed the transition:

Step 1. Evaluate and Prepare

- Review and improve test coverage to ensure consistent behavior after migration.

- Document core functionalities and interfaces clearly to plan the transition effectively.

Step 2. Plan the Conversion Approach

- Choose between manual or automated conversion. Tools like c2rust offer automated translation, though c2rust targets C rather than C++, and its output typically requires significant refinement to become idiomatic Rust.

- Implement an incremental conversion strategy where sections are rewritten piecemeal, avoiding wholesale changes that could introduce larger risks.

Step 3. Build a Skeleton in Rust

- Start by creating structural outlines (e.g., functions, modules) in Rust, leaving placeholders like unimplemented!() where details are pending.

- This early step helps identify ownership or mutability conflicts that need resolution.
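A skeleton at this stage might look like the following hypothetical Rust sketch, where the `ElfImage` and `SectionHeader` names are assumptions rather than RunSafe’s types. The signatures compile even though some bodies are placeholders. Choosing `&self` versus `&mut self` up front is where ownership and mutability conflicts first show up.

```rust
/// Hypothetical parsed section header; fields are placeholders for the port.
pub struct SectionHeader {
    pub name: String,
    pub offset: u64,
    pub size: u64,
}

/// Hypothetical in-memory binary image.
pub struct ElfImage {
    bytes: Vec<u8>,
}

impl ElfImage {
    /// Already ported: takes ownership of the raw bytes.
    pub fn from_bytes(bytes: Vec<u8>) -> Self {
        Self { bytes }
    }

    /// Already ported: size of the image in bytes.
    pub fn len(&self) -> usize {
        self.bytes.len()
    }

    /// Not yet ported: read-only access, so `&self` is enough.
    pub fn sections(&self) -> Vec<SectionHeader> {
        unimplemented!("section parsing not ported yet")
    }

    /// Not yet ported: rewrites the image, so it needs `&mut self`.
    pub fn randomize(&mut self, _seed: u64) {
        unimplemented!("randomization not ported yet")
    }
}
```

Calling an unfinished method panics with the placeholder message instead of silently misbehaving, which makes gaps easy to spot in tests. The `todo!()` macro works the same way and signals intent to finish the code later.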

Step 4. Refactor and Optimize

- Use Rust-specific tools like clippy to make your code cleaner and more idiomatic.

- Replace sentinel values with Rust constructs like Option or Result for better error handling.
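Two small hypothetical helpers illustrate replacing sentinel values. C++ code might return -1 for “symbol not found” or 0 for “unparseable address.” The Rust versions encode the failure in the return type with Option and Result, so callers cannot forget to check. Both function names are invented for this example.

```rust
use std::num::ParseIntError;

/// Index of `name` in `symbols`, or None when absent (instead of a -1 sentinel).
pub fn find_symbol(symbols: &[&str], name: &str) -> Option<usize> {
    symbols.iter().position(|s| *s == name)
}

/// Parse a hex address such as "0x1000". Failure is a typed error rather
/// than a sentinel like 0, which could also be a legitimate address.
pub fn parse_address(s: &str) -> Result<u64, ParseIntError> {
    let digits = s.strip_prefix("0x").unwrap_or(s);
    u64::from_str_radix(digits, 16)
}
```

A caller then writes `match find_symbol(...)` or uses `?` on `parse_address`. The compiler refuses code that treats a missing symbol as index 0.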

Outcomes: What RunSafe Found

Overall, we found the transition to Rust to be successful. Key outcomes included:

- Timing: It took one engineer about 3 months to convert the 30,000 lines of code, plus another 3 months to iron out integration bugs on esoteric platforms.

- Bugs: The conversion surfaced many bugs. One was an incorrect argument type in a syscall declaration ported from C++, which caused visible stack corruption in optimized Rust builds. Others were C++ case statements that “fell through” when they should have produced a value, as a Rust match expression does.

- Size: We saw a 35% reduction in file sizes overall.

- Performance: Initially, the Rust version was about 2x slower than the C++ implementation, but profiling pointed us to hotspots we would otherwise have missed. After addressing them, Rust performance was roughly on par with C++.

- Safety: The port required some unsafe code, all of which now sits behind safe abstractions.

- Good Surprises: We could refactor with confidence throughout the process. Moving, editing, and restructuring code is substantially easier to verify when everything is more strictly checked, though it may require more effort upfront.

Should You Rewrite Your Code in Rust?

The answer depends on how critical memory safety is to your project and how often you find yourself chasing bugs caused by undefined or unreliable behavior in C++. The key question is: how much of your code actually needs rewriting?

Here are some critical factors to consider:

- Project Scope: How much of your codebase is critical enough to warrant the transition? Small, security-critical sections may provide the greatest ROI.

- Development Resources: Do your developers have experience with Rust, or will training be needed?

- Compatibility Needs: If your software targets esoteric platforms supported by C++, this may limit Rust’s viability.

- Cost and Timeline: Migration requires upfront investment, but the long-term benefits of reduced debugging and increased security often justify the effort.

Rewriting an entire million-line codebase isn’t realistic—it’s neither cost-effective nor time-efficient. However, you might identify smaller, high-risk sections of your codebase that are prone to memory safety issues. Even rewriting small portions of critical code can be enough to reduce your bug surface area.

The post Understanding Memory Safety Vulnerabilities: Top Memory Bugs and How to Address Them appeared first on RunSafe Security.

Memory safety vulnerabilities remain one of the most persistent and exploitable weaknesses across software. From enabling devastating cyberattacks to compromising critical systems, these vulnerabilities present a constant challenge for developers and security professionals alike.

Both the National Security Agency (NSA) and the Cybersecurity and Infrastructure Security Agency (CISA) have emphasized the importance of addressing memory safety issues to defend critical infrastructure and stop malicious actors. Their guidance highlights the risks associated with traditional memory-unsafe languages, such as C and C++, which are prone to issues like buffer overflows and use-after-free errors.

In February 2025, CISA drilled down even deeper with their guidance, issuing an alert on “Eliminating Buffer Overflow Vulnerabilities.”

Why do memory corruption vulnerabilities still exist, how do they manifest in practice, and what strategies can organizations implement to mitigate their risks effectively? Let’s take a look.

What Are Memory Safety Vulnerabilities?

Memory safety vulnerabilities occur when a program performs unintended or erroneous operations in memory. These issues can lead to dangerous consequences like data corruption, unexpected application behavior, or even full system compromise. The Common Weakness Enumeration (CWE), a community-developed catalog of software and hardware weakness types maintained by MITRE, ranks these among the most severe weaknesses in software today.

Memory safety issues are inherently tied to programming languages and runtime environments. Languages like C and C++ offer control and performance but lack built-in memory safety mechanisms, making them more prone to such vulnerabilities.

Attackers leverage memory corruption vulnerabilities as access points to infiltrate systems, exploit weaknesses, and execute malicious actions. Addressing memory vulnerabilities is essential for safety and security, especially for industries like critical infrastructure, medical devices, aviation, and defense.

Types of Memory Safety Vulnerabilities

There are many different types of memory safety vulnerabilities, but developers and security professionals should understand a few in particular. The six explained below are listed on the 2024 Common Weakness Enumeration (CWE™) Top 25 Most Dangerous Software Weaknesses list (CWE™ Top 25), and they are familiar faces from previous years’ lists. The CWE Top 25 lists weaknesses that are often easy to exploit and that can have significant consequences.

1. Buffer Overflows (CWE-119)

A buffer overflow occurs when a program writes more data to a buffer than it can safely hold. This overflow can corrupt adjacent memory, potentially leading to crashes, data corruption, or even allowing attackers to execute arbitrary code.
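
To make the mechanism concrete, here is a minimal C sketch, not taken from any real product, of a fixed-size buffer being filled from untrusted input. The names `copy_name` and `NAME_CAP` are hypothetical. The commented-out `strcpy` call shows the classic overflow; the function itself checks the length first and rejects input that would not fit.

```c
#include <stddef.h>
#include <string.h>

#define NAME_CAP 16 /* hypothetical fixed field size, including the '\0' */

/*
 * UNSAFE pattern, shown only for contrast:
 *     char name[NAME_CAP];
 *     strcpy(name, input);  // no length check: an input of 16 or more
 *                           // characters writes past the end of name[]
 */

/* Hypothetical bounds-checked copy: returns 0 on success, -1 if input does not fit. */
int copy_name(char dst[NAME_CAP], const char *input) {
    size_t len = strlen(input);
    if (len >= NAME_CAP) {  /* must leave room for the terminating '\0' */
        return -1;          /* reject rather than overflow dst */
    }
    memcpy(dst, input, len + 1);  /* len + 1 also copies the '\0' */
    return 0;
}
```

Rejecting oversized input is one design choice; truncating to an explicit length is another. Either way, the length check happens before any byte is written.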

Example of a Buffer Overflow

A notable example of a buffer overflow vulnerability is CVE-2023-4966, also known as “CitrixBleed,” which affected Citrix NetScaler ADC and Gateway products in 2023. This critical flaw, a buffer over-read in the OpenID Connect Discovery endpoint, let attackers leak memory contents and bypass authentication, including multi-factor authentication.

The vulnerability enabled unauthorized access to sensitive information, including session tokens, which could be used to hijack authenticated user sessions. First exploited as a zero-day in August 2023, CitrixBleed was soon abused by various threat actors, including ransomware groups like LockBit, leading to high-profile attacks such as the Boeing ransomware incident.

CitrixBleed highlights the ongoing significance of buffer overflow vulnerabilities in critical infrastructure, and the importance of prompt patching and session invalidation to contain potential compromises.

2. Heap-Based Buffer Overflow (CWE-122)

A heap-based buffer overflow occurs when a program writes more data to a buffer located in the heap memory than it can safely hold. This can lead to memory corruption, crashes, privilege escalation, and even arbitrary code execution by attackers manipulating the heap memory structure.
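
The same idea applies on the heap, where an overflow corrupts neighboring allocations or allocator metadata. The sketch below assumes a hypothetical `HeapBuf` type that records its own capacity, so every append can be checked against the space actually allocated.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical heap buffer that tracks how much space it really has. */
typedef struct {
    unsigned char *data;
    size_t cap; /* bytes allocated */
    size_t len; /* bytes in use; invariant: len <= cap */
} HeapBuf;

int heapbuf_init(HeapBuf *b, size_t cap) {
    b->data = malloc(cap);
    b->len = 0;
    if (b->data == NULL) {
        b->cap = 0;
        return -1;
    }
    b->cap = cap;
    return 0;
}

/* Append n bytes only if they fit in the remaining heap space. */
int heapbuf_append(HeapBuf *b, const void *src, size_t n) {
    if (n > b->cap - b->len) { /* cap - len cannot underflow since len <= cap */
        return -1;             /* writing on would corrupt adjacent heap memory */
    }
    memcpy(b->data + b->len, src, n);
    b->len += n;
    return 0;
}

void heapbuf_free(HeapBuf *b) {
    free(b->data);
    b->data = NULL;
    b->cap = 0;
    b->len = 0;
}
```

Writing the check as `n > cap - len` rather than `len + n > cap` keeps the check itself from overflowing, which ties directly to the integer overflow weakness (CWE-190) in this list.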

Example of a Heap-Based Buffer Overflow

An example of a recent critical heap-based buffer overflow is CVE-2024-38812, a vulnerability in VMware vCenter Server, discovered during the 2024 Matrix Cup hacking competition in China. With a CVSS score of 9.8, this flaw allows attackers with network access to craft malicious packets exploiting the DCERPC protocol implementation, potentially leading to remote code execution. This heap overflow vulnerability was initially patched but required a subsequent update to fully address the issue.

3. Use-After-Free Errors (CWE-416)

Use-after-free errors arise when a program continues to use a memory pointer after the memory it points to has been deallocated. This can lead to system crashes, data corruption, or exploitation through arbitrary code execution.
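
A common partial defense against use-after-free in C is to clear a pointer as soon as the memory it refers to is released, so later accesses through that pointer can be detected. The sketch below uses a hypothetical `Session` type. It only protects the one pointer that is cleared: any other copies still dangle, which is why tools such as AddressSanitizer and memory-safe languages remain important.

```c
#include <stdlib.h>

/* Hypothetical object type used for illustration. */
typedef struct {
    int id;
} Session;

/* Free the session and clear the caller's pointer so later uses see NULL. */
void session_release(Session **s) {
    free(*s); /* free(NULL) is a no-op, so repeated releases are harmless */
    *s = NULL;
}

/* Returns the id, or -1 if the session has already been released. */
int session_id(const Session *s) {
    return s != NULL ? s->id : -1;
}
```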

Example of a Use-After-Free Error

CVE-2021-44710 is a use-after-free (UAF) vulnerability discovered in Adobe Acrobat Reader DC, affecting multiple versions. It has a CVSS base score of 7.8, placing it in the High severity range. If successfully exploited, an attacker could potentially execute arbitrary code on the target system, leading to various severe consequences including application denial-of-service, security feature bypass, and privilege escalation.

4. Out-of-Bounds Write (CWE-787)

An out-of-bounds write occurs when a program writes data outside the allocated memory buffer. This can corrupt data, cause crashes, or create vulnerabilities that attackers can exploit.
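
An out-of-bounds write often comes down to a single missing or off-by-one index check. The hypothetical `set_register` function below writes into a fixed table using an index that might arrive from the network. An unsigned `size_t` index rules out negative values, and the comparison uses `>=` because an index equal to the table size already points one element past the end.

```c
#include <stddef.h>
#include <stdint.h>

#define NUM_REGS 8 /* hypothetical register table size */

/* Hypothetical helper: write value at index only if index is inside the table. */
int set_register(uint16_t regs[NUM_REGS], size_t index, uint16_t value) {
    if (index >= NUM_REGS) { /* index == NUM_REGS would already be out of bounds */
        return -1;
    }
    regs[index] = value;
    return 0;
}
```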

Example of an Out-of-Bounds Write

CVE-2024-7695 is a critical out-of-bounds write vulnerability affecting multiple Moxa PT switch series. The flaw stems from insufficient input validation in the Moxa Service and Moxa Service (Encrypted) components, allowing attackers to write data beyond the intended memory buffer bounds.

With a CVSS 3.1 score of 7.5 (High), this vulnerability can be exploited remotely without authentication. Successful exploitation could lead to a denial-of-service condition, potentially causing significant downtime for critical systems by crashing or rendering the switch unresponsive.

5. Improper Input Validation (CWE-20)

Improper input validation occurs when a system fails to adequately verify or sanitize inputs before they are processed. This flaw can lead to unintended behaviors, including command injection, buffer overflows, or unauthorized access. Attackers exploit this weakness by crafting malicious inputs, often bypassing security controls or causing system failures. Input validation issues are particularly common in web applications and embedded systems where external data is heavily relied upon.
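
Input validation means deciding what is acceptable before any other code acts on the data. As an illustration (the helper name and the range are assumptions, not taken from any product mentioned here), this C function accepts a decimal field only if the entire string parses as a number within an allowed range, rejecting empty input, trailing characters, and out-of-range values.

```c
#include <errno.h>
#include <stdlib.h>

/* Hypothetical helper: parse text as a decimal long in [min, max].
 * Returns 0 and stores the value on success, -1 on any invalid input. */
int parse_bounded(const char *text, long min, long max, long *out) {
    char *end;
    errno = 0;
    long v = strtol(text, &end, 10);
    if (end == text || *end != '\0') {
        return -1; /* empty input or trailing junk such as "12abc" */
    }
    if (errno == ERANGE) {
        return -1; /* does not fit in a long at all */
    }
    if (v < min || v > max) {
        return -1; /* parsed, but outside the allowed range */
    }
    *out = v;
    return 0;
}
```

For a network port field, a caller might use `parse_bounded(field, 1, 65535, &port)`. Note that `strtol` accepts leading whitespace and a sign; a stricter parser could reject those as well.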

Example of Improper Input Validation

CVE-2024-5913 is a medium-severity vulnerability affecting multiple versions of Palo Alto Networks PAN-OS software. This improper input validation flaw allows an attacker with physical access to the device’s file system to elevate privileges.

6. Integer Overflow or Wraparound (CWE-190)

Integer overflow or wraparound occurs when an arithmetic operation results in a value that exceeds the maximum (or minimum) limit of the data type, causing the value to “wrap around.” This vulnerability can lead to unpredictable behaviors, such as buffer overflows, memory corruption, or security bypasses. Attackers exploit this weakness by manipulating inputs to trigger overflows, often resulting in system crashes or unauthorized actions. This issue is common in low-level programming languages like C and C++, where integer operations are not inherently checked.
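
Many integer overflows that end in heap-based buffer overflows follow the same pattern: a size computed as `count * size` wraps around to a small number, the program allocates that small buffer, and later writes assume the large size. A minimal sketch of the usual guard, using a hypothetical `alloc_array` helper, checks the multiplication before calling `malloc`.

```c
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical helper: allocate count elements of size bytes each,
 * refusing when count * size would wrap around SIZE_MAX. */
void *alloc_array(size_t count, size_t size) {
    if (size != 0 && count > SIZE_MAX / size) {
        return NULL; /* the product would overflow and yield a too-small buffer */
    }
    return malloc(count * size);
}
```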

Example of an Integer Overflow

CVE-2022-2329 is a critical vulnerability (CVSS 3.1 Base Score: 9.8) affecting Schneider Electric’s Interactive Graphical SCADA System (IGSS) Data Server versions prior to 15.0.0.22074. This Integer Overflow or Wraparound vulnerability can cause a heap-based buffer overflow, potentially leading to denial of service and remote code execution when an attacker sends multiple specially crafted messages. Schneider Electric released a patch to address this vulnerability.

Memory CVEs Affecting Critical Infrastructure

Nation-state actors such as Volt Typhoon have recently demonstrated the potential real-world impact of memory safety vulnerabilities in the software used to run critical infrastructure.

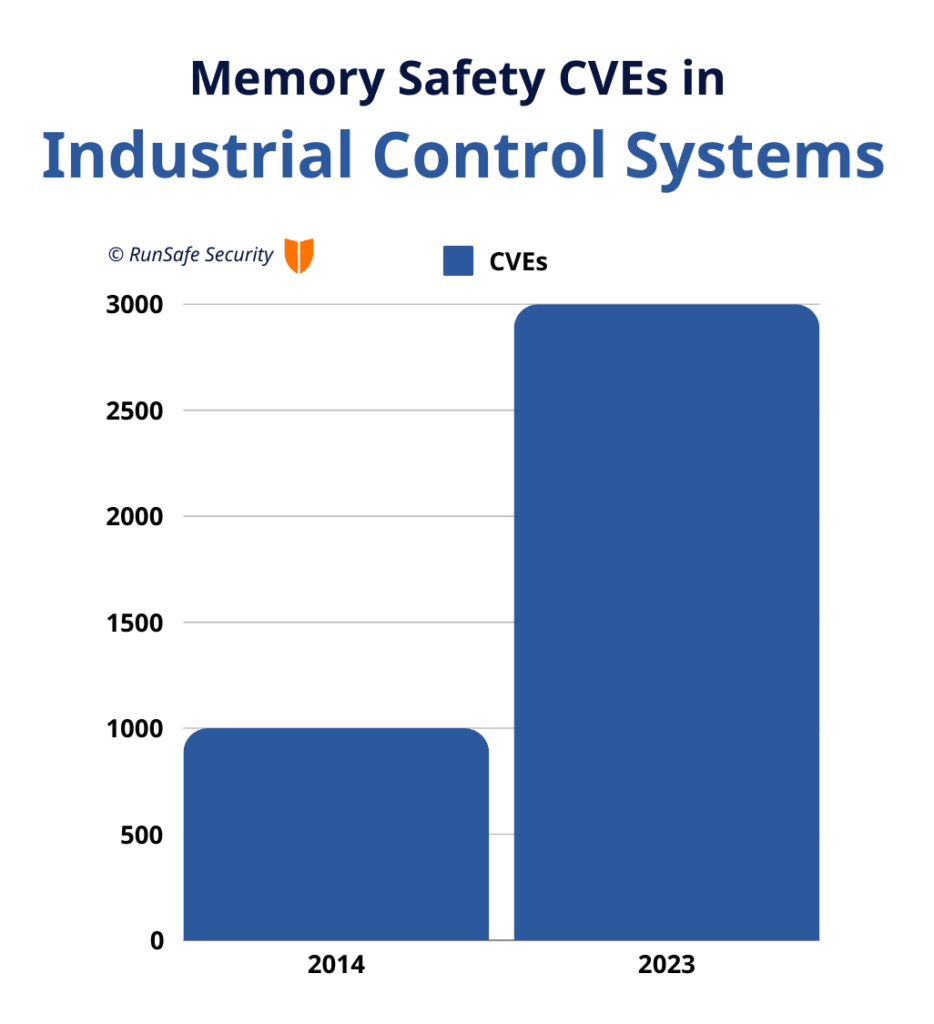

Memory safety vulnerabilities within industrial control systems (ICS) have also trended steadily upward over the past decade, from fewer than 1,000 CVEs in 2014 to nearly 3,000 in 2023 alone.

Here are a few examples of memory safety vulnerabilities directly impacting critical infrastructure.

Ivanti Connect Secure Flaw

A zero-day vulnerability (CVE-2025-0282) in Ivanti’s Connect Secure appliances allowed remote code execution, enabling malware deployment on affected devices.

Siemens UMC Vulnerability

A heap-based buffer overflow (CVE-2024-49775) in Siemens’ User Management Component (UMC), used in its industrial control systems, exposed critical infrastructure to risks of arbitrary code execution and disruption.

Mercedes-Benz Infotainment System

Over a dozen vulnerabilities in the Mercedes-Benz MBUX system could allow attackers with physical access to disable anti-theft measures, escalate privileges, or compromise data.

Rockwell Automation Vulnerability

CVE-2024-12372 is a heap-based buffer overflow in Rockwell Automation’s PowerMonitor 1000 Remote product that can cause denial of service and possibly remote code execution, compromising system integrity.

Why Addressing Memory Vulnerabilities Is Critical

Memory vulnerabilities represent a significant share of software-based attacks. According to a study by CISA, two-thirds of vulnerabilities in compiled code stem from memory safety issues. These vulnerabilities can impact industries that depend heavily on legacy systems written in C and C++—industries like aerospace, manufacturing, and energy infrastructure.

Key Risks Posed by Memory Vulnerabilities:

- Remote Control Exploits: Attackers can hijack systems, gaining full control over operations.

- Data Breaches: Sensitive information can be corrupted, stolen, or erased.

- Operational Downtime: System instability leads to interruptions in critical services.

- Compliance Failures: Organizations risk fines for failing to meet cybersecurity regulations.

Best Practices for Mitigating Memory Vulnerabilities

Organizations can address memory safety vulnerabilities by taking proactive measures like:

- Adopting secure coding practices, implementing protections such as bounds-checking, avoiding unsafe functions, and rigorously managing dynamic memory usage.

- Using static and dynamic analysis tools and fuzz testing to detect issues during development.

- Using build-time Software Bill of Materials (SBOMs) to identify vulnerabilities and get transparency into memory safety issues in software.

- Transitioning select components to memory-safe languages using incremental migration plans where feasible.

- Employing runtime protections, such as runtime application self-protection (RASP), to prevent memory exploitation at runtime.

- Consistently applying security patches to all software and firmware components to address known CVEs quickly.

Bringing About a Memory Safe Future

RunSafe Security is committed to protecting critical infrastructure, and a major key to doing so is eliminating memory-based vulnerabilities in software. Following CISA’s guidance and Secure by Design is an important first step. However, CISA’s guidance to rewrite code in memory-safe languages is impractical for companies that produce dozens, hundreds, or even thousands of embedded software products, often with 10- to 30-year lifespans.

This is where RunSafe steps in, offering a far more cost-effective and immediate way to prevent the exploitation of memory-based vulnerabilities. RunSafe Protect mitigates cyber exploits through Load-time Function Randomization (LFR), relocating software functions in memory every time the software is run. Each run gets a unique memory layout, which prevents attackers from exploiting memory-based vulnerabilities. With LFR, RunSafe prevents exploitation of 86 memory safety CWEs.
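
The general idea behind load-time function randomization can be illustrated with a toy model. The C sketch below is not RunSafe’s implementation: it assumes a hypothetical `Func` record holding each function’s size, shuffles the function order with a Fisher-Yates shuffle, and packs the functions back to back to produce per-run offsets. A real loader must also rewrite calls, jumps, and relocations so the moved code still works, and must draw randomness from a strong entropy source rather than the small xorshift generator used here to keep the example self-contained.

```c
#include <stddef.h>
#include <stdint.h>

#define MAX_FUNCS 64 /* hypothetical upper bound for this sketch */

/* Hypothetical record for one function in a binary's code section. */
typedef struct {
    const char *name;
    size_t size;   /* bytes of machine code */
    size_t offset; /* offset assigned by randomize_layout() */
} Func;

/* xorshift32: keeps the sketch self-contained. NOT suitable for security. */
static uint32_t next_rand(uint32_t *state) {
    uint32_t x = *state;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    *state = x;
    return x;
}

/* Shuffle the function order (Fisher-Yates) and pack functions back to back,
 * so a different seed per run yields a different layout.
 * Returns 0 on success, -1 if n exceeds MAX_FUNCS. */
int randomize_layout(Func *funcs, size_t n, uint32_t seed) {
    size_t order[MAX_FUNCS];
    uint32_t state = seed != 0 ? seed : 1; /* xorshift state must be nonzero */
    if (n > MAX_FUNCS) {
        return -1;
    }
    for (size_t i = 0; i < n; i++) {
        order[i] = i;
    }
    for (size_t i = n; i > 1; i--) {
        size_t j = next_rand(&state) % i; /* j in [0, i-1]; slight modulo bias */
        size_t tmp = order[i - 1];
        order[i - 1] = order[j];
        order[j] = tmp;
    }
    size_t cursor = 0;
    for (size_t i = 0; i < n; i++) {
        funcs[order[i]].offset = cursor;
        cursor += funcs[order[i]].size;
    }
    return 0;
}
```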

Rather than waiting years to rewrite code, RunSafe protects embedded systems today, allowing software to defend itself against both known and unknown vulnerabilities.

Interested in understanding your exposure to memory-based CVEs and zero days? You can request a free RunSafe Risk Reduction Analysis here.


CISA’s 2026 Memory Safety Deadline: What OT Leaders Need to Know Now

It’s for this reason, among other national security, economic, and public health concerns, that the Cybersecurity and Infrastructure Security Agency (CISA) has made memory safety a key focus of its Secure by Design initiatives.

Now, CISA is urging software manufacturers to publish a memory safety roadmap by January 1, 2026, outlining how they will eliminate memory safety vulnerabilities in code, either by using memory safe languages or implementing hardware capabilities that prevent memory safety vulnerabilities.

Though manufacturers are on the hook for the security of their products, the responsibility doesn’t fall solely on the shoulders of the manufacturers. Buyers of software in the OT sector also have an equally important role to play in addressing memory safety to build the resilience of their mission-critical OT systems against attack.

“The roadmap to memory safety is a great starting point for asset owners to talk to their suppliers, saying this is a big concern of mine, especially for my OT software,” said Joseph M. Saunders, Founder and CEO of RunSafe Security. “Then, what we’re looking for from product manufacturers is that they have a mature process to assess how to achieve memory safety.”

Why Memory Safety Should Be on Your Radar

Why all the fuss about memory safety, and why now? Memory safety vulnerabilities consistently rank among the most dangerous software weaknesses, and they are alarmingly common. Within industrial control systems, memory safety vulnerabilities have been steadily rising, growing from fewer than 1,000 CVEs in 2014 to nearly 3,000 in 2023 alone.

In one example, programmable logic controllers were found vulnerable to memory corruption flaws that could enable remote code execution. In the OT world, where systems control critical industrial processes, such vulnerabilities aren’t just security risks — they’re potential catastrophes waiting to happen.

Building a Memory Safety Strategy: Collaboration Between OT Software Manufacturers and Buyers Is Needed

CISA has set a clear deadline: January 1, 2026. With this date in mind, OT software manufacturers and buyers can begin to have important conversations about addressing memory safety, both for existing products written in memory-unsafe languages and for new products to be released down the line.

What should be on the agenda for discussion when building and evaluating a memory safety roadmap? Here are four key areas to look at.

1. Vulnerability Assessments

Start with a comprehensive Software Bill of Materials (SBOM) to identify and prioritize memory-based vulnerabilities in OT software. Think of it as a detailed inventory that helps you:

- Identify existing vulnerabilities

- Map your software supply chain

- Pinpoint products most at risk from memory-based vulnerabilities
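
At its simplest, using an SBOM to find exposure means matching the components it lists against known advisories. The sketch below reduces both to hypothetical name/version pairs and counts how many components match an advisory. Real SBOM formats such as SPDX and CycloneDX carry far more detail, and real matching compares version ranges rather than exact strings.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical, heavily simplified SBOM entry. */
typedef struct {
    const char *name;
    const char *version;
} Component;

/* Hypothetical advisory: an exact component version with a known memory-safety issue. */
typedef struct {
    const char *name;
    const char *version;
    const char *id; /* placeholder advisory identifier */
} Advisory;

/* Count SBOM components whose name and version exactly match some advisory. */
size_t count_exposed(const Component *sbom, size_t n_sbom,
                     const Advisory *adv, size_t n_adv) {
    size_t hits = 0;
    for (size_t i = 0; i < n_sbom; i++) {
        for (size_t j = 0; j < n_adv; j++) {
            if (strcmp(sbom[i].name, adv[j].name) == 0 &&
                strcmp(sbom[i].version, adv[j].version) == 0) {
                hits++;
                break; /* count each component at most once */
            }
        }
    }
    return hits;
}
```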

2. Smart Remediation Planning

Once vulnerabilities are identified, manufacturers should take next steps to eliminate them. OT software buyers can discuss remediation options with manufacturers, such as:

- Prioritizing systems with high exposure and potential impact

- Evaluating options for rewriting legacy code in memory-safe languages, like Rust

- Considering proactive solutions such as Load-time Function Randomization (LFR) for existing systems when code rewrites are not practical

3. Future-Proofing Your Products

Software buyers should discuss with their suppliers how they are incorporating memory safety into their product lifecycle planning.

Look ahead by:

- Integrating memory safety into your product roadmap

- Taking advantage of major architectural changes to implement memory-safe languages

- Deploying software memory protection for existing code

4. Building Strong Partnerships

A memory safety roadmap is a great opportunity for software manufacturers and buyers to open up conversations about memory safety and collaborate to find a path forward. When considering working with a supplier, evaluate their willingness to:

- Establish regular communication channels

- Transparently track progress

- Demonstrate a shared commitment to security goals

Moving Forward with CISA’s Memory Safety Guidance

By working together, software buyers and manufacturers can not only meet CISA’s memory safety mandate but also build more resilient OT systems.

“All asset owners should do a study with their suppliers to understand the extent to which they are exposed to memory safety vulnerabilities,” Saunders said.

From there, software manufacturers can build a roadmap to tackle the memory safety challenge once and for all.

Learn more about how RunSafe Security protects critical infrastructure and OT systems from memory-based vulnerabilities.


Don’t Let Volt Typhoon Win: Preventing Attacks During a Future Conflict

For a deeper discussion on Volt Typhoon’s tactics and the national security stakes, listen to our RunSafe Security Podcast episode featuring experts unpacking the threat to critical infrastructure.

Unlike ransomware operators, Volt Typhoon is focused on the long term rather than the short term. Ransomware culprits want quick transactions, with only as much disruption to their targets as is required to extract payments. Nation-state aligned cyber offense, however, has a two-fold purpose: (1) blunt military capabilities and (2) create disruption and overall panic in society.

These actors take a long-term perspective, preparing in advance to be able to achieve both goals when a conflict arises. In the case of the PRC-backed Volt Typhoon, that conflict could be a PRC attack on Taiwan. If Volt Typhoon is effective in meeting its goals, it could shape the response of the United States, potentially deterring the US from engaging in the conflict. Cyber operations have become a means to achieve geopolitical goals.

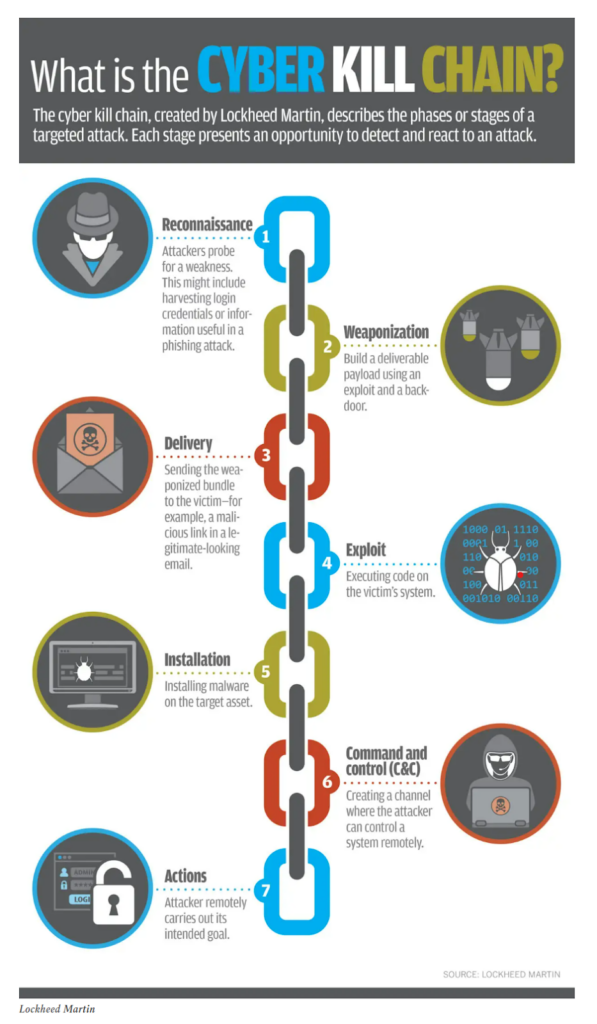

What Is the Cyber Kill Chain? How Nation-State Actors Prepare for Attack

The kill chain construct originates in kinetic warfare. For example, in 1990, General John Jumper coined a kill chain for airborne targets: F2T2EA (find, fix, track, target, engage, assess). A kinetic kill chain like this is expected to be completed as rapidly as possible. Cyber kill chains, on the other hand, can unfold over a much longer period of time.

In the cyber kill chain coined by Lockheed Martin, Reconnaissance is the first phase before Weaponization. The Reconnaissance phase can be prolonged; it is also referred to as the preparation of the battlefield stage. The attacker is in the system, searching for weaknesses, and in some cases living off the land.

Volt Typhoon is in the Reconnaissance stage within critical infrastructure, preparing to move to the next stages of the kill chain if geopolitical circumstances warrant.

What Does a Volt Typhoon Cyber Kill Chain Look Like?

The Department of Homeland Security’s Cybersecurity and Infrastructure Security Agency (CISA) has published detailed descriptions of the typical pattern of attack involving Volt Typhoon. Drawing from a February 2024 CISA notice, a Volt Typhoon cyber kill chain typically involves:

1. Extensive pre-compromise reconnaissance

Surveillance starts by profiling network traffic into and out of a target organization. The goal is to identify:

- Hardware at the edge: By identifying hardware at the edge and the OS versions on the hardware, the attacker can plan the cyber weapons they need for the next stage of the attack. The makes, models, and configurations of routers and other edge devices can often be determined by careful observation of in-bound and out-bound traffic.

- Communications protocols: Observed communications protocols provide insight into the makes, models, and configurations of other devices further inside a target network.

2. Exploitation of edge devices using known or zero-day vulnerabilities

Compromising the edge devices of a target network is important because those edge devices are often configured to provide alerts when indicators of compromise occur. By compromising the edge devices themselves (routers, firewalls, etc.), the attacker can “blind” the sensor network. Exploitation will target the operating systems and applications on the edge devices. Once edge devices have been compromised, those devices themselves become part of the surveillance network, but with a privileged view of behavior “inside the network.” Volt Typhoon has used a wide variety of exploitation techniques:

- Memory safety exploitation: In one confirmed compromise, Volt Typhoon actors likely obtained initial access by exploiting CVE-2022-42475 in a network perimeter FortiGate 300D firewall that was not patched. There is evidence of a buffer overflow attack identified within the Secure Sockets Layer (SSL)-VPN crash logs.

- Web-based exploitation: Some edge devices have poorly configured management interfaces, which can expose administrative functions to the Internet.

3. Obtaining administrative credentials using a privilege escalation vulnerability

Using the privileged position on edge devices, the attacker tries to determine administrative credentials so that further access to devices will no longer require the exploitation from step 2 above. Utilizing credentials also means that the attacker can maintain persistence on a device, even if the exploitation vector from step 2 is patched.

4. Moving laterally to the domain controller, using the stolen credentials

Compromising the domain controller is a key objective, because it means that all further accesses will have the patina of legitimacy. Identifying fraudulent logins from legitimate user names and passwords requires sophisticated tools that most organizations don’t use.

5. Discovery on victim network, using Living-off-the-Land (LOTL) techniques

With “domain controller” access, the attacker now moves into the stage of executing their mission on target, which might be:

- Exfiltrate data: Often the objective of an attacker is to steal sensitive data (e.g., medical records, financial information, corporate intellectual property, government classified information). The attacker will be able to patiently find where that sensitive data is located and craft a plan for low-probability-of-detection exfiltration of that data.

- Inject tools for direct action: This could mean placing assets for ransomware attacks or disruption of system services. Extensive documentation has highlighted the PRC’s commitment to disrupting US critical infrastructure in the event that China attempts to retake Taiwan.

What Can Be Done Today to Prevent Volt Typhoon’s Future Success? Addressing Memory Safety

The attack scenario above shows that exploiting a memory safety vulnerability is part of the Volt Typhoon cyber kill chain. That is a logical choice for an attacker: (1) six of the weaknesses on MITRE’s CWE Top 25 most dangerous software weaknesses list relate to memory safety; (2) 70% of Google and Microsoft security patches relate to memory safety, as referenced in NSA’s advisory about memory safety; and (3) in VxWorks URGENT/11, 6 of the 11 vulnerabilities relate to memory safety.

It is reasonable to hypothesize that Volt Typhoon cyber kill chains also target OT/ICS devices, which are attractive as both edge devices (step 2 above) and as controllers of critical infrastructure. Capture of these devices enables Volt Typhoon to directly disrupt critical infrastructure by sending malicious commands and/or erroneous data to these devices. Additionally, these OT/ICS devices are often good pathways into the IT infrastructure.

In the case of an attack on Taiwan, Volt Typhoon could deter the US from taking action by exploiting a memory-based vulnerability, for example, to disrupt a major power grid.

Approaches to memory safety exist today, including hardening the software in OT/ICS devices to withstand attacks against both known and unknown memory-based vulnerabilities. Unlike static defenses that can be easily bypassed, techniques like moving target defense randomize the memory layout of software binaries at a granular level. Because the layout keeps shifting, attackers are unable to predictably exploit memory vulnerabilities.

For example, RunSafe Security’s Protect solution mitigates cyber exploits by dynamically relocating software functions in memory every time the software is loaded. By ensuring that the memory layout changes every time software is loaded, RunSafe effectively thwarts repeated attack attempts and prevents bad actors, like Volt Typhoon, from compromising critical infrastructure.

Call to Action for Critical Infrastructure Owners

As outlined, there is time to foil Volt Typhoon’s Exploit stage and their ultimate goal of impacting the United States response if a conflict arises. Only critical infrastructure owners can close the gap—by demanding Secure by Design systems and insisting on runtime protection against memory-based exploits.

Unlike CISA, which provides guidance and recommendations, critical infrastructure owners purchase and update ICS/OT devices installed in their plants, grids, and factories. It falls in their hands to require, demand, and pound the table for ICS/OT device manufacturers, who are their vendors, to increase the cyber resilience of embedded devices. These devices must have the ability to operate through attacks in real-time without the need for human, centralized monitoring and response.

By demanding memory safety and other defense measures from their ICS/OT device vendors now, critical infrastructure owners can prevent the success of Volt Typhoon in the future.

Want to hear from RunSafe leaders on how to approach this challenge? Tune in to the RunSafe Security Podcast episode on Volt Typhoon to explore real-world implications and actionable strategies.

Learn more about RunSafe Security’s Protect solution in our technical deep dive.


Secure by Design: Building a Safer Future Through Memory Safety


The software industry faces a persistent challenge: how to address memory safety vulnerabilities, one of the most common and exploited flaws in modern systems. Memory safety vulnerabilities expose systems to remote control, data breaches, and disruptions, often with devastating consequences. In response, Secure by Design principles offer a forward-thinking solution to the challenge by embedding security into the foundation of software development.

In a recent webinar hosted by RunSafe Security, industry experts Doug Britton, EVP and Chief Strategy Officer at RunSafe, and Shane Fry, Chief Technology Officer at RunSafe, provided insights into the critical role that Secure by Design can play in mitigating these vulnerabilities.

Here are the key takeaways from their discussion and how RunSafe’s approach supports a proactive cybersecurity model.

Access the full webinar discussion on memory safety here.

Memory Safety: A Persistent Threat

Memory safety vulnerabilities are among the oldest and most widespread issues in software development. Doug Britton emphasized how these vulnerabilities, despite being known for decades, persist in many codebases and continue to be exploited by attackers.

“The classic memory safety issues are among the oldest and most insidious in software,” Doug explained. “We’ve known about them for decades, but they persist, and attackers continue to exploit them.”

According to Jack Cable, senior technical advisor at CISA, two-thirds of vulnerabilities in compiled code are related to memory safety. Attackers frequently weaponize these flaws, leading to widespread breaches and disruptions. Even advanced testing tools and compilers often fail to detect all vulnerabilities, leaving organizations exposed. These vulnerabilities include buffer overflows, which Doug and Shane referenced as a common point of exploitation.

What Is Secure by Design?

Secure by Design is an approach to software development that prioritizes security from the very beginning. Rather than bolting on security measures after the fact, Secure by Design integrates security into every stage of the development lifecycle. The method aims to reduce the likelihood of vulnerabilities by making security an inherent part of the system architecture.

In the case of memory safety, this often involves transitioning to memory-safe languages like Rust and Go, which can drastically reduce the risk of memory-related bugs. However, as Doug noted, transitioning entire codebases to these languages is not a quick fix. “Most vendors we’ve spoken to have said it’s going to take them at least eight years to get a significant portion of their code running in memory-safe languages,” he shared.

This timeline introduces a significant challenge: organizations cannot afford to wait for years while attackers continue to exploit existing vulnerabilities. Immediate solutions are needed to bridge the gap.

RunSafe’s Contribution to Secure by Design

RunSafe Security provides a practical, scalable solution to address memory safety vulnerabilities while organizations work toward the long-term goal of transitioning to memory-safe languages. Instead of requiring developers to rewrite their entire codebase, RunSafe’s technology mitigates vulnerabilities without changing a single line of code.

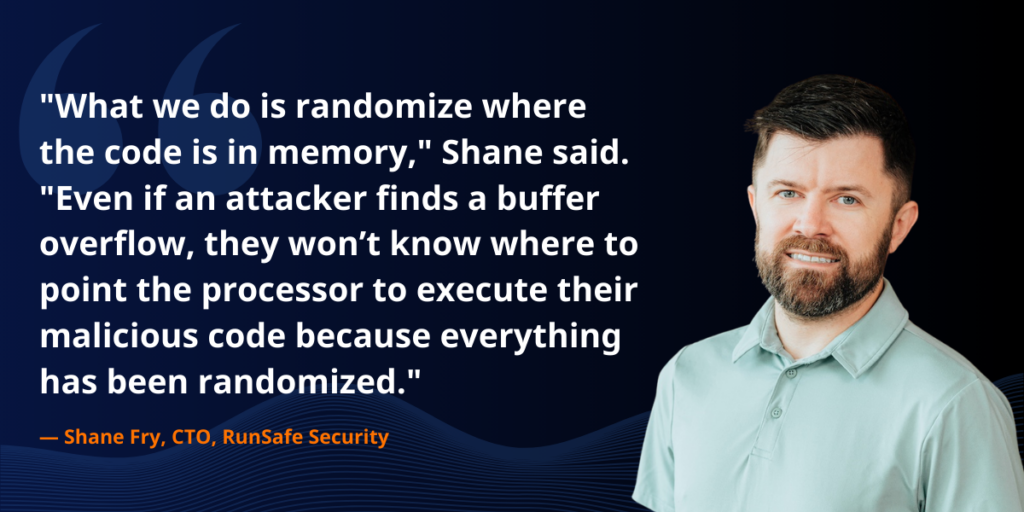

As Shane Fry explained, RunSafe’s approach focuses on randomizing code layouts in memory. This method prevents attackers from predicting where code is located, making it significantly harder to exploit memory vulnerabilities such as buffer overflows.

“What we do is randomize where the code is in memory,” Shane said. “Even if an attacker finds a buffer overflow, they won’t know where to point the processor to execute their malicious code because everything has been randomized.”
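
Shane’s point can be put into rough numbers under a simplified model, assumed here for illustration: n equal-sized functions, shuffled uniformly at random on every load. If an attacker learned where k particular functions sat during one run, the chance that all k are at the same offsets in the next run is 1 / (n × (n-1) × … × (n-k+1)). The hypothetical helper below computes that denominator. The model ignores bugs that leak addresses from the running process, which could reveal the current layout.

```c
#include <stdint.h>

/* Hypothetical helper: number of equally likely outcomes an attacker faces when
 * k specific functions (out of n equal-sized, uniformly shuffled ones) must all
 * sit at the offsets observed in an earlier run: n * (n-1) * ... * (n-k+1).
 * Returns 0 if k > n or if the result would not fit in 64 bits. */
uint64_t layouts_to_beat(unsigned n, unsigned k) {
    uint64_t total = 1;
    if (k > n) {
        return 0;
    }
    for (unsigned i = 0; i < k; i++) {
        uint64_t factor = (uint64_t)(n - i); /* >= 1 because i < k <= n */
        if (total > UINT64_MAX / factor) {
            return 0; /* product would exceed 64 bits */
        }
        total *= factor;
    }
    return total;
}
```

With 10 functions, a single remembered function address stays valid in about 1 run in 10, while needing three specific functions at once lengthens the odds to 1 in 720. Real binaries often contain thousands of functions, so these numbers grow quickly.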

This process, known as Moving Target Defense, effectively protects against both known and unknown vulnerabilities at runtime. RunSafe’s solution can be integrated in as little as 30 minutes, making it accessible for organizations of all sizes without disrupting development workflows.

Challenges in Adopting Secure by Design

Despite its clear benefits, adopting Secure by Design principles is not without its challenges. One of the most significant barriers is the transition to memory-safe languages. As Doug and Shane discussed, this process is resource-intensive and requires specialized expertise, which many organizations do not yet possess.

“To implement Rust or Go, developers need to learn these languages, and that takes time,” Doug pointed out. “It’s not as simple as downloading a module and instantly knowing Rust. It’s going to take an equivalent amount of time and effort as it took to build the original C and C++ codebases.”

Additionally, there are significant economic barriers to rewriting code, especially in industries reliant on legacy systems. Shane highlighted that while Secure by Design offers a path toward a safer future, organizations need interim solutions to protect themselves in the present.

This is where RunSafe’s solution becomes invaluable. By randomizing code layout, organizations can protect their existing systems while planning for the long-term transition to memory-safe languages. This hybrid approach allows companies to mitigate immediate risks without requiring massive investments in rewriting code from scratch.

The Benefits of Secure by Design

While Secure by Design requires upfront investment, its long-term benefits make it a critical strategy for organizations looking to build more secure and resilient systems.

Shane emphasized that acting now is crucial, as attackers won’t wait for organizations to fully transition to memory-safe languages. “The bugs that exist today are going to be exploited tomorrow,” he warned. “It’s essential that we take immediate steps to protect our systems.”

Key advantages include:

- Reduced Vulnerabilities: By embedding security into the development process, Secure by Design helps eliminate vulnerabilities before they become exploitable, reducing the need for costly patches later.

- Lower Costs: Addressing security during the design phase can significantly reduce the need for emergency updates, saving organizations time and money.

- Improved Developer Efficiency: With security integrated from the start, developers can focus on innovation rather than constantly fixing vulnerabilities post-launch.

- Increased Customer Trust: Organizations that prioritize Secure by Design demonstrate a commitment to safety, which builds trust among customers, partners, and stakeholders.

- Future-Proofing: Secure by Design helps organizations stay ahead of emerging threats, ensuring that their systems are prepared to withstand future cyberattacks.

Building a Resilient Future

Secure by Design principles offer a clear path forward for organizations looking to protect themselves from memory safety vulnerabilities. RunSafe Security provides a critical solution that helps companies bridge the gap between today’s risks and the long-term goal of transitioning to memory-safe languages.

By embracing Secure by Design and leveraging RunSafe’s technology, organizations can build more resilient systems, reduce their exposure to vulnerabilities, and protect their operations from the ever-present threat of cyberattacks.

For a deeper dive into Secure by Design strategies and memory safety solutions, download our full webinar and learn from industry experts Doug Britton and Shane Fry how to protect your codebase and future-proof your organization’s cybersecurity strategy.


The post The Real Cost of Rewriting Code for Memory Safety – Is There a Better Way? appeared first on RunSafe Security.

The Real Cost of Rewriting Code for Memory Safety – Is There a Better Way?

Introduction

Memory safety vulnerabilities are a persistent and pervasive issue in software development, leading to some of the most severe and costly security breaches. From buffer overflows to dangling pointers, these vulnerabilities are a common attack vector, exploited by malicious actors to gain unauthorized access, corrupt data, or crash systems. Traditionally, the go-to solution has been to rewrite the affected codebase in a memory-safe language. However, this approach is often impractical and fraught with challenges, including substantial time investment, high costs, and the inherent complexity of reworking existing systems without introducing new bugs.
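To make the buffer overflow concrete, here is a toy model in Python rather than real memory: a simulated stack frame is a `bytearray` holding an 8-byte buffer followed by a 4-byte return address. The layout, sizes, addresses, and helper names (`make_frame`, `unchecked_copy`, `checked_copy`, `read_return_address`) are illustrative assumptions; real stack layouts depend on the compiler, the ABI, and whatever mitigations are enabled. An unchecked copy, like an unchecked `strcpy` in C, lets oversized input spill into the return address, while a bounds-checked copy rejects it.

```python
# Toy model for illustration only; not how any particular compiler lays out a stack frame.
BUF_SIZE = 8  # bytes reserved for the local buffer
RET_SIZE = 4  # bytes for the saved return address (32-bit in this model)


def make_frame(ret_addr: int) -> bytearray:
    """Simulated frame: a zeroed buffer followed by the return address (little-endian)."""
    return bytearray(BUF_SIZE) + ret_addr.to_bytes(RET_SIZE, "little")


def read_return_address(frame: bytearray) -> int:
    """Decode the return address stored just past the buffer."""
    return int.from_bytes(frame[BUF_SIZE:BUF_SIZE + RET_SIZE], "little")


def unchecked_copy(frame: bytearray, data: bytes) -> None:
    """Like an unchecked strcpy in C: copies every byte, even past the end of the buffer."""
    for i, byte in enumerate(data):
        frame[i] = byte  # no check against BUF_SIZE, so long input overwrites the return address


def checked_copy(frame: bytearray, data: bytes) -> bool:
    """Bounds-checked copy: rejects input that does not fit in the buffer."""
    if len(data) > BUF_SIZE:
        return False
    frame[:len(data)] = data
    return True


legit = 0x08001000     # hypothetical address the function should return to
attacker = 0x08004242  # hypothetical address of code the attacker wants to run
payload = b"A" * BUF_SIZE + attacker.to_bytes(RET_SIZE, "little")

frame = make_frame(legit)
unchecked_copy(frame, payload)
print(hex(read_return_address(frame)))  # 0x8004242: execution would "return" where the attacker chose

frame = make_frame(legit)
print(checked_copy(frame, payload), hex(read_return_address(frame)))  # False 0x8001000
```

Note that the attacker's payload has to contain the exact address of the code they want to reach. Defenses that make that address unpredictable raise the bar even while the underlying bug remains in place.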

Rewriting code for memory safety is akin to renovating an old house: it’s labor-intensive, expensive, and often reveals unforeseen problems that further complicate the project. For developers and IT security professionals, the prospect of re-engineering vast amounts of legacy code across hundreds of product types and millions of fielded devices is daunting, frequently leading to project delays and added pressure on already stretched resources. Technology leaders and system architects face the additional burden of justifying these costs and disruptions to stakeholders.

But what if there were a better way?

Advances in technology now offer innovative solutions that enhance memory safety without the need for extensive code rewrites. These cutting-edge approaches mitigate risks while saving time and resources, enabling product manufacturers to secure their systems more efficiently and effectively. Read on to discover these groundbreaking methods and explore how they can transform the landscape of software security.

The Hidden Costs of Rewriting Code

Rewriting code for memory safety is usually a monumental endeavor that consumes significant time and resources. For software developers and engineers, the process involves learning new programming languages, testing open source components that may not be compatible, hiring developers with different skills, retesting everything, and then persuading customers to buy new versions of devices they just purchased. This work demands a high level of expertise and substantial engineering hours, diverting valuable resources from other critical projects.

Moreover, rewriting code can inadvertently introduce new bugs and vulnerabilities. As developers modify and restructure the code, there is always the risk of human error, leading to new security flaws that may be even harder to detect and fix. This not only undermines the original goal of improving security but can also require additional rounds of testing and debugging, further stretching timelines and budgets.

The disruption caused by rewriting code extends beyond the development team. Existing workflows are interrupted, and scarce resources that could go toward new features are instead spent redoing existing ones, delaying project timelines and forcing deviations from carefully planned product roadmaps. For technology leaders and system architects, this upheaval creates significant strategic challenges, as they must balance the urgent need for security against the equally pressing demands of innovation and market competitiveness.

In light of these hidden costs, it becomes evident that the traditional approach to memory safety is far from ideal. Product owners and development teams need solutions that address security vulnerabilities without derailing their operations and straining their resources.

The Memory Safety Crisis: A Growing Concern

Memory safety vulnerabilities have become a pressing issue in today’s digital landscape, with far-reaching consequences. Microsoft has reported that roughly 70% of the vulnerabilities it assigns a CVE each year are memory safety issues, underscoring the critical nature of this threat. High-profile incidents such as the Heartbleed bug and the WannaCry ransomware attack highlight the devastating impact these vulnerabilities can have. These incidents not only compromised sensitive data but also caused billions of dollars in damages and disrupted services globally.

As software systems grow increasingly complex, maintaining memory safety becomes more challenging. Modern applications often integrate numerous third-party libraries and dependencies, each with its own potential vulnerabilities. This complexity amplifies the difficulty of ensuring that every component adheres to stringent memory safety standards. For software developers and security professionals, the task of safeguarding these intricate systems is akin to finding a needle in a haystack, requiring continuous vigilance and comprehensive testing.

Given the escalating scale and sophistication of these threats, the need for proactive and effective solutions is more urgent than ever. Relying on code rewrites alone is no longer sufficient, because rewrites cannot keep pace with the volume of vulnerable code already deployed. Product manufacturers must adopt innovative strategies that can address memory safety vulnerabilities swiftly and efficiently, without compromising their operational capabilities.

Innovative Approaches to Memory Safety

New approaches to memory safety are transforming the way product manufacturers address vulnerabilities. Advanced software hardening techniques have emerged as a game-changer, providing robust security enhancements without disrupting existing workflows. These methods integrate with current software development processes, mitigating memory safety risks without compromising operational efficiency.

Key features of these advanced approaches include real-time monitoring and threat detection, which continuously scan applications for suspicious activity. Automated response and recovery mechanisms further bolster security by swiftly neutralizing threats and restoring systems to a safe state, minimizing downtime and mitigating the impact of attacks.

Moreover, these innovative solutions are designed to be compatible with a wide range of software environments, ensuring that they can be deployed across diverse platforms and applications. This flexibility makes it easier to adopt these techniques without extensive modifications to existing infrastructure. For product managers and security leaders, the benefits are clear: enhanced security, reduced risk of breaches, and a more resilient software ecosystem, all achieved without the significant resource investment typically associated with code rewrites.

Addressing Memory Safety: A Comprehensive Approach

Addressing memory safety requires a comprehensive and multifaceted approach that goes beyond implementing innovative technologies. While advanced software hardening techniques are crucial, their effectiveness is amplified when combined with best practices and robust organizational policies. This holistic strategy ensures that every aspect of the software development lifecycle prioritizes memory safety, creating a resilient and secure foundation.

Collaboration is key to achieving this goal. Developers, security teams, and stakeholders must work together to identify vulnerabilities, develop mitigation strategies, and implement effective solutions. By fostering open communication and collaboration, product teams can ensure that memory safety is not just a technical issue but a shared responsibility. This unified effort helps to align priorities, streamline processes, and ensure that security considerations are integrated into every phase of development.

Continuous training and awareness are also vital components of a comprehensive memory safety strategy. Regular training sessions and workshops help keep teams updated on the latest threats, best practices, and technological advancements. Encouraging a culture of knowledge-sharing and continuous learning ensures that everyone, from junior developers to senior architects, remains vigilant and informed.

By combining innovative solutions with collaborative efforts and ongoing education, product manufacturers can build a robust defense against memory safety vulnerabilities. This comprehensive approach not only enhances security but also promotes a culture of secure software development, ensuring long-term protection and resilience.

To protect against memory safety vulnerabilities without rewriting a single line of code, consider implementing software memory protections and calculating your potential attack surface reduction. Imagine how much your CFO will appreciate the efficiency and cost savings of this proactive security measure. Start enhancing your software’s defense today!

The post The Memory Safety Crisis: Understanding the Risks in Embedded Software appeared first on RunSafe Security.

The Memory Safety Crisis: Understanding the Risks in Embedded Software

Introduction to Memory Safety

Memory safety is a foundational aspect of software development, ensuring that programs operate reliably and securely without accessing or manipulating memory incorrectly. In embedded systems, where software controls critical functions such as transportation systems or power grids, the importance of memory safety cannot be overstated.

The National Security Agency (NSA) has issued guidance emphasizing the severity of memory safety vulnerabilities, and major tech companies like Google and Microsoft have underscored their prevalence. Likewise, the Cybersecurity and Infrastructure Security Agency (CISA) has issued an implementation plan to fortify and defend the digital landscape.

This blog post delves into the risks posed by memory vulnerabilities in embedded software, the challenges in addressing them, and how embedded software security solutions like RunSafe Security can enhance memory safety without extensive code rewrites or performance degradation.

Risks of Memory-Based Vulnerabilities in Embedded Software

Memory-based vulnerabilities in embedded software are not just a concern; they are a substantial and pressing threat to software deployed within critical infrastructure. The NSA’s November 2022 guidance underlines the gravity of this risk. These vulnerabilities can compromise the integrity and security of essential systems, a risk that cannot be ignored.

An analysis from MITRE reveals a similarly sobering reality: three of the top eight most dangerous software weaknesses are memory safety issues. Google and Microsoft echo these concerns, reporting that nearly 70% of their vulnerabilities in native code stem from memory-based flaws.

The NSA’s recommendation to move quickly to memory-safe alternatives like Go, Java, Ruby, Rust, and Swift highlights the urgency of the situation. However, rewriting code for memory safety means touching billions of lines of code across countless code bases and products, a monumental task that presents significant challenges to any organization in both financial investment and opportunity cost.

Challenges of Addressing Memory Safety

Traditionally, the recommended approach to addressing memory safety in embedded software has been rewriting code in languages like Rust, which is known for its memory safety features. However, rewriting billions of lines of code across numerous code bases and products entails significant costs and time investments. Moreover, this may disrupt existing workflows and introduce unnecessary complexities into development processes.

RunSafe’s Innovative Approach to Memory Safety

RunSafe Security presents a pioneering alternative to conventional methods, giving organizations a way to achieve memory safety protections without extensive code changes or sacrificing performance. RunSafe hardens code by randomizing the placement of functions in memory, so that each time a binary runs, it has a unique memory layout.
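The general idea of function-level layout randomization can be sketched with a small Python simulation. This is a generic model, not RunSafe’s implementation: the function names, sizes, and load address are made up, and the model simply shuffles the functions and places them back to back on each load. An attacker who reads the address of `unlock_door` from one copy of the binary finds that the address is usually wrong on a randomized load.

```python
import random

# Hypothetical firmware functions and their sizes in bytes (illustrative values only).
FUNCTIONS = {
    "init": 0x120,
    "parse_packet": 0x340,
    "log_event": 0x80,
    "unlock_door": 0x60,
    "main_loop": 0x200,
}
BASE = 0x08000000  # hypothetical load address of the code region


def layout(order):
    """Place functions back to back in the given order; return {name: start address}."""
    addrs, cursor = {}, BASE
    for name in order:
        addrs[name] = cursor
        cursor += FUNCTIONS[name]
    return addrs


def randomized_layout(rng: random.Random) -> dict:
    """Shuffle the function order (once per load in this model), then lay them out."""
    order = list(FUNCTIONS)
    rng.shuffle(order)
    return layout(order)


static = layout(FUNCTIONS)      # same order on every device and every boot
target = static["unlock_door"]  # address an attacker learns by studying one copy

rng = random.Random(2024)       # seeded only so the demo is repeatable
loads = 1000
hits = sum(randomized_layout(rng)["unlock_door"] == target for _ in range(loads))
print(f"hardcoded address valid in {hits} of {loads} randomized loads")
```

With only five functions, the hardcoded address still matches in 1 of 20 loads on average in this model (exactly when `unlock_door` lands fourth and `main_loop` last). A real binary with hundreds of functions has vastly more possible orderings, which makes a correct guess far less likely.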

By embedding protective measures directly into the software during the build process, RunSafe effectively addresses memory-based vulnerabilities while maintaining system performance. This approach offers a practical and economically viable alternative to traditional security measures, mitigating the risk of exploitation without imposing significant overhead on operations.

Moreover, RunSafe seamlessly integrates with continuous integration and continuous delivery (CI/CD) pipelines, streamlining the incorporation of enhanced security measures into the software supply chain. This integration ensures that developers can maintain their productivity while simultaneously fortifying the security of their applications, significantly improving the resilience of deployed software against potential threats.

Software Supply Chain Security with RunSafe