The post The Top 6 Risks of AI-Generated Code in Embedded Systems appeared first on RunSafe Security.

RunSafe Security’s 2025 AI in Embedded Systems Report, based on a survey of over 200 professionals throughout the US, UK, and Germany working on embedded systems in critical infrastructure, captures the scale of this shift: 80.5% use AI in development, and 83.5% already have AI-generated code running in production.

As AI-generated code becomes a permanent part of embedded software pipelines, embedded systems teams need a clear view of where vulnerabilities are most likely to emerge.

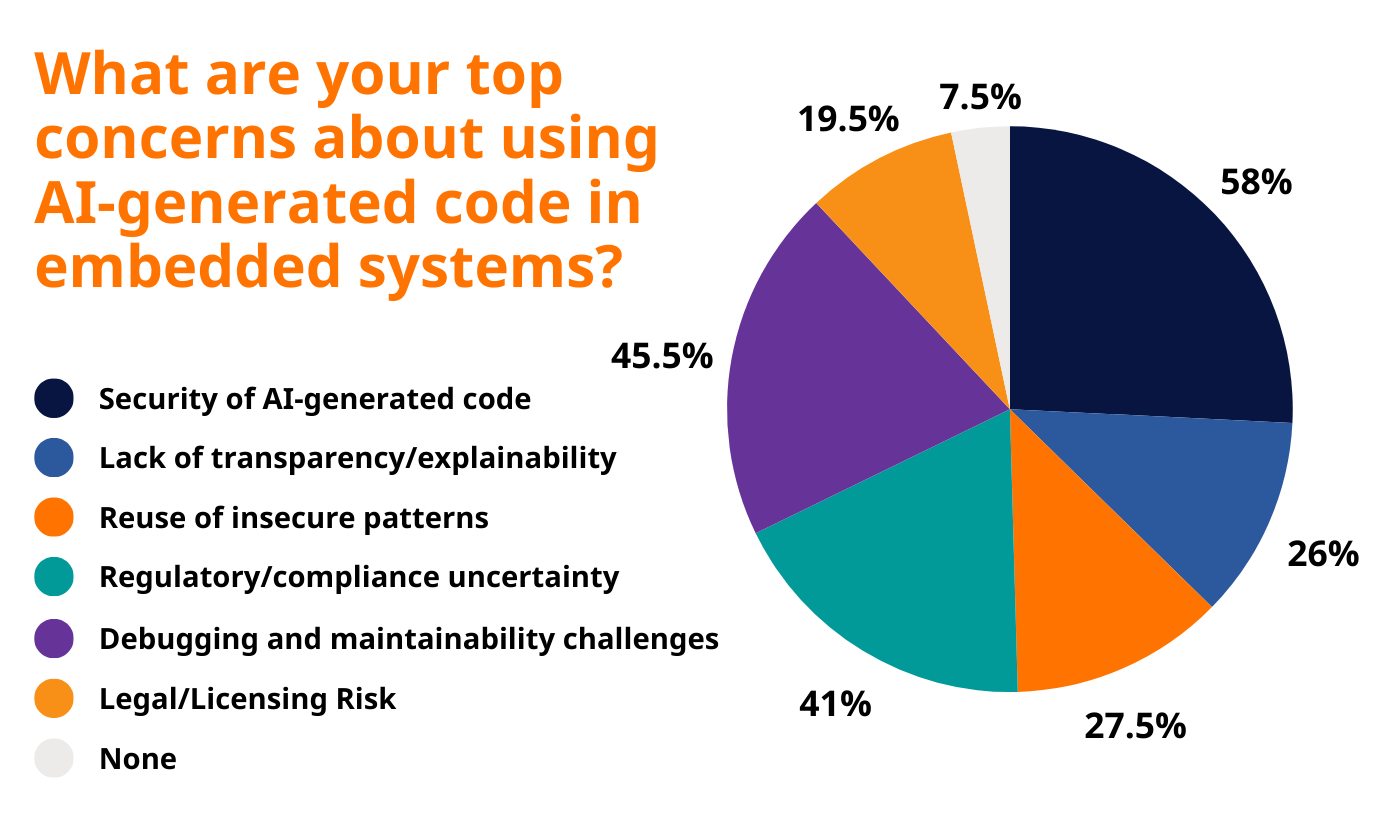

According to respondents, six risks stand out as the most urgent for embedded security in 2025.

The 6 Most Critical Risks of AI-Generated Code

1. Security of AI-Generated Code (53% of respondents)

Security vulnerabilities in AI-generated code remain the single most significant concern among embedded systems professionals. More than half of survey respondents identified this as their primary worry.

AI models are trained on vast repositories of existing code, much of which contains security flaws. When AI generates code, it can replicate and scale these vulnerabilities across multiple systems simultaneously. Unlike human developers, who might introduce a single bug in a single module, AI can reproduce the same flawed pattern across entire codebases.

The challenge is particularly acute in embedded systems, where code often runs on safety-critical devices with limited ability to patch after deployment. A vulnerability in an industrial control system or medical device can persist for years, creating long-term exposure. Traditional code review processes, designed to catch human errors at human speed, struggle to keep pace with the volume and velocity of AI-generated code.

2. Debugging and Maintainability Challenges (45.5% of respondents)

Nearly half of embedded systems professionals worry about the difficulty of debugging and maintaining AI-generated code. When a human engineer writes code, they understand the logic and intent behind every decision. When AI generates code, that understanding often doesn’t transfer.

When bugs appear, tracing their root cause becomes significantly harder. Engineers must reverse-engineer the AI’s logic rather than reviewing their own design decisions. When modifications are needed—whether for new features or security patches—developers must understand code they didn’t write and may struggle to modify without introducing new issues.

In embedded systems, where code often has a lifespan measured in decades, maintainability is critical. If the original code is AI-generated and poorly documented, each maintenance cycle becomes progressively more difficult and error-prone.

3. Regulatory and Compliance Uncertainty (41% of respondents)

41% of survey respondents flagged regulatory and compliance uncertainty as a major concern with AI-generated code. Yet the certification processes organizations rely on today weren’t designed to account for AI-generated code. In regulated industries such as medical devices or aerospace, obtaining code certification requires extensive documentation of design decisions and validation procedures. When AI generates the code, much of that documentation doesn’t exist in traditional forms.

This creates several challenges. Organizations must make their own determinations about what constitutes adequate validation for AI-generated code, with limited regulatory guidance. When incidents occur, the question of liability becomes murky: Who is responsible when AI-generated code fails?

4. Reuse of Insecure Patterns (27.5% of respondents)

AI models learn from existing code, and that code is often flawed. More than a quarter of embedded systems professionals worry that AI tools will perpetuate and scale insecure coding patterns across their systems.

This risk is particularly concerning because it’s systemic rather than isolated. If an AI model is trained on C/C++ code that commonly contains memory safety issues—and C/C++ historically dominates embedded systems—the AI will likely generate code with similar vulnerabilities.

This risk isn’t just theoretical. Memory safety vulnerabilities such as buffer overflows and use-after-free errors account for roughly 60-70% of all security vulnerabilities in embedded software. If AI tools perpetuate these patterns at scale, the industry could see a multiplication of one of its most persistent and exploitable vulnerability classes.
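As a rough illustration of how a team might look for repeated insecure patterns in generated C code, the sketch below flags calls to a few unbounded C library functions. The function list, the `find_unbounded_calls` helper, and the sample source are assumptions made for this example; a real review would rely on a proper static analyzer rather than a regular expression.

```python
import re

# Unbounded C library calls commonly associated with buffer overflows.
# This list is an illustrative assumption, not an exhaustive rule set.
UNBOUNDED_CALLS = ("strcpy", "strcat", "sprintf", "gets")

_CALL_RE = re.compile(r"\b(" + "|".join(UNBOUNDED_CALLS) + r")\s*\(")


def find_unbounded_calls(source: str) -> list[tuple[int, str]]:
    """Return (line_number, function_name) for each flagged call in C source text."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        # Naive comment stripping: ignores anything after "//" on the line.
        code = line.split("//", 1)[0]
        for match in _CALL_RE.finditer(code):
            hits.append((lineno, match.group(1)))
    return hits


# Hypothetical generated C snippet used only to exercise the scanner.
SAMPLE = """\
void set_name(char *dst, const char *src) {
    strcpy(dst, src);              // unbounded copy
    snprintf(dst, 16, "%s", src);  // bounded, not flagged
}
"""

print(find_unbounded_calls(SAMPLE))  # [(2, 'strcpy')]
```

Because the same check runs identically over every file, it suits the systemic nature of the risk: one flawed pattern repeated across a codebase shows up as many hits for the same function name.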

5. Lack of Transparency and Explainability (26% of respondents)

AI-generated code often functions as a black box. The code works, but understanding why it works or why it fails can be extraordinarily difficult. 26% of embedded systems professionals cite this lack of transparency as a significant concern.

In embedded systems, where reliability and safety are paramount, this opacity creates serious problems. Engineers need to understand not just what the code does, but how it handles edge cases, error conditions, and unexpected inputs. With AI-generated code, that understanding is often incomplete or absent.

The survey reveals additional concern about what happens when AI-generated code becomes increasingly bespoke. Historically, when developers used shared libraries, a vulnerability discovered in one place could be patched across an entire ecosystem. If AI generates unique implementations for each deployment, this shared vulnerability intelligence fragments, making collective defense more difficult.

6. Legal and Licensing Risks (19.5% of respondents)

Nearly one in five embedded systems professionals identifies legal and licensing risks as a concern with AI-generated code. AI models are trained on vast amounts of code, much of it open source with specific licensing requirements. When AI generates code, questions arise: Does the output constitute a derivative work? Who owns the copyright to AI-generated code?

These questions remain largely unresolved, and different jurisdictions may answer them differently. For embedded systems manufacturers, this creates software supply chain risk. If AI-generated code inadvertently reproduces proprietary algorithms or patented methods from its training data, manufacturers could face infringement claims.

For organizations in regulated industries or those serving government customers, these legal uncertainties can be deal-breakers. Defense contractors, for example, must provide clear provenance and licensing information for all software components.

Why These Risks Matter for Embedded Systems Teams

The 2025 landscape reveals an industry at a critical juncture. AI has fundamentally changed how embedded software is developed, and that transformation is accelerating. 93.5% of survey respondents expect their use of AI-generated code to increase over the next two years.

But this acceleration is happening faster than security practices have evolved. The tools and processes that worked for human-written code at human speed aren’t designed for AI-generated patterns at machine velocity.

The good news is that awareness is high and investment is following: 91% of organizations plan to increase their embedded software security spend over the next two years.

Understanding these six critical risks provides a roadmap for where design decisions, security investments, and process changes will have the most significant impact. Organizations that address these risks proactively—through better tooling, enhanced testing, runtime protections, and clearer governance—will not only strengthen their systems but also position themselves as industry leaders.

The insights in this post are based on RunSafe Security’s 2025 AI in Embedded Systems Report, a survey of embedded systems professionals across critical infrastructure sectors.

Explore the full report to see the data, trends, and strategic guidance shaping the future of secure embedded systems development.

The post Beyond the Battlefield: How Generative AI Is Transforming Defense Readiness appeared first on RunSafe Security.

In a recent episode of Exploited: The Cyber Truth, RunSafe Security CEO Joseph M. Saunders and Ask Sage’s Arthur Reyenger joined host Paul Ducklin to discuss how AI is transforming mission readiness. Instead of focusing on sci-fi scenarios, their conversation looked at how AI is supporting missions behind the scenes.

Generative AI Is Solving an Age-Old Defense Problem: Time

For decades, defense teams have struggled under the weight of processes, documentation, testing requirements, and the sheer volume of data needed to support modern missions. Whether you’re analyzing electromagnetic spectrum threats, vetting new technology, or validating weapons systems, the bottleneck is almost always the same: time.

That’s exactly where generative AI is already having an outsized impact.

Organizations are using AI to speed up tasks that previously slowed entire programs—think requirements gathering, testing cycles, red-team scenario planning, and acquisition paperwork. Reyenger described one real-world deployment where a combat command used generative AI to evaluate new technologies faster:

“We saved them 95% of the time and the cost to be able to go through those processes.”

That kind of acceleration doesn’t just make workflows cleaner—it moves capability into the field when warfighters actually need it.

The Real AI Revolution Is Happening in Engineering, Logistics, and Maintenance

If there’s a misconception about AI in defense, it’s that its greatest value lies in autonomous weapons. In reality, AI is transforming less glamorous, but mission-critical areas like code development and sustainment.

Saunders emphasized that AI is already reshaping how embedded systems and defense software are built. Instead of teams getting buried in boilerplate code, AI handles the repeatable pieces, letting engineers focus on architecture, performance, and security. The result is faster innovation and more secure systems.

Another example comes straight from the U.S. Navy. Ships equipped with 3D printers previously had to request schematics and documentation from shore through slow, satellite-connected networks. Now, generative AI models running locally can help crews identify the right parts, understand dependencies, and produce what they need instantly, even while offline.

This is the kind of operational lift that rarely makes headlines but changes everything. Missions recover faster. Readiness improves. Warfighters stay effective in environments where bandwidth, connectivity, and time are scarce.

A Future of Responsible, Secure, Human-Centric AI

As the Department of Defense continues to adopt AI, one principle remains non-negotiable: humans stay in the loop. The most powerful applications of generative AI are the ones reducing cognitive load so people can make better decisions.

Reyenger captured this well when discussing how AI fits into modern workflows:

“Technology should not be dictating the way that organizations define their workflows. It should be supporting them. If you’re doing it a certain way, it was because it was right at a time.”

This mindset also extends to the cybersecurity and model-security challenges surrounding AI. Ask Sage’s “fire-and-forget” architecture, for example, ensures sensitive data doesn’t persist inside models—an essential requirement for defense environments where security, privacy, and zero-trust principles are table stakes.

As Saunders emphasized in the episode, the goal isn’t just choosing the best foundation model today. It’s ensuring that defense teams aren’t locked into a single vendor or platform, and that AI remains flexible enough to evolve with the mission.

Final Thought: AI Isn’t Making Warfighters More Robotic — It’s Making Them More Human

The more generative AI takes on repetitive work—documentation, analysis, testing, search, troubleshooting—the more time experts can spend on creativity, strategy, and judgment. And that’s where warfighters deliver their greatest value.

AI’s impact in defense isn’t about the machines. It’s about freeing people to think, decide, innovate, and act faster and with more confidence.

Listen to the full episode here.

The post Meeting ICS Cybersecurity Standards With RunSafe appeared first on RunSafe Security.

As software supply chains grow in complexity and ICS devices take on more digital functionality, operators face risk from vulnerabilities buried deep within firmware, dependencies, and proprietary code. Strengthening security and demonstrating compliance begins with improving the integrity, transparency, and resilience of that software.

RunSafe helps industrial organizations achieve this by hardening code against exploitation, increasing visibility into software components through build-time Software Bill of Materials (SBOM) generation, and extending protection to systems that can’t easily be patched or rebuilt.

These capabilities align directly with the technical controls required across major ICS cybersecurity standards, helping operators close gaps in their security posture.

Key ICS Cybersecurity Standards RunSafe Supports

| ICS Standard / Regulation | Relevant Requirements | RunSafe Capability That Supports It |
|---|---|---|
| IEC 62443 (including SR 3.4: Software & Information Integrity) | Software integrity, tamper prevention, secure component management | Protect: Runtime exploit prevention stops unauthorized code execution even when vulnerabilities exist. Identify: Build-time SBOMs document components for integrity verification. |
| NIST 800-82 (Guide to ICS Security) | System integrity (SI), configuration management (CM), continuous monitoring (RA/CA), incident response | Identify: SBOMs support configuration management and vulnerability assessment. Protect: Runtime exploit mitigation enhances system integrity. Monitor: Crash analytics & exploit detection support continuous monitoring. |
| NIST Risk Management Framework (RMF) | Ongoing assessment, vulnerability management, security controls validation | Identify: SBOMs accelerate risk assessment and control verification. Monitor: Evidence and telemetry support ongoing authorization and assessment. |
| NERC CIP | Software integrity, vulnerability assessments, incident reporting, BES Cyber System security | Identify: SBOMs shorten vulnerability assessment cycles. Protect: Hardens embedded systems to maintain operational integrity. Monitor: Provides supporting data for CIP-008 incident response. |
| EU Cyber Resilience Act (CRA) | Mandatory SBOMs, secure-by-design software, vulnerability handling, lifecycle security | Identify: Build-time SBOM generation identifying all components, including for C/C++ builds. Protect: Code hardening reduces exploitability for both known and unknown vulnerabilities. |
| U.S. Federal SBOM Mandates (NTIA, DHS, DoD, FDA) | Accurate, complete, machine-readable SBOMs; traceability; vulnerability identification | Identify: Comprehensive CycloneDX SBOMs generated at build-time that support all mandatory NTIA fields. |
| UK Cybersecurity and Resilience Bill | Supply chain assurance, software integrity, rapid incident reporting | Identify: SBOMs enable supply chain verification and vulnerability tracking. Protect: Code hardening reduces exploitability for both known and unknown vulnerabilities. |
| ISA/IEC 62443-4-1 (Secure Development Lifecycle) | Component inventory, secure build processes, threat mitigation | Identify: SBOM visibility integrated into SDLC and build processes. Protect: Mitigates memory-based vulnerabilities for devices in the field even before patches are available. |

IEC 62443 Security Requirements

IEC 62443 defines security levels (SL-1 to SL-4) to counter cyber threats to ICS systems. Security Requirement 3.4 requires mechanisms to ensure software and information integrity by detecting and preventing unauthorized modifications, which is essential for defending against zero-day exploits.

RunSafe Security supports this with runtime code protection and automated defenses that maintain software trustworthiness in ICS devices, aligning with these IEC 62443 integrity requirements.
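To make the "detect unauthorized modifications" part of SR 3.4 concrete, here is a minimal sketch of a generic integrity check that compares a firmware image against a known-good SHA-256 digest. It is not RunSafe's mechanism and does not by itself satisfy the requirement; the `verify_image` helper and the sample bytes are assumptions for illustration.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a lowercase hex string."""
    return hashlib.sha256(data).hexdigest()


def verify_image(image: bytes, expected_digest: str) -> bool:
    """Return True only if the image hashes to the expected digest."""
    # compare_digest performs a constant-time comparison of the two strings.
    return hmac.compare_digest(sha256_hex(image), expected_digest.lower())


# Hypothetical firmware bytes; in practice the digest would be recorded
# at build time and stored separately from the image it protects.
firmware = b"\x7fELF example firmware bytes"
known_good = sha256_hex(firmware)

tampered = firmware + b"\x90"  # a single appended byte changes the digest

print(verify_image(firmware, known_good))  # True
print(verify_image(tampered, known_good))  # False
```

A bare hash only detects changes. Preventing an attacker from swapping both the image and its digest typically requires a signed manifest or a hardware root of trust, which is outside the scope of this sketch.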

NIST 800-82 Control Families

NIST SP 800-82 is a specialized guidance document focused on Industrial Control Systems (ICS) and Operational Technology (OT) environments. It defines 19 control families tailored to these unique contexts, addressing operational, technical, and management controls relevant to ICS security.

RunSafe’s Protect solution assists in meeting NIST standards by hardening software across firmware, applications, and operating systems to reduce vulnerabilities, especially memory-based and zero-day threats. This aligns with minimizing risks outlined in NIST 800-82, such as unauthorized modifications, malware infections, and system exploitation.

NERC CIP Standards

NERC CIP applies to bulk electric systems and mandates stringent access control, security monitoring, and incident response to protect critical grid infrastructure.

RunSafe’s automated software hardening strengthens embedded software against vulnerabilities, including zero-day attacks, helping to meet NERC CIP mandates for cybersecurity system management and reducing the attack surface of BES Cyber Systems.

EU Cyber Resilience Act

The EU Cyber Resilience Act imposes mandatory cybersecurity requirements on manufacturers placing products with digital elements into the European market. The regulation requires comprehensive SBOM documentation, vulnerability disclosure processes, and Security by Design principles throughout the product lifecycle.

RunSafe empowers organizations to meet EU CRA requirements through automated build-time SBOM generation, embedded software hardening, and proactive vulnerability identification.

UK’s Cybersecurity and Resilience Bill

The UK’s proposed legislation extends cybersecurity obligations across critical national infrastructure sectors. The bill emphasizes supply chain security and mandates incident reporting within strict timeframes, creating accountability for operators and vendors.

RunSafe Security supports compliance with the UK Cybersecurity and Resilience Bill by providing embedded software security designed specifically for ICS systems and software supply chain transparency through build-time SBOM generation.

How RunSafe Hardens Code & Strengthens the Software Supply Chain to Meet ICS Security Standards

RunSafe improves ICS security posture by providing:

- Build-Time SBOM Generation: Provides complete visibility into software components and software supply chain risk, especially for C/C++ and embedded toolchains.

- Runtime Code Protection: Protects ICS devices in the field, even before patches are available, by preventing the exploitation of memory-based vulnerabilities, including zero-day exploits.

Together, these capabilities directly support key ICS cybersecurity requirements.

The Industrial Control System Software Risk Landscape

ICS cybersecurity risks increasingly stem from software complexity. PLCs, HMIs, sensors, gateways, and controllers rely on layered stacks of compiled code, RTOS kernels, communication libraries, protocol implementations, and third-party components. As this software ecosystem expands, several categories of risk emerge:

1. Vulnerabilities in Proprietary and Third-Party Components

Industrial devices often incorporate dozens or hundreds of software elements, both internally developed and externally sourced. Many of these components lack update mechanisms or clear lifecycle management. When vulnerabilities are disclosed, asset owners frequently lack the visibility needed to determine whether their systems are exposed.

2. Memory Safety Issues as a Persistent ICS Threat

Memory safety remains one of the most common contributors to ICS vulnerabilities. Buffer overflows, use-after-free flaws, and out-of-bounds writes still account for a significant portion of CVEs in industrial and embedded software. These weaknesses persist in critical infrastructure because:

- Many devices use older programming languages (e.g., C/C++)

- Patching may be infeasible due to uptime requirements

- Legacy firmware often cannot be rebuilt or re-verified

- Third-party components introduce memory safety risks through the software supply chain

Andy Kling, VP of Cybersecurity at Schneider Electric, a major player in the ICS/OT space, found that “memory safety was easily the largest percentage of recorded security issues that we had.” 94% of these weaknesses come from third-party components.

While memory safety is not the only category of ICS risk, it remains one of the most damaging, often enabling remote code execution or multi-stage exploit chains.

3. Software Supply Chain Blind Spots

Software supply chain cyberattacks frequently target the software dependencies and build environments behind industrial products. Without reliable SBOMs, operators cannot:

- Determine which libraries exist in a given binary

- Rapidly assess exposure to new vulnerabilities

- Confidently evaluate vendor-supplied code or firmware

The lack of software transparency turns compliance into guesswork and slows incident response.
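The exposure question above becomes a simple lookup once an SBOM exists. The sketch below parses a small CycloneDX-style JSON document and matches its components against an advisory. The SBOM contents, the advisory data, and the `affected_components` helper are all hypothetical; production tooling would match on package URLs or CPEs and handle version ranges rather than exact strings.

```python
import json

# Hypothetical, heavily trimmed CycloneDX-style SBOM for one firmware image.
SBOM_JSON = """
{
  "bomFormat": "CycloneDX",
  "specVersion": "1.5",
  "components": [
    {"type": "library", "name": "openssl", "version": "1.1.1k"},
    {"type": "library", "name": "zlib", "version": "1.2.13"},
    {"type": "library", "name": "busybox", "version": "1.35.0"}
  ]
}
"""

# Hypothetical advisory: component name -> versions reported as affected.
ADVISORY = {
    "openssl": {"1.1.1j", "1.1.1k"},
    "libxml2": {"2.9.10"},
}


def affected_components(sbom: dict, advisory: dict) -> list[tuple[str, str]]:
    """Return (name, version) for each SBOM component listed in the advisory."""
    hits = []
    for comp in sbom.get("components", []):
        name, version = comp.get("name"), comp.get("version")
        if version in advisory.get(name, set()):
            hits.append((name, version))
    return hits


sbom = json.loads(SBOM_JSON)
print(affected_components(sbom, ADVISORY))  # [('openssl', '1.1.1k')]
```

Without the component list, the same question would require unpacking and inspecting the binary itself, which is the slow path the section describes.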

4. Operational Constraints That Block Traditional Security Measures

Industrial environments face major deployment challenges:

- Air-gapped or intermittently connected networks

- Decades-old firmware with no vendor support

- Real-time performance requirements that limit scanning or patching

- Multi-vendor PLC fleets with inconsistent update workflows

These realities make it difficult to rely solely on patch management, network segmentation, or perimeter defenses.

5. Physical and Operational Consequences

Because ICS software interacts directly with physical equipment, software vulnerabilities can lead to:

- Manipulation of process parameters

- Shutdown of production lines

- Damage to equipment or the environment

- Safety incidents impacting human operators

Software risk in ICS is therefore both digital and physical, with potentially severe outcomes.

Three Steps to Deploy RunSafe in Existing ICS Security Programs

Given the depth of software risk in modern ICS environments, organizations need solutions that both reduce exploitability and produce the evidence required for rising compliance standards.

RunSafe delivers this by integrating directly into existing development and maintenance workflows, making it possible to improve security posture without operational disruption.

1. Integrate & Automate SBOM Generation at Build Time

Begin by embedding RunSafe’s SBOM generation directly into your CI/CD pipeline or offline build environment. Whether you’re working with embedded Linux, Yocto/Buildroot builds, or legacy RTOS toolchains, RunSafe’s Identify capability produces CycloneDX-compliant SBOMs and supports all mandatory NTIA fields.

You’ll gain full component visibility—down to libraries, files, and versions, including proprietary components—so you can quickly assess exposure, audit supplier code, enforce license policy, and meet SBOM-mandate requirements for ICS environments.
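A build pipeline can also sanity-check the SBOM it produces. The following sketch checks a CycloneDX-style document for the NTIA minimum data fields (supplier, component name, version, unique identifier, dependency relationship, SBOM author, timestamp). The mapping of those fields onto CycloneDX keys is this example's assumption, and `missing_ntia_fields` is a hypothetical helper, not part of any RunSafe tool.

```python
def missing_ntia_fields(sbom: dict) -> list[str]:
    """List NTIA minimum fields that are absent from a CycloneDX-style SBOM."""
    problems = []
    meta = sbom.get("metadata", {})
    if not meta.get("timestamp"):
        problems.append("metadata.timestamp")
    if not meta.get("authors"):
        problems.append("metadata.authors")
    if not sbom.get("dependencies"):
        problems.append("dependencies")
    for index, comp in enumerate(sbom.get("components", [])):
        label = comp.get("name") or f"components[{index}]"
        if not comp.get("name"):
            problems.append(f"{label}: name")
        if not comp.get("version"):
            problems.append(f"{label}: version")
        if not comp.get("supplier", {}).get("name"):
            problems.append(f"{label}: supplier.name")
        if not (comp.get("purl") or comp.get("cpe")):
            problems.append(f"{label}: purl or cpe")
    return problems


# Hypothetical SBOM: one complete entry and one vendor blob with gaps.
sbom = {
    "bomFormat": "CycloneDX",
    "specVersion": "1.5",
    "metadata": {
        "timestamp": "2025-01-01T00:00:00Z",
        "authors": [{"name": "Build System"}],
    },
    "components": [
        {"type": "library", "name": "zlib", "version": "1.2.13",
         "bom-ref": "zlib", "supplier": {"name": "zlib project"},
         "purl": "pkg:generic/zlib@1.2.13"},
        {"type": "library", "name": "vendor-blob", "version": "2.0",
         "bom-ref": "vendor-blob"},
    ],
    "dependencies": [{"ref": "zlib", "dependsOn": []}],
}

print(missing_ntia_fields(sbom))
# ['vendor-blob: supplier.name', 'vendor-blob: purl or cpe']
```

Failing the build when this list is non-empty is one way to keep incomplete SBOMs from reaching customers or auditors.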

2. Apply Binary Hardening and Runtime Protection

Protecting your software with RunSafe Protect is as easy as installing the packages from our repositories and making a one-line change to your build environment. Once installed, you can automatically integrate Protect into your existing build process.

RunSafe Protect hardens compiled binaries against memory-based exploits and zero-day attacks by applying Load-time Function Randomization (LFR). Even legacy PLC firmware, vendor-supplied binaries, or devices in air-gapped networks can benefit from exploit mitigation. Because the protection works independently of patch status, you’re reducing risk proactively while maintaining operational continuity.

3. Monitor Protected Devices

Deploy RunSafe’s Monitor capability across your hardened device fleet to capture crash indicators, detect unusual behavior patterns, and differentiate between benign failures and potential exploit attempts.

Next Steps to Secure Your ICS Environment

Securing industrial control systems requires more than perimeter defenses or periodic patch cycles. It demands protections that operate inside the software itself—across legacy devices, modern embedded platforms, and complex software supply chains.

RunSafe provides that foundation by hardening binaries against exploitation, generating accurate SBOMs at build time, and delivering operational insight through lightweight monitoring. Together, these capabilities give ICS operators a practical path to strengthen system integrity, reduce exploitability, and demonstrate compliance with the world’s most important cybersecurity standards.

With the right protections applied directly to the software running your critical processes, resilience becomes achievable rather than aspirational.

Request a consultation to get started with RunSafe or to assess your embedded software security and risk reduction opportunities.

FAQs About RunSafe and ICS Compliance

Can RunSafe protect legacy ICS devices that cannot be patched?

Yes. RunSafe hardens compiled binaries at runtime to let operators secure decades-old PLCs, RTUs, and embedded controllers even when patches are unavailable or unable to be applied.

Does RunSafe impact real-time performance or PLC scan cycles?

RunSafe takes an agentless approach with very low performance impact and has been deployed successfully in many resource-constrained environments.

Can RunSafe be deployed in completely air-gapped ICS environments?

Yes. RunSafe supports offline licensing and local-only operations. All analysis, hardening, and SBOM generation can be performed inside secure, disconnected networks. This is particularly valuable for ICS environments with strict isolation requirements or regulatory prohibitions against cloud connectivity.

How does RunSafe help with IEC 62443 SR 3.4 and software integrity requirements?

IEC 62443 SR 3.4 requires mechanisms to prevent unauthorized modification or execution of software components. RunSafe addresses this by making memory-based vulnerabilities, including zero days, non-exploitable. Even if a vulnerability exists, exploit attempts fail, helping operators maintain software integrity even on unpatched or legacy systems.

Does RunSafe support NIST 800-82 and NERC CIP incident response and integrity controls?

Yes. RunSafe contributes to several core NIST and NERC CIP requirements:

- System and information integrity: Prevents unauthorized code execution by blocking exploit chains.

- Configuration and vulnerability management: SBOMs accelerate identification of impacted assets.

- Continuous monitoring: Crash analytics and exploit indicators support incident detection and reporting.

This helps operators produce clear, evidence-backed compliance documentation.

How does RunSafe support SBOM requirements in the EU Cyber Resilience Act and U.S. federal mandates?

RunSafe generates build-time SBOMs, capturing every component, including low-level C/C++ libraries and embedded dependencies often missed by scanning tools. RunSafe’s Identify capability produces CycloneDX-compliant SBOMs and supports all mandatory NTIA fields.

Can RunSafe help reduce zero-day exploitability in ICS or embedded software?

Yes. RunSafe’s patented Load-time Function Randomization defends software from memory-based zero days by altering the memory layout of an application each time it runs. This prevents attackers from leveraging memory-based vulnerabilities, such as buffer overflows, to attack a device or gain remote control.
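The effect of changing the memory layout on every run can be shown with a toy model. The sketch below is a conceptual simulation only, not RunSafe's implementation: it shuffles a handful of hypothetical function names into address slots on each "load" and counts how often an address the attacker hard-coded from one observed run is still correct.

```python
import random

# Hypothetical function names standing in for a firmware image's code.
FUNCTIONS = ["init", "read_sensor", "parse_packet",
             "write_actuator", "update_firmware", "log_event"]


def load_image(functions, rng, base=0x1000, slot=0x100):
    """Place functions in a shuffled order and return name -> address."""
    order = list(functions)
    rng.shuffle(order)
    return {name: base + i * slot for i, name in enumerate(order)}


# The attacker studies one load and hard-codes a target address.
observed = load_image(FUNCTIONS, random.Random(0))
hardcoded = observed["update_firmware"]

# On later loads the layout is reshuffled, so the address is usually stale.
trials = 1000
hits = sum(
    load_image(FUNCTIONS, random.Random(seed))["update_firmware"] == hardcoded
    for seed in range(1, trials + 1)
)
print(f"hard-coded address valid in {hits} of {trials} loads")
```

With only six slots the guess still lands roughly one time in six. A real binary contains far more functions, so the chance that a hard-coded address remains valid shrinks accordingly.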

How does RunSafe differ from network-based ICS security tools?

Network tools (IDS, DPI, segmentation) detect or contain attacks, but they cannot prevent exploitation inside the device. RunSafe operates within the software itself, transforming binaries so they cannot be exploited even if the attacker reaches the device or bypasses perimeter defenses. It complements—not replaces—existing ICS security layers by addressing the root of software exploitability.

What types of ICS platforms and RTOS environments does RunSafe support?

RunSafe supports a broad range of ICS platforms, including: VxWorks, QNX, Yocto, Buildroot, Linux, Bare Metal, and more. View a full list of integrations and supported platforms here.

The post Safety Meets Security: Building Cyber-Resilient Systems for Aerospace and Defense appeared first on RunSafe Security.

In this environment, a software glitch, a failed component, or a cyber intrusion can have the same catastrophic impact: a system that doesn’t behave as intended when lives and missions are on the line.

Patrick Miller, Product Manager at Lynx, has spent his career at the intersection of safety, security, and performance, working across aerospace, defense, enterprise cloud, and embedded systems.

In this Q&A, Patrick shares how architecture, separation, and long-term thinking can help engineers and product teams design resilient weapons systems.

1. You have a unique background that spans aerospace, defense, enterprise cloud, and now safety-critical embedded systems. What lessons from those different domains help you approach safety and security in critical systems today?

Patrick Miller: The biggest lesson is that every domain taught me something different about risk. Security is as much about governance and auditability as it is about technical controls. In defense and aerospace, I learned that resilience isn’t just about preventing failures, it’s about designing systems that degrade gracefully when something does go wrong.

But the connective tissue across all these domains is, particularly in product management, you must know your customer’s actual need, not just the problem you think you’re solving.

Early in my career, I realized that the “why” behind a security requirement often matters more than the requirement itself.

2. Safety and security are often treated as separate priorities. How do you see them intersecting, particularly in aerospace and defense?

Patrick: They are not separate priorities but two expressions of the same goal: keep the system doing what it’s supposed to do, when it’s supposed to do it, and in the face of adversity.

Safety asks, “What happens when things might fail?”

Security asks, “What happens when someone tries to make them fail?”

In aerospace, especially, legacy systems were designed in an era when the threat model was simple: physical tampering or insider threat. Now we have connected avionics and software-defined platforms with attack surfaces we didn’t have to think about ten years ago.

A safety failure and a security failure can look identical from the flight deck, and both result in the aircraft not doing what the crew intended. The intersection is in architecture. If you design a system with strong separation, say at the kernel level, between safety-critical functions and everything else, you’re solving for both.

3. The industry is moving toward software-defined platforms in aircraft and defense systems. What new risks does this introduce—and what opportunities to improve resilience?

Patrick: A key risk is that a vulnerability in one aircraft or subsystem can theoretically affect an entire fleet. However, this shift also creates the opportunity to build security and resilience from day one, rather than bolting it on afterward.

When the industry is trying to add security to 20-year-old real-time operating systems to modernize embedded platforms for customers, it’s like retrofitting a house with a new foundation. Software-defined systems let you architect with separation, modularity, and defense-in-depth from the start. You can implement zero-trust and cyber-resilience principles in real-time environments in ways you couldn’t with monolithic systems.

4. Aerospace and defense systems often have very long lifecycles. What challenges does that create for sustaining cybersecurity, and how can architecture choices mitigate some of the risks?

Patrick: This is one of the most complex problems in the industry. A crewed aircraft certified today will probably still be flying forty years from now, just as there are crewed aircraft certified forty years ago still flying today. By then, the threat landscape will have evolved dramatically. Cryptographic algorithms considered secure today may be obsolete in a post-quantum world.

The mitigation is architectural resilience. First, design with modularity so that security updates can be applied surgically to vulnerable components without recertifying the entire system. Second, implement strong separation so that compromising one module doesn’t cascade through the entire aircraft. Finally, at the program level, it means thinking about your supply chain and third-party dependencies not as static decisions, but as ongoing risk management.

5. UAVs and other unmanned systems are becoming disposable assets in some missions. How does that change the calculus of how much safety vs. security you embed at the system level?

Patrick: The step-change in the evolution of UAVs flips the traditional paradigm on its head. With a crewed aircraft, safety is paramount because at least one, or more likely, many human lives are at stake. In a contested environment, the calculus is different for a single-use tactical UAV. You might accept a higher technical risk if it means fielding new capabilities faster.

Here’s where I push back on the “disposable” framing: even if the platform is disposable, the capability often isn’t. If an adversary captures and reverse-engineers your UAV, they can gain insights into your tactics, sensors, and comm architecture, which requires constant iteration to stay ahead. So even for “disposable” systems, I think about: What’s worth protecting architecturally?

You can design a UAV that’s tactically expendable but still prevents an adversary from extracting intelligence or spoofing commands.

6. How can teams modernize embedded platforms without introducing unacceptable risk or performance tradeoffs?

Patrick: This is where data-driven prioritization really matters. The temptation is always to boil the ocean: add every new security feature, refactor the entire architecture, and implement the latest standards. Instead, measure the opportunity cost of each modernization decision. What are my top three security gaps today? What are my top three performance bottlenecks? Which modernization efforts address both?

Implementing strong separation boundaries improves real-time performance by preventing one task from blocking another, particularly in multi-core processors. At a practical level, invest in your DevSecOps pipeline early and institute automated testing, static analysis, and security scanning to build confidence to modernize faster.

7. Lynx emphasizes separation kernels and mixed-criticality architectures. What does “designing for separation” mean in practice, and how does it help balance safety and cybersecurity in real-time environments?

Patrick: “Designing for separation” means treating compartmentalization as a primary architectural concern, not an afterthought. It’s the difference between saying “we’ll secure the perimeter and hope nobody gets through” and saying “someone eventually gets through, here are the north-south and east-west limits that prevent further intrusion, here is how we know the threat actor entered and how to neutralize them.”

In practice, that means defining your trust boundaries early. What functions are safety-critical? What modules are network-connected? What functions are mission-critical but not safety-critical? A separation kernel enforces those boundaries between partitions at the hypervisor level: one partition can’t access another’s memory; one partition can’t interfere with another’s timing. In real-time systems, timing is paramount, so this isolation protects both safety and security simultaneously.
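
The two guarantees described above, no shared memory and no shared CPU time, can be checked against a static partition plan before anything runs. The sketch below is only an illustration: the `Partition` fields, the partition names, the addresses, and the single-core "major frame" schedule are all assumptions, not a LYNX MOSA.ic configuration format.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Partition:
    """Hypothetical static partition description (illustrative only)."""
    name: str
    mem_start: int      # first byte of the partition's memory region
    mem_size: int       # region length in bytes
    slot_start_us: int  # start of the CPU time window within the major frame
    slot_len_us: int    # length of the CPU time window


def _overlaps(a_start: int, a_len: int, b_start: int, b_len: int) -> bool:
    # Half-open intervals [start, start + len) overlap when each starts before the other ends.
    return a_start < b_start + b_len and b_start < a_start + a_len


def check_separation(partitions: list[Partition], major_frame_us: int) -> list[str]:
    """Return human-readable violations; an empty list means the plan is consistent."""
    problems = []
    for p in partitions:
        if p.slot_start_us + p.slot_len_us > major_frame_us:
            problems.append(f"{p.name}: time window exceeds the major frame")
    for a, b in combinations(partitions, 2):
        if _overlaps(a.mem_start, a.mem_size, b.mem_start, b.mem_size):
            problems.append(f"{a.name}/{b.name}: memory regions overlap")
        # Assumes all partitions share one core, so time windows must be disjoint.
        if _overlaps(a.slot_start_us, a.slot_len_us, b.slot_start_us, b.slot_len_us):
            problems.append(f"{a.name}/{b.name}: time windows overlap")
    return problems


plan = [
    Partition("flight_control", 0x1000_0000, 0x0010_0000, 0, 4_000),
    Partition("mission_apps", 0x1010_0000, 0x0020_0000, 4_000, 3_000),
    Partition("network_stack", 0x102F_0000, 0x0010_0000, 6_500, 3_000),
]
for problem in check_separation(plan, major_frame_us=10_000):
    print(problem)
```

In this made-up plan the network-connected partition collides with the mission partition in both memory and time, which is exactly the kind of boundary violation a separation kernel is meant to rule out by construction.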

8. What emerging standards or regulations do you anticipate having the greatest impact on how manufacturers secure and certify safety-critical systems?

Patrick: The Department of Defense’s Software Fast-Track initiative is pushing contractors to adopt modern DevSecOps practices and accelerate secure software delivery timelines. We’re also watching how NIST SP 800-53 and SP 800-171 requirements cascade down through the supply chain, forcing even smaller tier-two and tier-three suppliers to implement rigorous security controls.

The Software Bill of Materials (SBOM) mandate is particularly interesting because it’s forcing manufacturers to have real visibility into their software dependencies, which is foundational for long-term supply chain security.

On the civil aviation side, DO-326A and DO-356 are pushing the industry from a compliance-checkbox approach toward continuous monitoring and threat assessment throughout the aircraft lifecycle. Zero-trust mandates across both defense and critical infrastructure are also driving architectural changes at the platform level, which aligns well with what we’re building at Lynx.

9. You’re both a technologist and a pilot. From an operator’s perspective, what would “built-in cyber resilience” mean to you sitting in the cockpit?

Patrick: It means I can trust the displays and controls to follow my inputs and trust the instruments. It means that if there’s a compromise somewhere in the system or sensor, it fails safely, perhaps with a display warning, but not with an unexpected output from the aircraft. A pilot already has enough mental load as it is and does not want to have to think or worry about cybersecurity while flying.

There’s a saying: “aviate, navigate, communicate.” Built-in resilience means the architects and engineers did their job right, so that cybersecurity is invisible to me: one more layer of redundancy that keeps the aircraft operating reliably.

10. If you could leave engineers and product teams working in critical infrastructure with one key takeaway about building secure and safe systems, what would it be?

Patrick: Know your customer’s actual problem, not just the requirement they gave you. Sometimes the requirement is a proxy for something deeper. Sometimes the customer doesn’t even know how to articulate it yet. For me, that means talking directly with customers, attending industry conferences, asking hard questions, and actively listening.

What’s your threat model today? How are you thinking about long-term sustainability? What architecture decisions are constraining you? Be honest about tradeoffs. You can’t optimize for everything, but if you understand your customer’s actual priorities, you can make product feature choices that strike the right balance.

Designing for Safety, Security, and Longevity

In aerospace and defense systems, safety and security directly overlap. The same design choices that protect flight safety also determine cyber resilience. Architectural separation, modularity, and supply chain transparency are prerequisites for survivability in the digital battlespace.

RunSafe Security and Lynx have partnered to advance this mission through technical collaboration. The integration of LYNX MOSA.ic and RunSafe Protect delivers the industry’s first DAL-A certifiable, memory-safe RTOS platform, uniting safety, security, and operational efficiency in a single solution.

Read our joint white paper for more on this partnership: Integrating RunSafe Protect with the LYNX MOSA.ic RTOS

The post Safety Meets Security: Building Cyber-Resilient Systems for Aerospace and Defense appeared first on RunSafe Security.

The post The RunSafe Security Platform Is Now Available on Iron Bank: Making DoD Embedded Software Compliant and Resilient appeared first on RunSafe Security.

The challenge for defense programs is that meeting these requirements, particularly for embedded systems, often results in increased labor and difficulty in getting tools approved and deployed.

That’s why we’re excited to share that the RunSafe Security Platform is now available on Iron Bank, the DoD’s hardened repository of pre-assessed and approved DevSecOps solutions.

As a verified publisher, RunSafe provides DoD software development teams with access to Software Bills of Materials (SBOM) generation, supply chain risk management (SCRM), and code protection through an ecosystem they already trust.

Why Iron Bank? A More Secure Path

Iron Bank is built to help defense programs quickly deploy new tools without spending months navigating approval processes. Every product listed on Iron Bank goes through rigorous security assessments, container hardening, and compliance validation. Because the containers are scanned daily for vulnerabilities, DoD teams gain access to resilient tools that keep the software supply chain secure and get software to deployment faster.

With RunSafe listed as a verified publisher, DoD teams and integrators can now pull down the platform directly from Iron Bank, making it easier for defense programs to integrate and use.

What RunSafe Brings to Iron Bank

The RunSafe Security Platform addresses some of the toughest challenges in embedded software security. Here’s what you can access through Iron Bank:

C/C++ SBOM Generation

RunSafe provides the authoritative build-time SBOM generator for embedded systems and C/C++ projects. Automating SBOM generation is critical for meeting DoD requirements, especially for unstructured C/C++ code where traditional SBOM tools fall short.

Supply Chain Risk Management

SCRM capabilities enable DoD teams to take action, not just generate a static SBOM. Teams can monitor for new vulnerabilities, enforce license policy, and verify provenance. With a complete, correct SBOM, teams can implement required SCRM practices.

Runtime Code Protection

RunSafe hardens binaries against exploitation through moving target defense (e.g., Runtime Application Self Protection (RASP)), defending weapon systems at runtime to increase resilience. This resilience extends to future zero-days, protecting fielded weapon systems between software upgrade cycles that can be two years apart.

Accessing the RunSafe Security Platform on Iron Bank

If your program is working to modernize DevSecOps practices, automate SBOM generation, or secure embedded systems without code rewrites, RunSafe is now available directly on Iron Bank.

You can find our containers by logging in and accessing the Iron Bank repository here.

Request a consultation to learn more about the RunSafe Security Platform and Iron Bank.

The post From Black Basta to Mirth Connect: Why Legacy Software Is Healthcare’s Hidden Risk appeared first on RunSafe Security.

Key Takeaways:

- Legacy medical devices running old code create growing cybersecurity and patient safety risks

- Ransomware attacks on hospitals show how downtime directly impacts clinical care

- Security transparency and SBOMs are now key to winning healthcare procurement deals

- Cyber resilience—not just compliance—will define the next era of connected healthcare

Hospitals and medical device manufacturers are facing a quiet crisis rooted not in cutting-edge exploits or nation-state hackers, but in old software.

Across healthcare, legacy code is turning routine cybersecurity weaknesses into real-world patient safety risks. The problem is simple to explain and hard to solve: devices built to last for decades are now connected to modern networks, yet run on outdated and difficult-to-patch code.

As connected devices become the norm, this technical debt has become a liability that extends to patient care.

In a discussion on the business realities of medical device cybersecurity, Shane Fry, CTO of RunSafe Security, Patrick Garrity, Security Researcher at VulnCheck, and Phil Englert, VP of Medical Device Security at Health-ISAC, examined the vulnerability and compliance landscape and where software security comes into play.

Watch the full webinar for more on medical device cybersecurity here.

When “Forever Devices” Become Forever Vulnerable

A medical device can remain in use for 15-20 years. That longevity might make sense for hospitals managing costs, but it means the software inside those devices is often frozen in time. Meanwhile, the threat landscape moves forward.

“These devices can be used for decades,” said Patrick Garrity, Security Researcher at VulnCheck. “That becomes a real challenge. Manufacturers have to be mindful of that.”

Imagine a connected infusion pump or imaging system that still relies on a Windows 7 or even XP base. Patches stop, drivers go unsupported, and over time, the device becomes a soft target on an otherwise modern network.

And because medical systems are tightly integrated—feeding data into hospital EMRs, remote dashboards, and cloud platforms—an outdated component in one corner of the network can expose an entire healthcare operation.

When Cyber Weaknesses Become a Patient Safety Risk

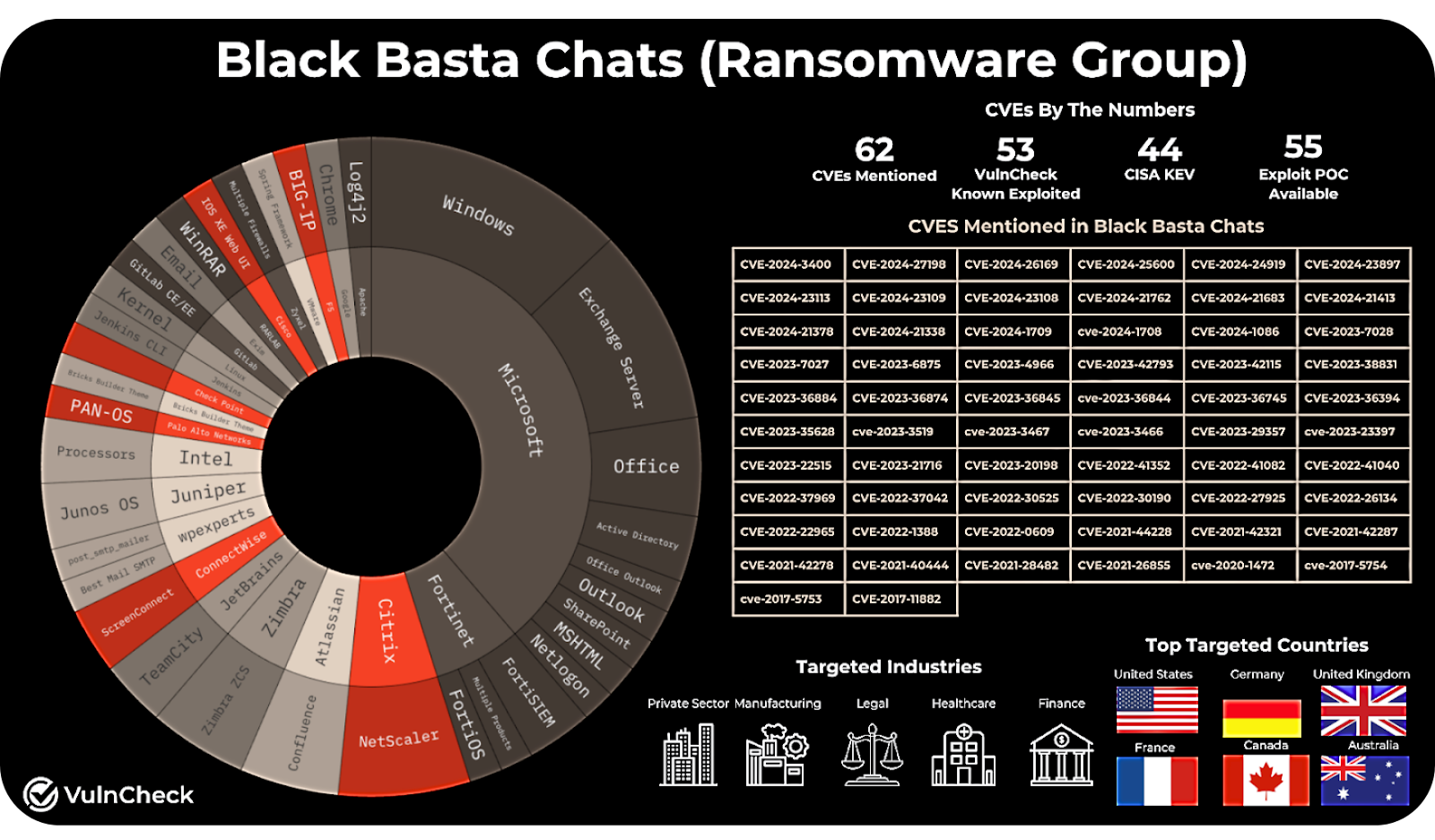

The stakes became clear during the Black Basta ransomware attack on Ascension Health in May 2024. Hospitals were forced to revert to paper-based systems. Electronic medical records, scheduling systems, and digital imaging were suddenly inaccessible.

RunSafe Security CTO Shane Fry summed up the real-world impact: “If your network’s down, you can’t do surgery.”

Beyond the immediate operational disruption, the consequences for patients were serious. Doctors faced delays accessing treatment histories. Pharmacists couldn’t verify prescriptions electronically. In some facilities, even infusion pumps and lab equipment had to be taken offline as a precaution.

Ransomware may be the headline, but the underlying vulnerability is the same—cybersecurity weaknesses left unaddressed.

As Phil Englert, VP of Medical Device Security at Health-ISAC, noted: “Cyber is a failure mode. It’s a way for things not to work or not to work as intended when you want them to.”

When software failures and weak security controls ripple into care delivery, cybersecurity is a patient safety imperative.

Where Exploitation Starts

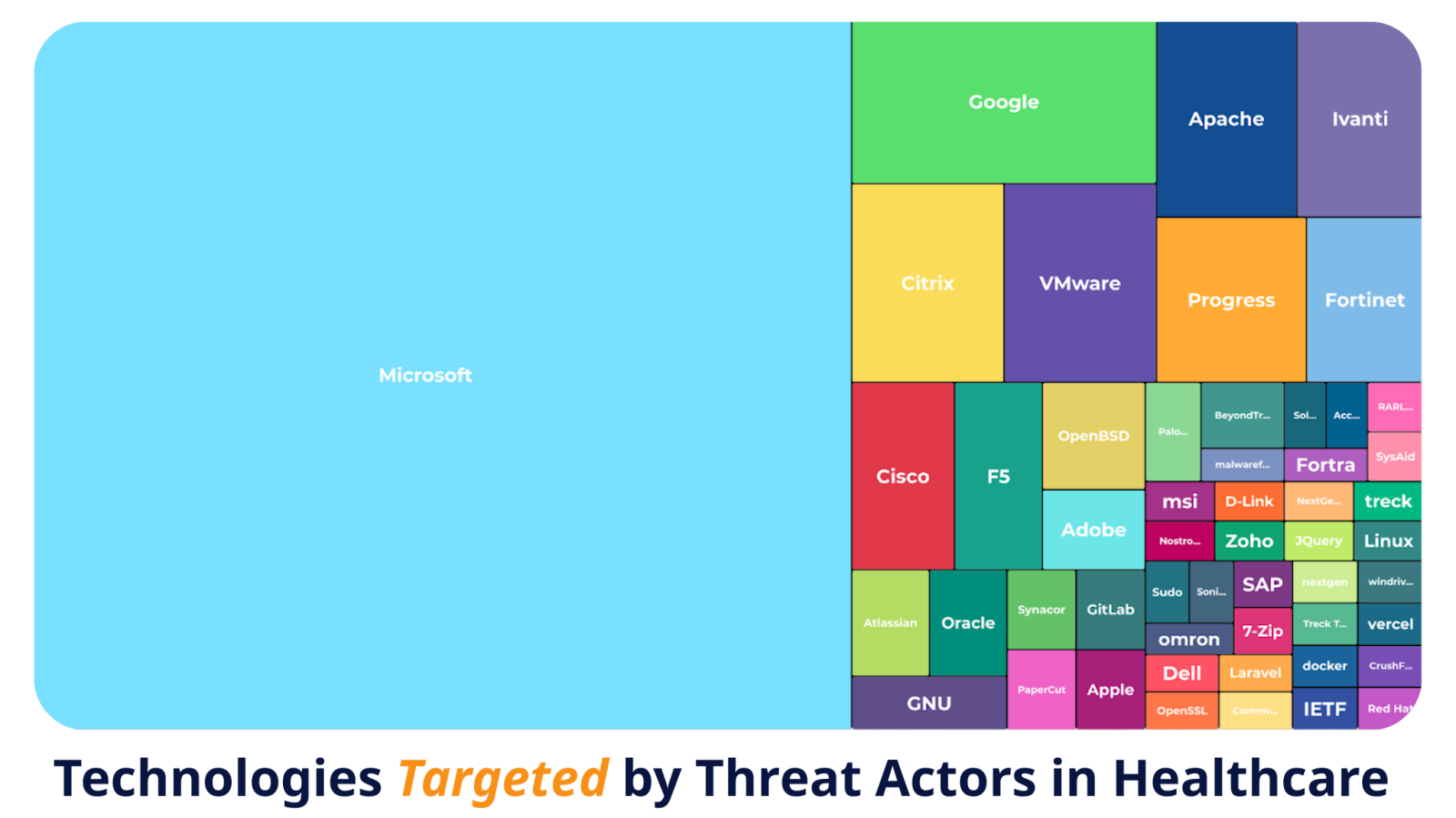

Most healthcare breaches don’t start with exotic zero-days. They start with vulnerabilities everyone already knows about.

Attackers target what’s common: outdated Microsoft servers, unpatched remote access tools, misconfigured network gateways, and open-source components left to age quietly inside medical devices.

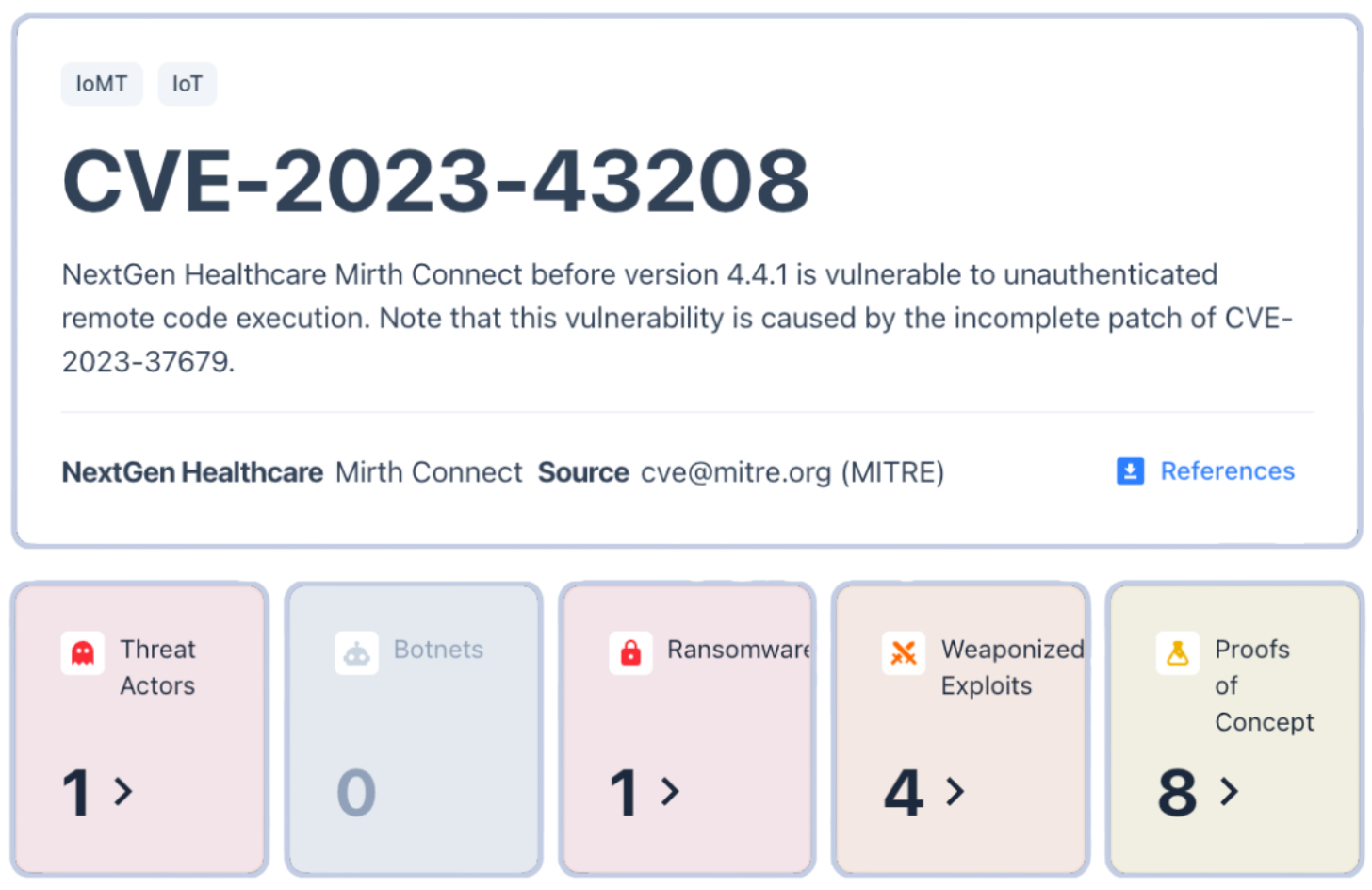

Garrity pointed to examples such as NextGen Healthcare’s Mirth Connect, a popular data exchange system exploited in ransomware campaigns. The flaw wasn’t obscure, as it had been publicly documented and patched. Yet more than a year later, vulnerable systems remained exposed online, still running unpatched versions.

“Threat actors are going to opportunistically target anything and everything. And… they’re just using what’s already published and off-the-shelf,” Garrity said. “Even outdated remote management tools or cloud connectors can become attack surfaces.”

Legacy software turns these well-known weaknesses into long-term liabilities. Once a system goes unpatched, every new connection—every piece of cloud integration or remote monitoring—adds to the risk.

The Business Risk of Standing Still

The consequences of cybersecurity weaknesses aren’t limited to downtime or headlines—they directly affect revenue and market access.

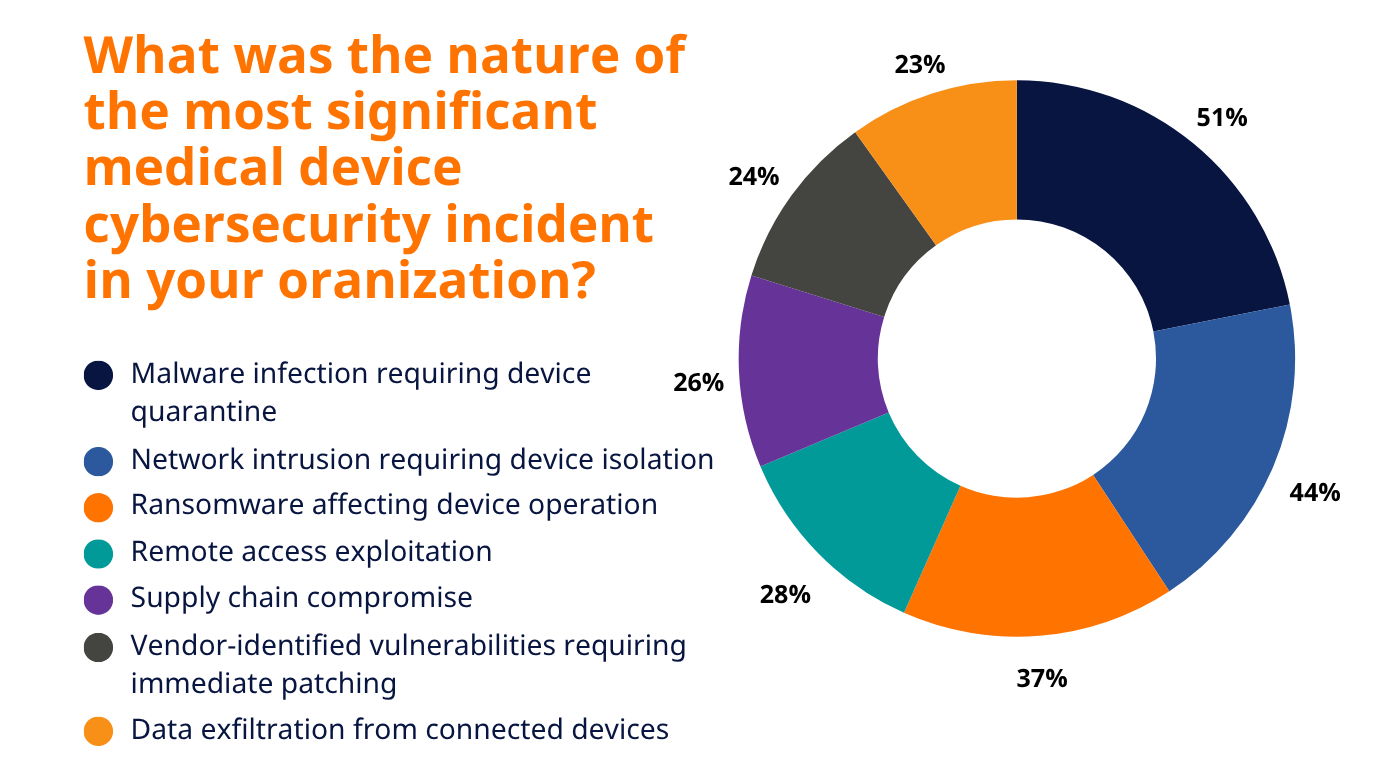

According to RunSafe Security’s 2025 Medical Device Cybersecurity Index, 83% of healthcare buyers now include cybersecurity standards in their RFPs, and 46% have declined to purchase medical devices due to security concerns. Outdated or insecure software doesn’t just pose a technical problem; it can cost sales.

For device manufacturers, the message from buyers is unmistakable: security maturity equals market readiness. Procurement teams are treating cybersecurity posture as a business criterion alongside clinical performance and cost.

Hospitals, too, are taking notice. Many are implementing procurement checklists requiring vendors to provide Software Bills of Materials (SBOMs), vulnerability response plans, and clear lifecycle support documentation. Without those, even innovative technologies struggle to clear the contracting stage.

Modernizing Security for Long Device Lifespans

Managing legacy code in a regulated, high-stakes industry isn’t easy, but it’s not impossible. The most resilient organizations are taking pragmatic, layered steps to reduce risk without overhauling every device.

1. Build-Time SBOMs

Create and maintain Software Bills of Materials (SBOMs) during the build process, not after. This ensures visibility into every dependency and allows for continuous monitoring of vulnerabilities over time.

2. Exploit-Based Prioritization

Focus patching on vulnerabilities with known exploitation in the wild, not just those with high CVSS scores.

3. Compensating Controls

Where patches aren’t possible, use segmentation, strict access controls, and runtime protections to reduce exposure.

4. Design for the Next Decade

Reserve processing and storage capacity for future updates and plan for cryptographic agility so devices remain secure over their full lifespan.

5. Transparent End-of-Life Policies

Communicate openly about support timelines and risk mitigation options. Buyers and regulators increasingly view transparency as part of good cybersecurity hygiene.
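
As a rough illustration of steps 2 and 3, the sketch below ranks findings by known exploitation first and CVSS score second, then falls back to compensating controls when a device cannot be patched. The `Finding` fields and the sample identifiers are hypothetical placeholders; the `known_exploited` flag is assumed to come from a source such as CISA's Known Exploited Vulnerabilities catalog.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    vuln_id: str           # placeholder identifiers below, not real CVEs
    component: str
    cvss: float            # CVSS base score, 0.0 to 10.0
    known_exploited: bool  # assumed to come from a known-exploited catalog
    patchable: bool        # False when the fielded device cannot take a patch


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Known-exploited findings first, then descending CVSS score."""
    return sorted(findings, key=lambda f: (not f.known_exploited, -f.cvss))


def plan_action(finding: Finding) -> str:
    """Patch when possible; otherwise rely on compensating controls."""
    if finding.patchable:
        return "patch"
    return "compensating controls"


findings = [
    Finding("EXAMPLE-0001", "imaging-viewer", 9.8, known_exploited=False, patchable=True),
    Finding("EXAMPLE-0002", "interface-engine", 7.5, known_exploited=True, patchable=True),
    Finding("EXAMPLE-0003", "legacy-os", 8.1, known_exploited=True, patchable=False),
]
for f in prioritize(findings):
    print(f.vuln_id, f.component, plan_action(f))
```

Note that the 9.8-scored finding lands last here: without evidence of exploitation, it ranks below two lower-scored findings that attackers are already using.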

From Compliance to Resilience

Healthcare is shifting from a “check-the-box” approach to one centered on resilience. Regulators are reinforcing that shift: the FDA’s premarket guidance now requires SBOMs and vulnerability management plans, while the EU’s Cyber Resilience Act pushes similar expectations globally.

The result is a new baseline where cyber hygiene and secure design aren’t just best practices; they’re business necessities.

“If you don’t know what’s in the software you’re deploying to your networks, then how can you know that a vulnerability affects you?” Fry said. “Without that Software Bill of Materials, you’re going to be very limited.”

For manufacturers and healthcare providers alike, addressing legacy code is about security and trust. It’s about maintaining operational continuity. And ultimately, it’s about keeping patients safe in a world where every connected device is part of the care equation.

As Fry put it: “Everything that we should be doing in cybersecurity should be viewed through … the lens of making sure the patient can get the best care they need as quickly as they can.”

For more on medical device challenges and defenses, listen to our panel discussion: From Ransomware to Regulation: The New Business Reality for Medical Device Cybersecurity.

The post Stopping Copyleft: Integrating Software License Compliance & SBOMs appeared first on RunSafe Security.

Embedded engineering teams are aware of the risks and looking for tooling that surfaces license risk early in the development pipeline. RunSafe’s license compliance feature addresses this need by detecting licenses in your code and enforcing your organization’s risk profile to prevent the release of affected code. Teams can ship faster, with the permissions they’ve set, and without risking IP.

Why License Compliance Is Harder for Embedded Systems

Software license compliance means following the legal terms attached to every piece of code in your product, whether proprietary, open source, or vendor-supplied. When you ship a device with firmware or deploy a software update, you’re accepting the obligations tied to every component inside.

Embedded teams face unique challenges:

- Heavy static linking: Increases likelihood of triggering copyleft obligations

- Long device lifecycles (10–20 yrs): Mistakes persist for years and can’t be easily fixed

- Mixed vendor + OSS inputs: Hard to track attribution and inheritance

- No package manifests in C/C++: License detection becomes manual and error-prone

How Copyleft Licenses Raise Compliance Stakes

Copyleft is a licensing approach that uses copyright law to keep software open. If you distribute a program containing copyleft-licensed code, you’re typically required to release your modifications—and sometimes related components—under the same copyleft license. That reciprocal obligation separates copyleft from permissive licenses.

If a copyleft license is violated, the potential implications include:

- Mandatory source code disclosure: Courts can order you to release not just the copyleft component but also your modifications, build scripts, and sometimes the proprietary code you linked against.

- Increased risk of exploitation once code is public: Released source code makes it easier for malicious actors to analyze and exploit latent vulnerabilities. Embedded devices are already at risk, and the last thing teams want is to accelerate exploit development. (While the goal is to prevent reaching this point through license compliance, if a team finds itself in this situation, RunSafe Protect hardens binaries against exploitation, even when source code is exposed.)

- Legal action and settlements: License holders can seek injunctions to halt product sales, plus damages and legal fees that quickly reach six or seven figures.

- Product recall costs: Fixing a license violation after devices ship may require firmware updates, replacement units, or new packaging with proper notices.

- Reputation damage: In regulated industries like medical devices or defense, license violations signal weak governance and can disqualify you from contracts.

- Supply chain disruption: Customers may freeze orders until you demonstrate compliance, and certification bodies may suspend approvals.

The longer violations exist in shipped products, the more devices are affected and the harder remediation becomes.

How Does Copyleft Happen? A Common Scenario

- A developer adds a library to meet a deadline

- The project statically links against a GPL component

- No manifest exists or an SBOM lacks the detail needed for tooling to flag the risk

- Firmware ships

- A customer, auditor, or regulator requests the SBOM

- Legal discovers the violation, and remediation becomes painful and expensive

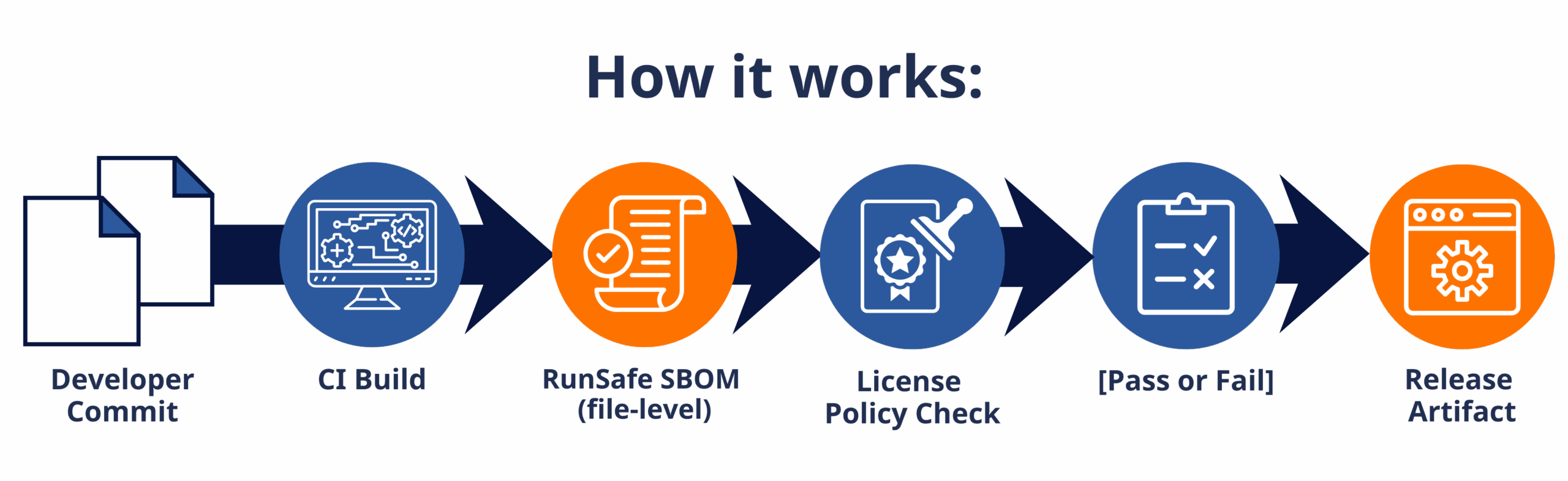

Without an accurate SBOM and automated license enforcement, it’s difficult to stop copyleft from entering your codebase. That’s why embedded teams need RunSafe’s file-level SBOMs and license compliance, which surface licenses early and then allow you to block or approve them before release based on your specific risk profile.

How RunSafe Improves License Compliance and Addresses Copyleft Risk

RunSafe’s license compliance feature gives embedded teams control over licenses to prevent violations before code ships. We combine build-time Software Bill of Materials (SBOM) generation with automated policy enforcement to simplify and standardize the process.

Step 1: Set Your Organization’s License Rules

RunSafe lets you define clear licensing policies across your entire organization. Support for project-level license compliance is planned, which will allow more granularity and flexibility in how you configure your rules. Specify which licenses are approved, which are banned, and which require review. Whether you need to block GPL variants, flag AGPL dependencies, or restrict any copyleft terms, RunSafe allows you to set rules that make sense for your organization.

In the RunSafe Security Platform, you’ll see a list of all the licenses in your software detected by RunSafe’s build-time SBOM generator. You can also view a list of common open-source licenses and choose which to allow or deny. By defaulting to the licenses actually present in your software’s SBOM, your organization can focus on dependencies in use without getting bogged down by unnecessary compliance reviews.

Step 2: Choose Your Enforcement Posture for New Licenses

This is where RunSafe balances control with practicality. For any license you haven’t explicitly classified (unset licenses), you choose one of two approaches:

Allow by default: New dependencies flow through automatically unless they match your explicitly denied list. This keeps development moving while blocking known copyleft risks.

Deny by default: Any unrecognized license halts the pipeline until you review and approve it. This guarded posture ensures maximum protection as your dependencies evolve.
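
The two postures can be pictured as a small decision function. The sketch below is a generic illustration, not RunSafe's implementation or API: the `LicensePolicy` class and its names are hypothetical, and licenses are identified by SPDX identifiers as an assumption.

```python
from __future__ import annotations

from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    DENY = "deny"


class LicensePolicy:
    """Hypothetical policy: explicit allow/deny lists plus a posture for unset licenses."""

    def __init__(self, allowed: set[str], denied: set[str], default: Decision):
        overlap = allowed & denied
        if overlap:
            raise ValueError(f"licenses both allowed and denied: {sorted(overlap)}")
        self.allowed = allowed
        self.denied = denied
        self.default = default

    def evaluate(self, license_id: str) -> Decision:
        if license_id in self.denied:
            return Decision.DENY
        if license_id in self.allowed:
            return Decision.ALLOW
        # Unset license: fall back to the chosen enforcement posture.
        return self.default


allowed = {"MIT", "BSD-3-Clause"}
denied = {"GPL-3.0-only", "AGPL-3.0-only"}
detected = ["MIT", "GPL-3.0-only", "Zlib"]  # "Zlib" is unset in this example

for posture in (Decision.ALLOW, Decision.DENY):
    policy = LicensePolicy(allowed, denied, default=posture)
    print(posture.value, {lic: policy.evaluate(lic).value for lic in detected})
```

Only the unset license changes outcome between the two runs; explicitly classified licenses are decided the same way under either posture.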

Step 3: Enable Automatic Pipeline Enforcement and Alerts

Once configured, enforcement happens automatically in your CI/CD pipeline. As your CI tool runs your builds, RunSafe generates SBOMs and checks them against your license policy. Pipelines containing denied licenses will fail with clear output in your logs, identifying exactly which licenses triggered the block.
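
To make the mechanism concrete, here is a minimal sketch of what a license gate in a pipeline could look like. It is not RunSafe's tooling: it assumes the SBOM is available as CycloneDX-style JSON (`components[].licenses[].license.id`), the denied list and component names are invented, and license expressions are not handled.

```python
from __future__ import annotations

import json

DENIED_LICENSES = {"GPL-2.0-only", "GPL-3.0-only", "AGPL-3.0-only"}  # example policy only


def find_denied(sbom: dict, denied: set[str]) -> list[tuple[str, str]]:
    """Return (component name, license id) pairs that match the denied list."""
    hits = []
    for component in sbom.get("components", []):
        for entry in component.get("licenses", []):
            license_id = entry.get("license", {}).get("id")
            if license_id in denied:
                hits.append((component.get("name", "<unnamed>"), license_id))
    return hits


def gate(sbom_text: str, denied: set[str]) -> int:
    """Log each violation and return a process-style exit code (0 = pass, 1 = fail)."""
    hits = find_denied(json.loads(sbom_text), denied)
    for name, license_id in hits:
        print(f"DENIED: {name} uses {license_id}")
    return 1 if hits else 0


# Invented SBOM fragment for illustration.
EXAMPLE_SBOM = """
{
  "bomFormat": "CycloneDX",
  "specVersion": "1.5",
  "components": [
    {"name": "zlib", "version": "1.3.1",
     "licenses": [{"license": {"id": "Zlib"}}]},
    {"name": "example-gpl-lib", "version": "0.1.0",
     "licenses": [{"license": {"id": "GPL-3.0-only"}}]}
  ]
}
"""

exit_code = gate(EXAMPLE_SBOM, DENIED_LICENSES)
print("exit code:", exit_code)
```

In a real pipeline step, the returned code would be passed to `sys.exit()` so that the CI tool marks the build as failed, and the printed lines would show which component triggered the block.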

Step 4: Adapt as Your Dependencies Grow

As your team adds new libraries or updates existing ones, newly detected licenses automatically appear in your unset list. Depending on your enforcement posture, they either flow through (if allowed by default) or stop the pipeline for review before releasing code (if denied by default). You can adjust individual license decisions at any time, moving them between allowed and denied as your policy matures.

SBOMs: The Prerequisite for License Enforcement

You can’t enforce license policy if you don’t know what’s in your build. SBOMs solve that, but most SBOM tools fail in C/C++ environments because license data lives in:

- File headers

- Top-level LICENSE files

- Repository metadata

- Vendor drop-ins with no manifest at all

Without file-level SBOM accuracy, compliance becomes guesswork. This is where RunSafe differentiates itself. By generating SBOMs at the file level at build time, RunSafe can accurately capture license information for embedded projects, which leads to greater confidence in license compliance.
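
As a simplified illustration of file-level detection, the sketch below looks for `SPDX-License-Identifier` tags near the top of C/C++ source files. This is a generic technique shown under stated assumptions, not a description of how RunSafe's generator works: many files carry free-text license headers or no header at all, which this sketch reports as `None`. The function names and the 20-line window are arbitrary choices.

```python
from __future__ import annotations

import re
from pathlib import Path
from typing import Optional

# Capture the identifier or expression up to the end of the line or a closing "*/".
SPDX_TAG = re.compile(r"SPDX-License-Identifier:\s*([^\r\n*]+)")
SOURCE_SUFFIXES = {".c", ".h", ".cc", ".cpp", ".hpp"}


def license_from_header(text: str, max_lines: int = 20) -> Optional[str]:
    """Return the SPDX tag found in the first max_lines lines, if any."""
    head = "\n".join(text.splitlines()[:max_lines])
    match = SPDX_TAG.search(head)
    return match.group(1).strip() if match else None


def scan_tree(root: Path) -> dict[str, Optional[str]]:
    """Map each C/C++ file under root to its detected license tag (or None)."""
    results = {}
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix in SOURCE_SUFFIXES:
            text = path.read_text(encoding="utf-8", errors="replace")
            results[str(path.relative_to(root))] = license_from_header(text)
    return results


print(license_from_header("/* SPDX-License-Identifier: MIT */\nint add(int a, int b);"))
print(license_from_header("// SPDX-License-Identifier: GPL-2.0-only\n#include <stdio.h>"))
print(license_from_header("int main(void) { return 0; }"))
```

Files that come back as `None` are the interesting ones for review, since they are where manual or more sophisticated detection is still needed.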

Protect Your Proprietary Code

By enforcing license policy at build time and pairing it with accurate SBOMs, you can reduce copyleft risk before it reaches production.

Interested in giving it a try? Sign up for a free trial of the RunSafe Security Platform.

FAQs About Copyleft License Compliance

Does dynamic linking avoid copyleft obligations?

Sometimes, but it depends on the license. LGPL permits dynamic linking with conditions, while the GPL’s treatment of dynamic linking remains ambiguous. For embedded systems, which overwhelmingly use static linking, copyleft risk is much higher.

How often should SBOMs be generated?

Every build. Automating SBOM generation ensures accuracy as dependencies change.

What tools can prevent GPL or copyleft code from entering my firmware build?

Look for tools that generate accurate SBOMs at build-time and enforce license policy in the CI/CD pipeline. The most effective solutions automatically flag or block high-risk licenses before code is merged or released. RunSafe provides this capability by combining file-level build-time SBOMs with pipeline enforcement for embedded projects.

How do I automatically enforce open-source license policies in CI/CD?

You need pipeline-level enforcement, not manual reviews. Modern tools can apply license rules (allow, deny, or review) and stop risky code from reaching release or merge. RunSafe integrates directly into your CI/CD pipeline, ensuring that disallowed licenses never reach release branches or production firmware.

How can I detect licenses in C/C++ code when there’s no package manifest?

Most scanners depend on manifests and package metadata, which C/C++ projects often lack. Instead, you need file-level detection that reads license headers and repository artifacts. RunSafe’s build-time SBOM generator does exactly this, making license visibility possible even in C/C++ codebases.

How can I block copyleft without slowing down developers?

Choose a tool that supports both “allow-by-default” and “deny-by-default” modes. That gives developers a fast flow for most work and strict control where it is needed. RunSafe supports both, so teams can balance velocity and risk.

How can I automate GPL license compliance for firmware?

Automation requires two layers: (1) license detection via build-time SBOMs, and (2) policy enforcement in CI/CD. When these steps are automated, teams avoid manual review and prevent GPL from slipping into release artifacts. RunSafe delivers both in a single workflow.

How do I enforce open-source license rules in GitHub or GitLab CI?

Use a tool that integrates into your CI/CD pipeline and can block merges or releases based on your set license policies. RunSafe ties directly into GitHub and GitLab CI pipelines so enforcement happens automatically with each build.

The post The Decade Ahead in Aerospace Cybersecurity: AI, Resilience, and Disposable Weapons Systems appeared first on RunSafe Security.

Key Takeaways:

- AI will transform aerospace cybersecurity, helping find vulnerabilities faster but also creating new ones

- Collaboration among government, primes, and vendors is needed to protect connected systems

- Disposable and low-cost UAVs still require strong security to ensure mission success

- Cyber resilience and modular architectures will define the next decade of secure aerospace operations

The aerospace and defense sector is entering a new chapter. With networked systems, distributed architectures, and mission-critical connectivity, cybersecurity is as vital as physical shielding.

In a recent discussion on aerospace cybersecurity strategy, Shane Fry, CTO of RunSafe Security, and Patrick Miller, Product Manager at Lynx, explored how the next five to ten years will reshape how we defend aerospace assets.

What follows are four key trends that highlight where the industry is headed.

Watch the full webinar for more on aerospace cybersecurity here.

1. AI’s Dual-Edged Role in Cyber and Operations

Artificial intelligence is emerging as both a revolutionary tool and a potential liability in aerospace cybersecurity. Shane noted how AI is reshaping nearly every aspect of defense systems, from vulnerability detection to operational optimization.

“There’s a lot of really cool research being done in penetration testing and finding vulnerabilities and using AI to assist operators in security,” he said.

AI is now helping engineers and analysts identify weaknesses faster and automate portions of cyber defense previously handled manually. But as Shane pointed out, the same technology that accelerates innovation can also magnify risks.

“One of the things that is a negative,” he warned, “is many of these new capabilities are trained on software that’s not secure.”

Large language models and AI code-generation tools often learn from open-source repositories riddled with known flaws. That means they might produce software that appears sound but hides vulnerabilities—like memory corruption or buffer overflows—deep within the codebase.

“We’re going to see a rise in software vulnerabilities,” Shane predicted, “as more developers use these code-assistants to produce faster code that looks good but may actually have subtle memory corruption vulnerabilities in them.”

For a sector where software directly underpins mission safety and national defense, that’s a sobering reality. The next phase of AI integration, Shane cautioned, may bring turbulence before it delivers real progress.

“The next six months to a year or two years might be really rough,” he admitted, “but ultimately, the progress will be for the overall good.”

2. Disposable Weapons & UAVs: Security at Speed and Scale

One of the most striking shifts Shane highlighted was the rise of low-cost, “disposable” weapon systems, particularly unmanned aerial vehicles (UAVs). At a time when speed and affordability are driving procurement decisions, these assets are designed to complete their mission, but not necessarily to return.

“When we talk about disposable UAVs,” Shane explained, “there’s a lot of interest in having lower-cost solutions that we don’t care if they survive the mission. We just need them to accomplish the mission.”

That philosophy is reshaping how system owners think about design and risk. Yet, Shane cautioned that the push for cheaper, faster production can come at a dangerous price.

“As we strive to cut as much as we can to bring costs down,” he said, “we’ve got to make sure that we’re still doing enough security, enough safety so that we can accomplish the mission.”

In a world where digital compromise can have physical consequences, even a minor software flaw could be catastrophic. “We don’t want to end up in a situation where a UAV flying overhead has a trivial vulnerability that gets exploited, and the drone turns around and bombs an allied target,” Shane said.

That vivid example underscores a growing tension in modern defense programs: how to balance affordability and agility with assurance and control. The Department of Defense’s efforts to modernize its software approval and certification processes for future readiness will be critical.

A core component of that, Shane noted, is “having good, accurate SBOMs and being able to understand what the risk is in your software that you’re shipping.”

Accurate SBOMs and a clear understanding of software risk will also help ensure that even low-cost or disposable systems can be deployed responsibly.

In short, the aerospace industry is entering an era where scale and security must coexist. Disposable systems may not be built to last, but their cybersecurity must endure long enough to protect the mission, the data, and the allies they serve.

3. Ecosystem Collaboration: Government, Primes & Vendors

Cyber defense of aerospace is not a solo endeavor. Governments, aerospace primes, and vendors will need to align to defend complex systems.

Shane observed that modern systems integrate legacy code and are becoming increasingly interconnected, which heightens risk. To manage this complexity, the industry is leaning into partnerships and certified deployment paths.

For example, Lynx’s secure hypervisor technology, when paired with RunSafe’s memory protection, delivers a layered, modular architecture that strengthens system isolation and resilience in the field.

When discussing future integration of AI, Patrick noted: “Lynx has taken the approach of enabling those safety-focused or non-critical applications to run alongside, but totally separate from, the safety-critical applications and subjects within that same hardware.”

This collaborative, layered approach is one example of working together to reduce overall risk.

4. Cyber Resilience: The Imperative for Operational Continuity

Overarching all of these trends is the principle of resilience. In an environment where AI may introduce new vulnerabilities, collaboration expands the ecosystem, geopolitics raises the stakes, and disposable systems proliferate, aerospace defenders must build platforms that can endure, adapt, and recover.

As Shane explained: “You’re going to get the most out of your hardware and your systems by having a more robust and modular system, with security baked in.”

He continued: “Having a modular system lets you get new software, new features, new capabilities onto your platforms faster.”

Patrick noted that the partnership model between Lynx and RunSafe demonstrates what “defense-in-depth” looks like in practice.

“With the Lynx and RunSafe partnership,” Patrick said, “pairing that with RunSafe’s memory protection, you’re able to remove a whole category of exploits you’d otherwise have to defend against.”

The Decade Ahead

The next decade for aerospace cybersecurity will be defined by convergence: of AI and assurance, of collaboration and software supply chain transparency, and of pre-emptive design and agile operations.

Building resilience and adopting safety-focused engineering will enable faster innovation without leaving cybersecurity by the wayside.

For more on RunSafe and Lynx’s work in aerospace cybersecurity, read our white paper on “Integrating RunSafe Protect with the LYNX MOSA.ic RTOS.”

The post The Decade Ahead in Aerospace Cybersecurity: AI, Resilience, and Disposable Weapons Systems appeared first on RunSafe Security.

The post Defending the Factory Floor: How to Outsmart Attackers in Smart Manufacturing appeared first on RunSafe Security.

That’s the question host Paul Ducklin explored with Joseph M. Saunders, CEO and Founder of RunSafe Security, in an episode of Exploited: The Cyber Truth.

From the limitations of traditional security models to the growing importance of software quality, this conversation revealed why the cybersecurity playbook for industrial automation needs a rewrite for today’s threats.

The Challenge of Smart Manufacturing: Complexity Meets Connectivity

Modern factories are no longer isolated networks of robots and sensors. They’re deeply connected ecosystems, merging OT (Operational Technology) and IT, often with cloud integration and industrial IoT devices.

As Joe put it, “Connected devices bring productivity gains, but also new levels of security consideration.”

That connectivity has blurred the once-clear boundaries between factory floor systems and IT networks. Attackers can now exploit weak spots at every layer—from programmable logic controllers (PLCs) to human-machine interfaces (HMIs), SCADA systems, and even the cloud.

Why the Purdue Model Falls Short

The Purdue Model, long a foundation for industrial security, assumes clear segmentation between these layers. But in an era where a single sensor might communicate via Bluetooth, Wi-Fi, or cellular, those boundaries collapse.

Attackers no longer need “write access” to cause harm. Even read-only access—like viewing operational data or production schedules—can provide immense competitive or geopolitical advantage.

Example: Knowing how much of a certain alloy a manufacturer has in stock could reveal supply chain bottlenecks or production delays.

Compliance vs. Security: A Distinction that Matters

Too many organizations still approach cybersecurity as a checkbox exercise. Standards like IEC 62443, developed by the ISA and IEC, help guide secure industrial automation practices—but they’re only as good as the commitment behind them.

Joe cautioned that “checkbox compliance” misses the point. Security should be viewed as an extension of software quality and operational excellence.

Secure by Design Starts in Development

Building security into the software development lifecycle (SDLC)—from coding and testing to patching—is essential. Companies that automate and embed these processes don’t just produce safer software; they create better products, faster.

Extending the Life of Legacy Systems

Many industrial environments still rely on legacy systems with equipment that can’t easily be replaced or updated. These older devices, often running outdated firmware, represent some of the most vulnerable points in a factory’s network.

Memory Safety to the Rescue

Joe explained how memory safety protections can extend the secure life of legacy systems without requiring new software agents or hardware upgrades.

RunSafe’s Load-Time Function Randomization provides runtime protection that prevents exploitation even when patches aren’t available—adding security without disrupting operations.

“You can extend the life of legacy systems by applying memory safety protection in a way that doesn’t add software or slow them down,” Joe said.

That’s not just risk mitigation—it’s cost savings, uptime assurance, and long-term resilience.

Seeing the Whole Picture: From Software Supply Chain to Factory Floor

Securing smart factories also requires a focus on understanding the entire software supply chain. To protect devices, you need to know what software is built into each one.

Manufacturers, suppliers, and customers each play a role in ensuring the integrity of the products they build and deploy.

Joe recommends evaluating partners and suppliers based on:

- Governance and internal security policies

- Compliance with standards like IEC 62443

- Awareness of known threat actors and their tactics

- Transparency in Software Bills of Materials (SBOMs)

- Differentiation through resilient product design

RunSafe’s recent Medical Device Industry Report showed similar trends. Organizations are starting to reject insecure products altogether, even when they meet performance requirements. That mindset shift is now reaching industrial automation.

From “Good Enough” to Resilient by Design

The conversation ultimately returned to a core truth: Security isn’t about perfection—it’s about resilience.

When software is developed securely, vulnerabilities are reduced. When runtime protections are added, attackers are denied an easy path to exploit. And when organizations collaborate across the software supply chain, entire industries become more secure.

“Security isn’t a checkbox—it’s a reflection of quality,” Joe reminded listeners.

Take the Next Step: Secure Your Industrial Systems

RunSafe Security protects embedded software across critical infrastructure, delivering automated vulnerability identification and software hardening to defend the software supply chain and critical industrial systems without compromising performance or requiring code rewrites.

Learn more about how we do it in this case study: “Vertiv Enhances Critical Infrastructure Security for Embedded Systems with RunSafe Integration.”

The post AI Is Writing the Next Wave of Software Vulnerabilities — Are We “Vibe Coding” Our Way to a Cyber Crisis? appeared first on RunSafe Security.

For decades, cybersecurity relied on shared visibility into common codebases. When a flaw was found in OpenSSL or Log4j, the community could respond: identify, share, patch, and protect.

AI-generated code breaks that model. Instead of reusing an open source component and complying with its license restrictions, a developer can use AI to write a near-identical version without using the open source code itself.

I recently attended SINET New York 2025, joining dozens of CISOs and security leaders to discuss how AI is reshaping our threat landscape. One key concern surfaced repeatedly: Are we vibe coding our way to a crisis?

Losing the Commons of Vulnerability Intelligence

At the SINET New York event, Tim Brown, VP Security & CISO at SolarWinds, pointed out that with AI coding, we could lose insights into common third-party libraries.

He’s right. If every team builds bespoke code through AI prompts, including code that is similar to but different from open source components, there’s no longer a shared foundation. Vulnerabilities become one-offs. If we are not using the same components, we lose the ability to share vulnerability information. And that could lead to a situation where your product has a vulnerability that somebody else also has but doesn’t know about.

The ripple effect is enormous. Without shared components, there’s no community-driven detection, no coordinated patching, and no visibility into risk exposure across the ecosystem. Every organization could be on its own island of unknown code.
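
The loss of shared visibility can be illustrated with a minimal sketch. It assumes a simplified advisory feed keyed by component name and version; the component names, versions, and advisory ID below are hypothetical placeholders, not real packages or real advisories.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """One entry in a simplified, hypothetical SBOM."""
    name: str
    version: str


# Hypothetical advisory feed: (name, version) -> advisory IDs.
ADVISORIES = {
    ("examplelib", "1.2.0"): ["EXAMPLE-ADVISORY-0001"],
}


def affected(sbom):
    """Return the components in the SBOM that match a known advisory."""
    hits = {}
    for component in sbom:
        key = (component.name, component.version)
        if key in ADVISORIES:
            hits[component] = ADVISORIES[key]
    return hits


# Team A reuses the shared component, so the advisory reaches them.
team_a_sbom = [Component("examplelib", "1.2.0")]

# Team B had an AI assistant write a near-identical parser in-house.
# Even if it carries the same flaw, there is no shared identifier to match.
team_b_sbom = [Component("inhouse-generated-parser", "0.0.1")]

team_a_hits = affected(team_a_sbom)
team_b_hits = affected(team_b_sbom)
```

In this sketch, `team_a_hits` contains the advisory while `team_b_hits` is empty. The empty result does not mean Team B is safe, only that nothing in the shared feed can describe code that exists in one place.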

AI Multiplies Vulnerabilities

Even more concerning, AI doesn’t “understand” secure coding the way experienced engineers do. It generates code based on probabilities and its training data. A known vulnerability could easily reappear in AI-generated code, alongside any new issues.

Veracode’s 2025 GenAI Code Security Report found that “across all models and all tasks, only 55% of generation tasks result in secure code.” That means that “in 45% of the tasks the model introduces a known security flaw into the code.”

For those of us at RunSafe, where we focus on eliminating memory safety vulnerabilities, that statistic is especially concerning. Memory-handling errors — buffer overflows, use-after-free bugs, and heap corruptions — are among the most dangerous software vulnerabilities in history, behind incidents like Heartbleed, URGENT/11, and the ongoing Volt Typhoon campaign.

Now, the same memory errors could appear in countless unseen ways. AI is multiplying risk one line of insecure code at a time.

Signature Detection Can’t Keep Up

Nick Kotakis, former SVP and Global Head of Third-Party Risk at Northern Trust Corporation, underscored another emerging problem: signature detection can’t keep up with AI’s ability to obfuscate its code.

Traditional signature-based defenses depend on pattern recognition — identifying threats by their known fingerprints. But AI-generated code mutates endlessly. Each new build can behave differently and conceal new attack vectors.

In this environment, reactive defenses like signature detection or rapid patching simply can’t scale. By the time a signature exists, the exploit may already have evolved.
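
As a rough illustration of why exact-match fingerprints struggle here, the sketch below treats a signature as a SHA-256 hash of the source text. This is a deliberate simplification (real detection products use richer signatures), and the two code variants are hypothetical examples written for this illustration.

```python
import hashlib


def signature(code: str) -> str:
    """Fingerprint the exact text of a snippet with SHA-256."""
    return hashlib.sha256(code.encode("utf-8")).hexdigest()


# Two hypothetical snippets that behave identically but differ textually,
# as two separate AI generations of the same request might.
VARIANT_A = "def total(values):\n    return sum(values)\n"
VARIANT_B = (
    "def total(items):\n"
    "    acc = 0\n"
    "    for item in items:\n"
    "        acc += item\n"
    "    return acc\n"
)

# Assume only the first variant has been seen and fingerprinted.
KNOWN_SIGNATURES = {signature(VARIANT_A)}


def is_flagged(code: str) -> bool:
    """Return True only when the exact fingerprint is already known."""
    return signature(code) in KNOWN_SIGNATURES


def run(code: str, values):
    """Execute a snippet and call the `total` function it defines."""
    namespace = {}
    exec(code, namespace)
    return namespace["total"](values)


same_behavior = run(VARIANT_A, [1, 2, 3]) == run(VARIANT_B, [1, 2, 3])
flagged_a = is_flagged(VARIANT_A)
flagged_b = is_flagged(VARIANT_B)
```

Both variants compute the same result, yet only the first matches the known fingerprint. Any textual change, however small, produces a different hash, so a defense that waits for a known signature will always trail code that is regenerated on demand.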

Tackling the Memory Safety Challenge

So how do we protect against vulnerabilities that no one has seen — and may never report?

At RunSafe, we focus on one of the most persistent and damaging categories of software risk: memory safety vulnerabilities. Our goal is to address two of the core challenges introduced by AI-generated code: